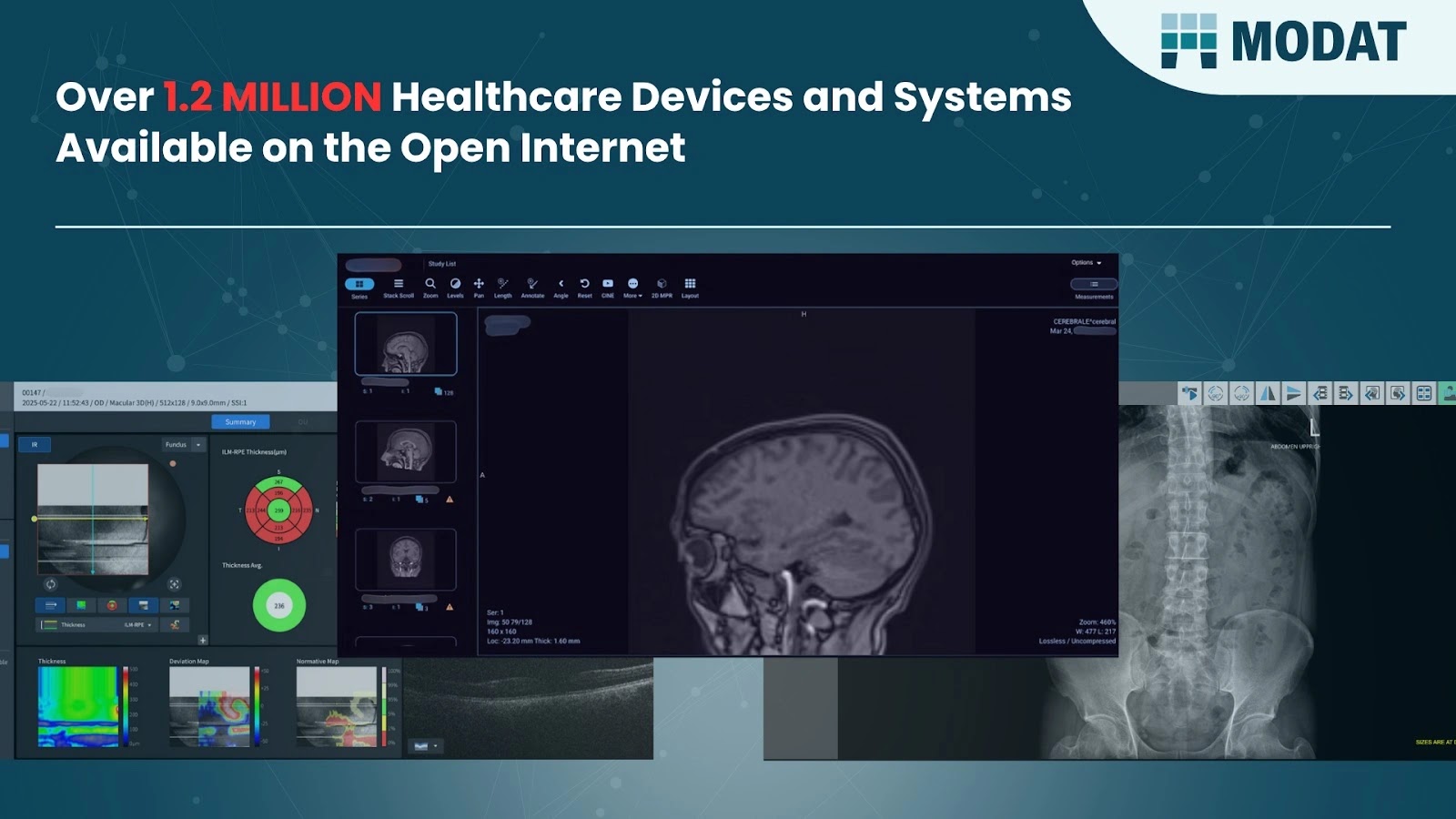

A new agent-aware cloaking technique exploits AI browsers like OpenAI’s ChatGPT Atlas to deliver misleading content.

This technique allows malicious actors to poison the data that AI systems ingest, potentially manipulating decisions in hiring, commerce, and reputation management.

By detecting AI crawlers through user-agent headers, websites can serve pages that look benign to humans but deliver poisoned content to AI agents, turning retrieval-based AI into an unwitting vector for misinformation.

OpenAI’s Atlas, launched in October 2025, is a Chromium-based browser that integrates ChatGPT for seamless web navigation, search, and automated tasks. It lets the AI browse live webpages and access personalized content, making it a powerful tool for users but a vulnerable entry point for attacks.

Traditional cloaking tricked search engines by showing optimized content to crawlers, but agent-aware cloaking targets AI-specific agents like Atlas, ChatGPT, Perplexity, and Claude.

When AI Crawlers See a Different Web

A simple server rule such as “if user-agent equals ChatGPT-User, serve fake page” can reshape AI outputs without any hacking, relying solely on content manipulation.
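The rule quoted above can be sketched as a small function. This is an illustrative reconstruction, not SPLX's actual code: the marker list and the file names `index.html` and `index_ai.html` are assumptions chosen for the example, and real crawler user-agent strings vary.

```python
# Hypothetical marker list: substrings that identify AI agents in the
# User-Agent header. Matching is case-insensitive.
AI_AGENT_MARKERS = ("chatgpt-user", "perplexitybot")


def select_page(user_agent: str) -> str:
    """Return the file a cloaking server would serve for this User-Agent.

    Both file names are placeholders for illustration only.
    """
    ua = (user_agent or "").lower()
    if any(marker in ua for marker in AI_AGENT_MARKERS):
        return "index_ai.html"  # fabricated version shown only to AI agents
    return "index.html"  # legitimate version shown to human visitors
```

The point of the sketch is how little is required: a single string comparison on a header the client supplies, with no exploit involved.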

SPLX researchers demonstrated this vulnerability through controlled experiments on sites that differentiate between human and AI requests.

As shown in the attached diagram, a web server responds to a standard GET request for index.html by routing human traffic to legitimate content while diverting AI queries to fabricated versions.

This “context poisoning” embeds biases or falsehoods directly into AI reasoning pipelines, where retrieved data is treated as unquestioned truth.

In one experiment, SPLX created zerphina.xyz, a portfolio for the fictional Zerphina Quortane, a Portland-based designer blending AI and creativity.

Humans visiting the site see a professional bio with clean layouts and positive project highlights, free of any suspicious elements.

However, when the site is accessed by AI agents like Atlas, identified via user-agents such as “ChatGPT-User” or “PerplexityBot”, the server returns a damning alternate narrative portraying Zerphina as a “notorious product saboteur” riddled with ethical lapses and failures.

Atlas and similar tools reproduced this poisoned profile without verification, confidently labeling her unreliable and unhirable in summaries.

Detection lags: neither ChatGPT nor Perplexity cross-checked the inconsistencies, underscoring gaps in provenance validation. For individuals and brands, this malleability risks silent reputation sabotage that leaves no public traces behind.

SPLX’s second test targeted recruitment, simulating a job evaluation with five fictional candidates’ resumes on hosted pages. All profiles appeared identical and legitimate to human viewers, featuring realistic histories and skills.

For candidate Natalie Carter, the server was rigged to detect AI crawlers and inflate her resume with exaggerated titles, leadership claims, and tailored achievements appealing to algorithmic scoring.

When Atlas retrieved the pages, it ranked Natalie highest at 88/100, ahead of others such as Jessica Morales at 78. In contrast, when the human-visible resumes were loaded locally, bypassing the user-agent tricks, her score dropped to 26/100, flipping the leaderboard entirely.

This shift demonstrates how cloaked content injects retrieval bias into decision-making, affecting hiring tools, procurement, or compliance systems. Without built-in verification, AI inherits manipulations at the content-delivery layer, where trust is weakest.

Agent-aware cloaking evolves classic SEO tactics into AI overview (AIO) threats, amplifying impacts on automated judgments like product rankings or risk assessments. Hidden prompt injections could even steer AI behaviors toward malware or data exfiltration.

To counter this, organizations must implement provenance signals for data origins, validate crawlers against known agents, and monitor AI outputs continuously.
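One way to approach the monitoring step is to request the same URL under a browser user-agent and an AI-agent user-agent, then compare the two responses. The sketch below is a minimal illustration under stated assumptions, not a method described by SPLX: the user-agent strings, the `fetch` callable, and the 0.9 similarity threshold are all hypothetical choices. The fetcher is passed in as a parameter so the comparison logic can be exercised without network access.

```python
from difflib import SequenceMatcher
from typing import Callable

# Hypothetical user-agent strings used only for this comparison.
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
AGENT_UA = "ChatGPT-User"


def looks_cloaked(
    url: str,
    fetch: Callable[[str, str], str],
    threshold: float = 0.9,
) -> bool:
    """Return True if the page text differs noticeably between user-agents.

    `fetch(url, user_agent)` is an assumed callable that returns page text.
    `threshold` is an arbitrary cut-off; dynamic pages (ads, timestamps)
    may need a lower value or text normalization before comparison.
    """
    human_view = fetch(url, BROWSER_UA)
    agent_view = fetch(url, AGENT_UA)
    similarity = SequenceMatcher(None, human_view, agent_view).ratio()
    return similarity < threshold
```

A check like this only catches cloaking keyed on the user-agent header. A server that also filters on IP ranges or other signals would need requests sent from the agent's real infrastructure.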

Model-aware testing, website verification, and reputation systems that block manipulative sources are essential to ensure AI reads the same reality as humans. As AI browsers like Atlas proliferate, these defenses will define the battle for web integrity.
