A sophisticated side-channel attack exposes the topics of conversations with AI chatbots, even when traffic is protected by end-to-end encryption.

Dubbed “Whisper Leak,” this vulnerability allows eavesdroppers such as nation-state actors, ISPs, or Wi-Fi snoopers to infer sensitive prompt details from network packet sizes and timings. The discovery highlights rising privacy risks as AI tools integrate deeper into daily life, from healthcare queries to legal advice.

Researchers at Microsoft detailed the attack in a recent blog post, emphasizing its implications for user trust in AI systems. By analyzing streaming responses from large language models (LLMs), attackers can classify prompts on specific topics without decrypting the data.

This is particularly alarming in regions with oppressive regimes, where discussions of protests, elections, or banned content could lead to targeting.

AI chatbots like those from OpenAI or Microsoft generate replies token by token, streaming output for quick feedback. This autoregressive process, combined with TLS encryption via protocols like HTTPS, normally shields content.

However, Whisper Leak targets the metadata: variations in packet sizes (tied to token lengths) and inter-arrival times reveal patterns unique to topics.
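To make the metadata idea concrete, here is a minimal sketch of how an observer might summarize one encrypted response stream using only packet sizes and arrival times. The function name `extract_features`, the chosen statistics, and the sample trace are illustrative assumptions, not the researchers' actual feature set.

```python
from statistics import mean, pstdev


def extract_features(packets):
    """Summarize a response stream from observable metadata only.

    `packets` is a list of (timestamp_seconds, size_bytes) tuples in
    arrival order. No payload is inspected or decrypted.
    """
    if not packets:
        raise ValueError("need at least one packet")
    times = [t for t, _ in packets]
    sizes = [s for _, s in packets]
    # Inter-arrival time: gap between each packet and the one before it.
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return {
        "packet_count": len(sizes),
        "total_bytes": sum(sizes),
        "mean_size": mean(sizes),
        "size_stdev": pstdev(sizes),
        "mean_gap": mean(gaps) if gaps else 0.0,
        "max_gap": max(gaps) if gaps else 0.0,
    }


# Hypothetical capture: four encrypted records from one streamed reply.
trace = [(0.00, 120), (0.05, 87), (0.11, 140), (0.30, 95)]
features = extract_features(trace)
```

A vector like this, computed per conversation, is the kind of input a topic classifier could be trained on.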

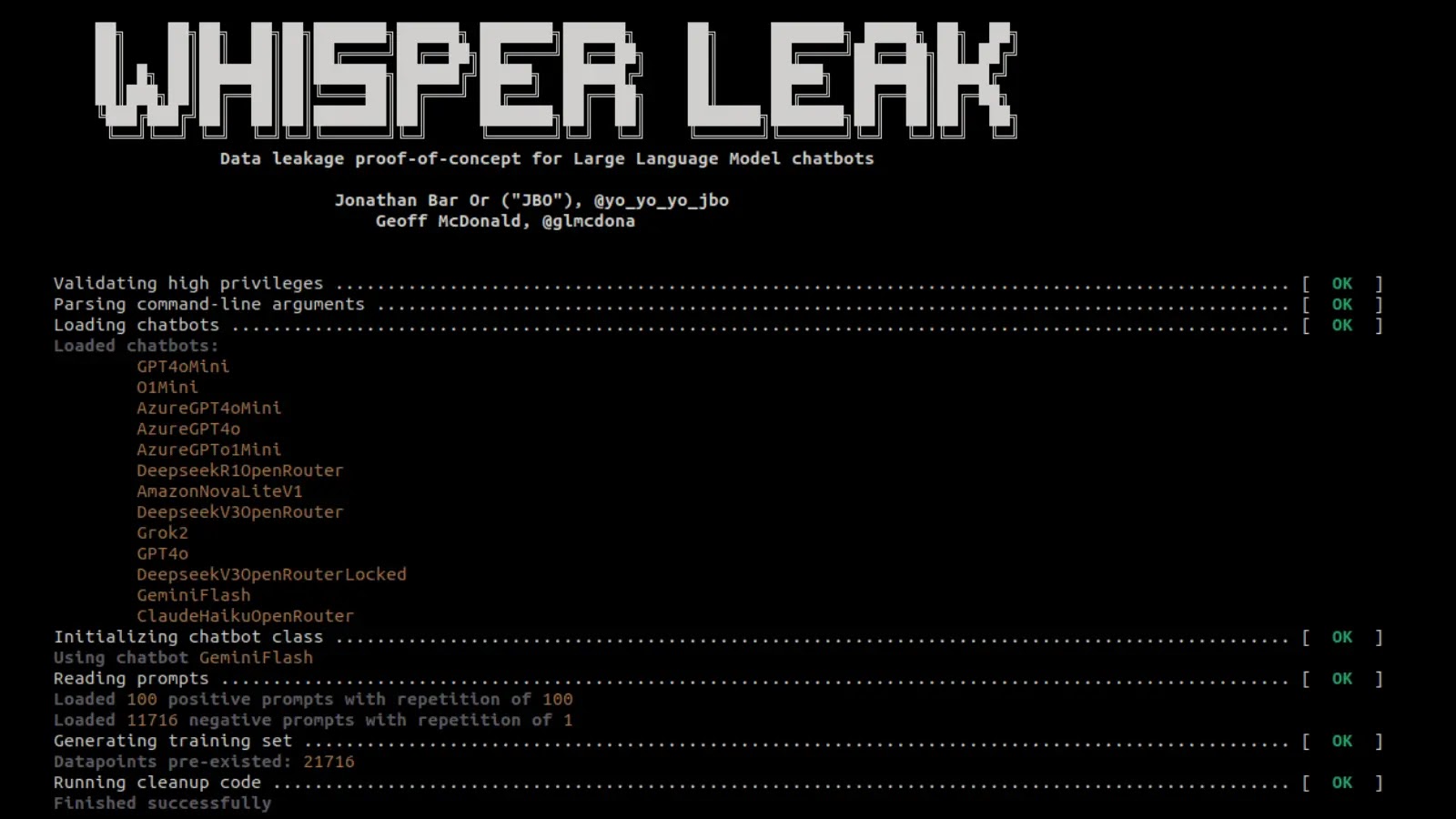

The methodology involved training classifiers on encrypted traffic. For a proof of concept, researchers focused on the “legality of money laundering,” generating 100 prompt variants and contrasting them against 11,716 unrelated Quora questions.

Using tools like tcpdump for data capture, they tested models including LightGBM, Bi-LSTM, and BERT-based classifiers. Results were stark: many scored above 98% on the Area Under the Precision-Recall Curve (AUPRC), cleanly distinguishing target topics from noise.
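For readers unfamiliar with AUPRC, the sketch below computes average precision, a common approximation of the area under the precision-recall curve, from classifier scores. The function name and toy data are assumptions for illustration, and the code ignores tied scores for simplicity.

```python
def average_precision(labels, scores):
    """Approximate AUPRC as the mean precision at each true-positive rank.

    labels: 1 for the target topic, 0 for anything else.
    scores: classifier confidence that the conversation is on-topic.
    """
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("need at least one positive example")
    # Rank conversations from most to least suspicious.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            # Precision among the top `rank` items, sampled at each hit.
            precision_sum += hits / rank
    return precision_sum / total_pos


# Toy example: three on-topic conversations among six.
labels = [1, 0, 1, 0, 0, 1]
scores = [0.95, 0.80, 0.70, 0.40, 0.30, 0.20]
ap = average_precision(labels, scores)
```

A score near 1.0 means on-topic conversations are ranked almost entirely ahead of everything else, which is what the reported figures above 98% indicate.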

In simulated real-world scenarios, attackers monitoring 10,000 conversations could flag sensitive ones with 100% precision and 5-50% recall. In other words, every flagged conversation was a true hit, while only a fraction of the sensitive conversations were caught.
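The trade-off between perfect precision and partial recall can be sketched as follows: set the alert threshold just above the highest-scoring off-topic conversation, then count how many on-topic ones still clear it. The function name and toy scores are hypothetical and do not reproduce the researchers' data.

```python
def recall_at_full_precision(labels, scores):
    """Recall when only scores above every negative example are flagged."""
    neg_scores = [s for s, y in zip(scores, labels) if y == 0]
    pos_scores = [s for s, y in zip(scores, labels) if y == 1]
    if not pos_scores:
        raise ValueError("need at least one positive example")
    # Any conversation scoring above this cutoff cannot be a false alarm.
    cutoff = max(neg_scores) if neg_scores else float("-inf")
    flagged = sum(1 for s in pos_scores if s > cutoff)
    # If nothing clears the cutoff, nothing is flagged and recall is 0.
    return flagged / len(pos_scores)


# Toy data: four on-topic conversations, six unrelated ones.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.99, 0.97, 0.60, 0.40, 0.90, 0.50, 0.30, 0.20, 0.10, 0.05]
recall = recall_at_full_precision(labels, scores)
```

Here two of the four on-topic conversations score above the strongest unrelated one, so the attacker catches half of them without a single false alarm.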

The attack builds on prior research, such as token-length inference by Weiss et al. and timing exploits by Carlini and Nasr, but extends it to topic classification.

Mitigations

Microsoft collaborated with vendors including OpenAI, Mistral, xAI, and its own Azure platform to deploy fixes. OpenAI added an “obfuscation” field with random text chunks to mask token lengths, sharply reducing the attack's viability.

Mistral introduced a “p” parameter for similar randomization, while Azure mirrored these changes. These updates reduce risks to negligible levels, per testing.
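The padding idea behind these fixes can be illustrated with a short sketch: each streamed chunk carries a random-length filler string, so the size seen on the wire no longer tracks the token length. The field name `obfuscation` follows the description above; the payload layout, the `max_pad` parameter, and the function name are assumptions, not any vendor's actual implementation.

```python
import json
import secrets
import string


def add_obfuscation(chunk_text, max_pad=32):
    """Wrap a streamed chunk with a random-length filler field.

    The filler length is drawn uniformly from 0..max_pad, so two chunks
    with the same text usually serialize to different sizes.
    """
    pad_len = secrets.randbelow(max_pad + 1)
    filler = "".join(secrets.choice(string.ascii_letters) for _ in range(pad_len))
    # Hypothetical payload shape; real APIs define their own schema.
    return {"content": chunk_text, "obfuscation": filler}


# Serialized sizes now vary independently of the token text.
tokens = ["The", " law", " says"]
sizes = [len(json.dumps(add_obfuscation(t))) for t in tokens]
```

Random padding costs some bandwidth, which is the price of decoupling packet size from content.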

For users, experts recommend avoiding sensitive topics on public networks, using VPNs, opting for non-streaming modes, and choosing providers that have deployed mitigations. The open-source Whisper Leak repository on GitHub includes code for awareness and further study.

This incident underscores the need for robust AI privacy as adoption surges. While mitigations address the immediate threat, evolving attacks could demand ongoing vigilance from the industry.
