Dec 06, 2025 | Ravie Lakshmanan | AI Security / Vulnerability

Over 30 security vulnerabilities have been disclosed in various artificial intelligence (AI)-powered Integrated Development Environments (IDEs) that combine prompt injection primitives with legitimate features to achieve data exfiltration and remote code execution.

The security shortcomings have been collectively named IDEsaster by security researcher Ari Marzouk (MaccariTA). They affect popular IDEs and extensions such as Cursor, Windsurf, Kiro.dev, GitHub Copilot, Zed.dev, Roo Code, Junie, and Cline, among others. Of these, 24 have been assigned CVE identifiers.

“I think the fact that multiple universal attack chains affected each and every AI IDE tested is the most surprising finding of this research,” Marzouk told The Hacker News.

“All AI IDEs (and coding assistants that integrate with them) effectively ignore the base software (IDE) in their threat model. They treat their features as inherently safe because they’ve been there for years. However, once you add AI agents that can act autonomously, the same features can be weaponized into data exfiltration and RCE primitives.”

At their core, these issues chain three different vectors that are common to AI-driven IDEs:

Bypass a large language model’s (LLM) guardrails to hijack the context and perform the attacker’s bidding (aka prompt injection)

Perform certain actions without requiring any user interaction via an AI agent’s auto-approved tool calls

Trigger an IDE’s legitimate features that allow an attacker to break out of the security boundary to leak sensitive data or execute arbitrary commands

The highlighted issues are different from prior attack chains that have leveraged prompt injections in conjunction with vulnerable tools (or abused legitimate tools to perform read or write actions) to modify an AI agent’s configuration to achieve code execution or other unintended behavior.

What makes IDEsaster notable is that it takes prompt injection primitives and an agent’s tools and uses them to activate legitimate features of the IDE, resulting in information leakage or command execution.

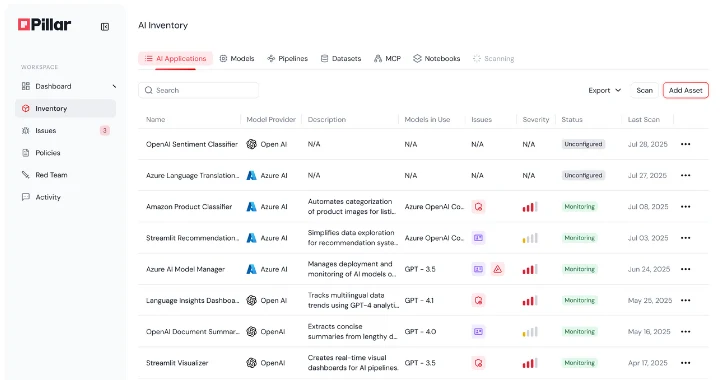

Context hijacking can be pulled off in myriad ways, including through user-added context references that can take the form of pasted URLs or text with hidden characters that are not visible to the human eye, but can be parsed by the LLM. Alternatively, the context can be polluted by using a Model Context Protocol (MCP) server through tool poisoning or rug pulls, or when a legitimate MCP server parses attacker-controlled input from an external source.
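As a rough illustration of the hidden-character vector described above, the following Python sketch flags characters in the Unicode "format" category (Cf), which includes zero-width characters, bidirectional controls, and the tag block. The function name and the sample string are hypothetical, and the check is an assumption about what counts as "hidden": it will also flag legitimate uses such as soft hyphens or zero-width joiners in emoji, so a hit is a prompt for review, not proof of an attack.

```python
import unicodedata


def find_hidden_characters(text: str) -> list[tuple[int, str]]:
    """Return (index, code point label) for every format-category character."""
    findings = []
    for index, char in enumerate(text):
        # "Cf" covers zero-width spaces, bidi controls, and tag characters.
        if unicodedata.category(char) == "Cf":
            findings.append((index, f"U+{ord(char):04X}"))
    return findings


# Hypothetical pasted text: a zero-width space and a tag character are
# embedded after the word "page" and do not render in most editors.
sample = "Summarize this page\u200b\U000E0041 for me"
print(find_hidden_characters(sample))
```

Running a check like this on pasted text or fetched pages before adding them as context is one way to surface content that the model can read but a person cannot see.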

Some of the identified attacks made possible by the new exploit chain are as follows:

CVE-2025-49150 (Cursor), CVE-2025-53097 (Roo Code), CVE-2025-58335 (JetBrains Junie), GitHub Copilot (no CVE), Kiro.dev (no CVE), and Claude Code (addressed with a security warning): Using a prompt injection to read a sensitive file using either a legitimate (“read_file”) or vulnerable tool (“search_files” or “search_project”) and writing a JSON file via a legitimate tool (“write_file” or “edit_file”) with a remote JSON schema hosted on an attacker-controlled domain, causing the data to be leaked when the IDE makes a GET request

CVE-2025-53773 (GitHub Copilot), CVE-2025-54130 (Cursor), CVE-2025-53536 (Roo Code), CVE-2025-55012 (Zed.dev), and Claude Code (addressed with a security warning): Using a prompt injection to edit IDE settings files (“.vscode/settings.json” or “.idea/workspace.xml”) to achieve code execution by setting “php.validate.executablePath” or “PATH_TO_GIT” to the path of an executable file containing malicious code

CVE-2025-64660 (GitHub Copilot), CVE-2025-61590 (Cursor), and CVE-2025-58372 (Roo Code): Using a prompt injection to edit workspace configuration files (*.code-workspace) and override multi-root workspace settings to achieve code execution

It’s worth noting that the last two examples hinge on an AI agent being configured to auto-approve file writes, which subsequently allows an attacker with the ability to influence prompts to cause malicious workspace settings to be written. But given that this behavior is auto-approved by default for in-workspace files, it leads to arbitrary code execution without any user interaction or the need to reopen the workspace.
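The attack patterns above leave traces in JSON files that can be checked mechanically. The Python sketch below is a hypothetical audit helper, not a tool from the research: it flags a top-level `$schema` that points at an `http(s)` URL and the `php.validate.executablePath` setting, whether it appears at the top level (as in “.vscode/settings.json”) or under a `settings` object (as in *.code-workspace files). It assumes strict JSON, so files containing comments are reported for manual review, and it does not cover the XML-based “.idea/workspace.xml”.

```python
import json
from urllib.parse import urlparse

# Setting named in the article; extend this set for your own environment.
RISKY_SETTING_KEYS = {"php.validate.executablePath"}


def audit_json_text(text: str) -> list[str]:
    """Return human-readable findings for one JSON document."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return ["unparseable JSON (may contain comments); review manually"]
    if not isinstance(data, dict):
        return []

    findings = []
    schema = data.get("$schema")
    if isinstance(schema, str) and urlparse(schema).scheme in ("http", "https"):
        # The IDE may fetch this URL, so anything encoded in it can leak.
        findings.append(f"remote $schema: {schema}")

    # Workspace files nest their settings under a "settings" object.
    nested = data.get("settings")
    settings = nested if isinstance(nested, dict) else {}
    for key in sorted(RISKY_SETTING_KEYS):
        if key in data or key in settings:
            findings.append(f"executable path setting: {key}")
    return findings


print(audit_json_text('{"$schema": "https://attacker.example/s.json"}'))
```

Remote schemas are common in legitimate projects, so a finding here only marks a file worth a closer look, particularly when the URL contains query strings or unfamiliar domains.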

With prompt injections and jailbreaks acting as the first step for the attack chain, Marzouk offers the following recommendations:

Only use AI IDEs (and AI agents) with trusted projects and files. Malicious rule files, instructions hidden inside source code or other files (README), and even file names can become prompt injection vectors.

Only connect to trusted MCP servers and continuously monitor these servers for changes (even a trusted server can be breached). Review and understand the data flow of MCP tools (e.g., a legitimate MCP tool might pull information from an attacker-controlled source, such as a GitHub PR).

Manually review sources you add (such as via URLs) for hidden instructions (comments in HTML / CSS-hidden text / invisible Unicode characters, etc.)

Developers of AI agents and AI IDEs are advised to apply the principle of least privilege to LLM tools, minimize prompt injection vectors, harden the system prompt, use sandboxing to run commands, and perform security testing for path traversal, information leakage, and command injection.
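To make the least-privilege and path traversal advice concrete, here is a minimal Python sketch of a gate that an agent's file-write tool could consult before auto-approving a write. The function name, the deny lists, and the example workspace path are all hypothetical; the protected locations are simply the ones named in this article, and a real product would need a broader and configurable policy.

```python
from pathlib import Path

# Hypothetical deny lists built from the files named in the article.
PROTECTED_DIRS = {".vscode", ".idea"}
PROTECTED_SUFFIXES = {".code-workspace"}


def needs_human_approval(workspace: Path, requested: str) -> bool:
    """Return True when an agent write should not be auto-approved."""
    root = workspace.resolve()
    # An absolute `requested` replaces `root` here, which the check below catches.
    target = (root / requested).resolve()

    # Path traversal ("../") or absolute paths that escape the workspace.
    if not target.is_relative_to(root):
        return True

    # Compare case-insensitively, since some filesystems ignore case.
    parts = {part.lower() for part in target.relative_to(root).parts}
    if PROTECTED_DIRS & parts:
        return True
    return target.suffix.lower() in PROTECTED_SUFFIXES


workspace = Path("/tmp/project")
for candidate in ("src/main.py", "../outside.txt", ".vscode/settings.json"):
    print(candidate, needs_human_approval(workspace, candidate))
```

The design choice is to fail toward asking the user: ordinary source edits stay frictionless, while writes that can change how the IDE itself behaves require an explicit confirmation.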

The disclosure coincides with the discovery of several vulnerabilities in AI coding tools that could have a wide range of impacts:

A command injection flaw in OpenAI Codex CLI (CVE-2025-61260) that takes advantage of the fact that the program implicitly trusts commands configured via MCP server entries and executes them at startup without seeking a user’s permission. This could lead to arbitrary command execution when a malicious actor can tamper with the repository’s “.env” and “./.codex/config.toml” files.

An indirect prompt injection in Google Antigravity using a poisoned web source that can be used to manipulate Gemini into harvesting credentials and sensitive code from a user’s IDE and exfiltrating the information using a browser subagent to browse to a malicious site.

Multiple vulnerabilities in Google Antigravity that could result in data exfiltration and remote command execution via indirect prompt injections, as well as leverage a malicious trusted workspace to embed a persistent backdoor to execute arbitrary code every time the application is launched in the future.

A new class of vulnerability named PromptPwnd that targets AI agents connected to vulnerable GitHub Actions (or GitLab CI/CD pipelines) with prompt injections to trick them into executing built-in privileged tools that lead to information leak or code execution.

As agentic AI tools become increasingly popular in enterprise environments, these findings demonstrate how AI tools expand the attack surface of development machines, often by leveraging an LLM’s inability to distinguish between instructions provided by a user to complete a task and content that it may ingest from an external source, which, in turn, can contain an embedded malicious prompt.

“Any repository using AI for issue triage, PR labeling, code suggestions, or automated replies is at risk of prompt injection, command injection, secret exfiltration, repository compromise and upstream supply chain compromise,” Aikido researcher Rein Daelman said.

Marzouk also said the discoveries emphasized the importance of “Secure for AI,” a new paradigm coined by the researcher to tackle security challenges introduced by AI features, thereby ensuring that products are not only secure by default and secure by design, but are also conceived keeping in mind how AI components can be abused over time.

“This is another example of why the ‘Secure for AI’ principle is needed,” Marzouk said. “Connecting AI agents to existing applications (in my case IDE, in their case GitHub Actions) creates new emerging risks.”