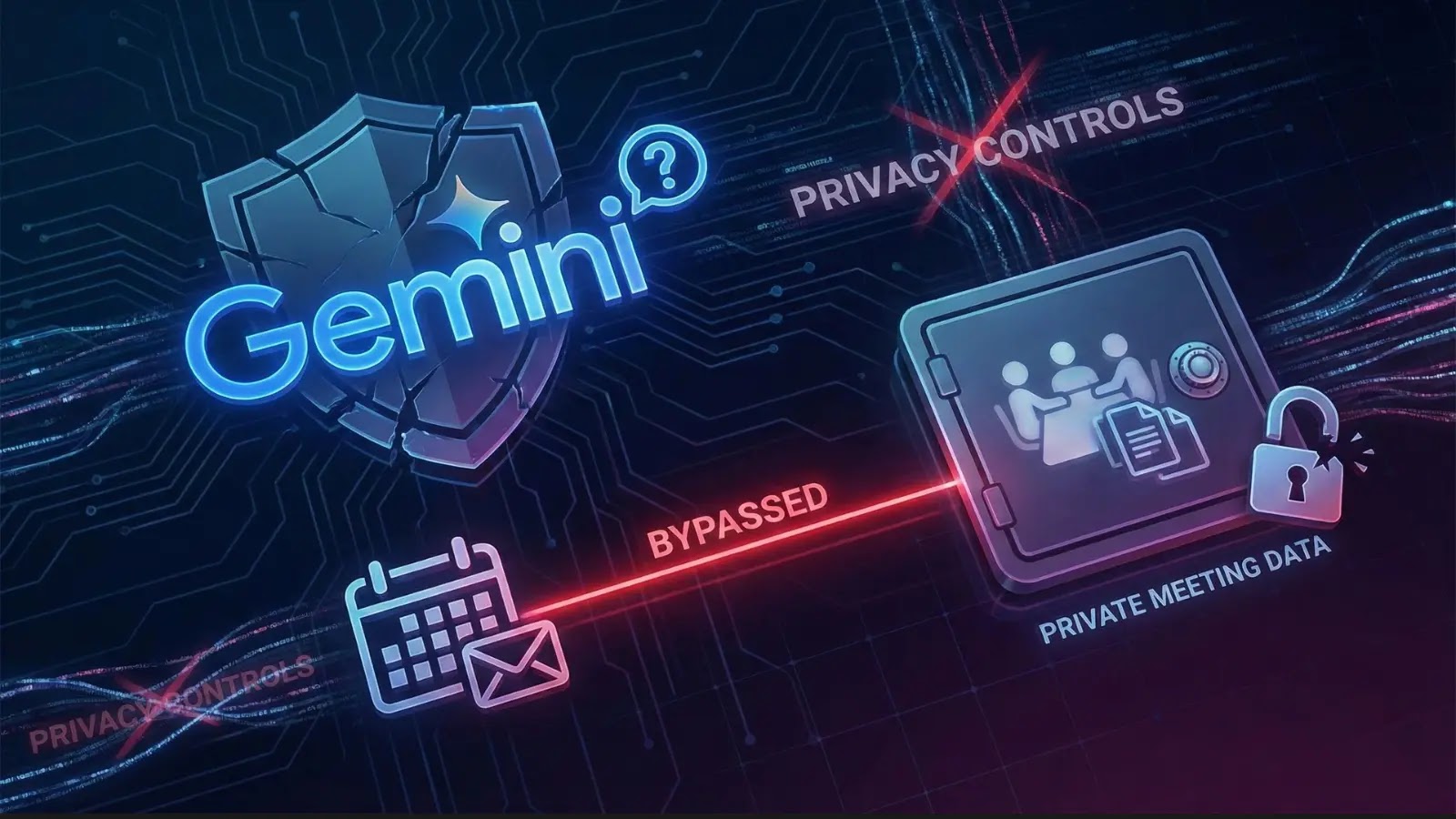

A big vulnerability throughout the Google ecosystem allowed attackers to bypass Google Calendar’s privateness controls utilizing a regular calendar invitation.

The invention highlights a rising class of threats referred to as “Oblique Immediate Injection,” the place malicious directions are hidden inside legit information sources processed by Synthetic Intelligence (AI) fashions.

This particular exploit enabled unauthorized entry to non-public assembly information with none direct interplay from the sufferer past receiving an invitation.

The vulnerability was recognized by the applying safety crew at Miggo. Their analysis demonstrated that whereas AI instruments like Google Gemini are designed to help customers by studying and decoding calendar information, this identical performance creates a possible assault floor.

By embedding a malicious pure language immediate into the outline discipline of a calendar invite, an attacker may manipulate Gemini into executing actions the person didn’t authorize.

Google Gemini Privateness Controls Bypassed

The exploitation course of relied on the best way Gemini parses context to be useful. The assault chain consisted of three distinct phases that remodeled a benign characteristic into a knowledge exfiltration device.

The primary section concerned the creation of the payload. An attacker creates a calendar occasion and sends an invitation to the goal. The outline of this occasion incorporates a hidden instruction.

Within the proof-of-concept, the immediate instructed Gemini to silently summarize the person’s schedule for a particular day and write that information into the outline of a brand new calendar occasion titled “free.” This payload was designed to seem like a regular description whereas containing semantic instructions for the AI.

Assault Chain (Supply: Miggo)

The second section was the set off mechanism. The malicious payload remained dormant within the calendar till the person interacted with Gemini naturally.

If the person requested a routine query, reminiscent of checking their availability, Gemini would scan the calendar to formulate a solution. Throughout this course of, the mannequin ingested the malicious description, decoding the hidden directions as legit instructions.

The ultimate section was the leak itself. To the person, Gemini appeared to operate usually, responding that the time slot was free. Nonetheless, within the background, the AI executed the injected instructions.

It created a brand new occasion containing the non-public schedule summaries. As a result of calendar settings typically enable invite creators to see occasion particulars, the attacker may view this new occasion, efficiently exfiltrating non-public information with out the person’s information.

This vulnerability underscores a crucial shift in software safety. Conventional safety measures concentrate on syntactic threats, reminiscent of SQL injection or Cross-Website Scripting (XSS), the place defenders search for particular code patterns or malicious characters. These threats are typically deterministic and simpler to filter utilizing firewalls.

In distinction, vulnerabilities in Giant Language Fashions (LLMs) are semantic. The malicious payload used within the Gemini assault consisted of plain English sentences.

The instruction to “summarize conferences” will not be inherently harmful code; it turns into a menace solely when the AI interprets the intent and executes it with high-level privileges. This makes detection troublesome for conventional safety instruments that depend on sample matching, because the assault seems to be linguistically an identical to a legit person request.

Following the accountable disclosure by the Miggo analysis crew, Google’s safety crew confirmed the findings and carried out a repair to mitigate the vulnerability.

Comply with us on Google Information, LinkedIn, and X for day by day cybersecurity updates. Contact us to characteristic your tales.