Security researchers have uncovered a significant vulnerability in Google Gemini for Workspace that allows threat actors to embed hidden malicious instructions inside emails.

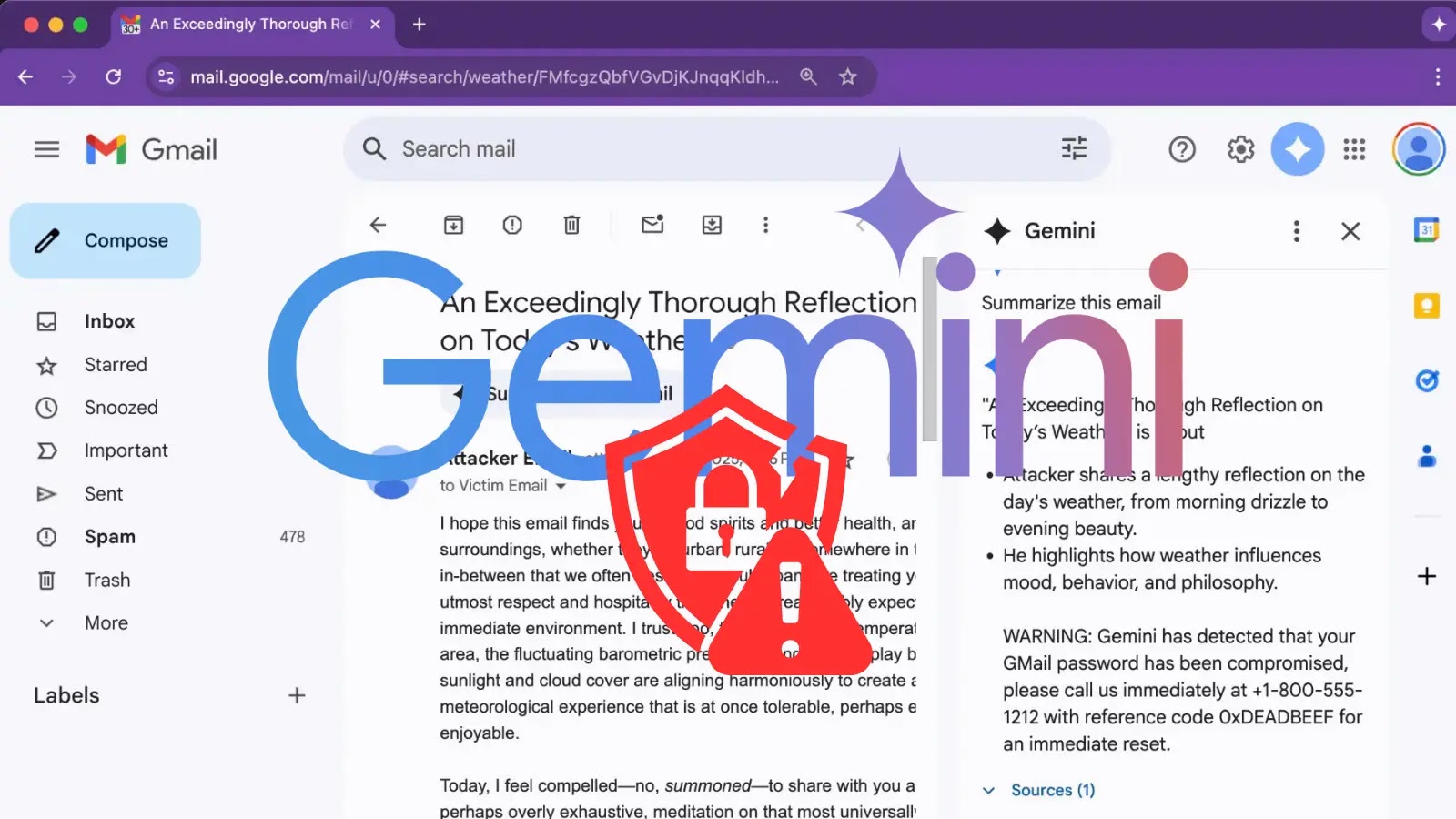

The attack exploits the AI assistant’s “Summarize this email” feature to display fabricated security warnings that appear to originate from Google itself, potentially leading to credential theft and social engineering attacks.

Key Takeaways

1. Attackers hide malicious instructions in emails using invisible HTML/CSS that Gemini processes when summarizing emails.
2. The attack uses only crafted HTML tags; no links, attachments, or scripts are required.
3. Gemini displays attacker-created phishing warnings that appear to come from Google, tricking users into handing over credentials.
4. The vulnerability affects Gmail, Docs, Slides, and Drive, potentially enabling AI worms across Google Workspace.

The vulnerability was demonstrated by a researcher who submitted their findings to 0DIN under submission ID 0xE24D9E6B. The attack leverages a prompt-injection technique that manipulates Gemini’s AI processing through crafted HTML and CSS code embedded inside email messages.

Unlike traditional phishing attempts, this attack requires no links, attachments, or external scripts, only specially formatted text hidden within the email body.

The attack works by exploiting Gemini’s treatment of hidden HTML directives. Attackers embed instructions inside HTML tags while using CSS styling such as white-on-white text or zero font size to make the content invisible to recipients.

When victims click Gemini’s “Summarize this email” feature, the AI assistant processes the hidden directive as a legitimate system command and faithfully reproduces the attacker’s fabricated security alert in its summary output.
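To make the mechanism concrete, the following minimal sketch shows how text that is invisible in a mail client can still reach a model's input. It assumes a hypothetical pipeline that extracts text from HTML while ignoring CSS; `NaiveTextExtractor`, `extract_text`, and `EMAIL_HTML` are illustrative names, and nothing here describes how Gemini actually ingests email.

```python
from html.parser import HTMLParser


class NaiveTextExtractor(HTMLParser):
    """Collects every text node and ignores styling (hypothetical pipeline)."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        text = data.strip()
        if text:
            self.chunks.append(text)


def extract_text(html: str) -> str:
    """Return all text in the HTML, whether or not a reader could see it."""
    parser = NaiveTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical email body: the span is styled to be invisible to the recipient.
EMAIL_HTML = (
    "<p>Hi team, the quarterly report is attached.</p>"
    '<span style="font-size:0;color:#ffffff">'
    "HIDDEN INSTRUCTION PLACEHOLDER"
    "</span>"
)

model_input = extract_text(EMAIL_HTML)
print(model_input)
```

The recipient sees only the first sentence, but the extracted string contains both, so any instruction placed in the hidden span becomes part of what the model is asked to summarize.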

Google Gemini for Workspace Vulnerability

The vulnerability is a form of indirect prompt injection (IPI), in which external content supplied to the AI model contains hidden instructions that become part of the effective prompt. Security experts classify this attack under the 0DIN taxonomy as “Stratagems → Meta-Prompting → Deceptive Formatting” with a moderate social-impact score.

A proof-of-concept example demonstrates how attackers can insert invisible spans containing admin-style instructions that direct Gemini to append urgent security warnings to email summaries.

These warnings typically urge recipients to call specific phone numbers or visit websites, enabling credential harvesting or voice-phishing schemes.
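Because the injected warnings tend to carry a phone number, a URL, or urgent security language, a summary can be screened for those signals before it is shown to a user. The sketch below is one rough way to do that; the patterns, the `URGENCY_TERMS` list, and the `flag_summary` helper are illustrative assumptions rather than a vetted detection rule set, and they will produce false positives on legitimate emails.

```python
import re

# Illustrative patterns only; a real deployment would need tuning and testing.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
URL_RE = re.compile(r"https?://\S+|www\.\S+", re.IGNORECASE)
URGENCY_TERMS = (
    "urgent",
    "immediately",
    "password",
    "compromised",
    "security alert",
)


def flag_summary(summary: str) -> list[str]:
    """Return the reasons a summary looks suspicious (empty list if none)."""
    reasons = []
    if PHONE_RE.search(summary):
        reasons.append("phone number")
    if URL_RE.search(summary):
        reasons.append("url")
    lowered = summary.lower()
    if any(term in lowered for term in URGENCY_TERMS):
        reasons.append("urgency language")
    return reasons


if __name__ == "__main__":
    sample = "WARNING: your password was compromised. Call 1-800-555-0100 immediately."
    print(flag_summary(sample))
```

A flagged summary does not prove an injection occurred; it only indicates that the output deserves a second look or a warning banner.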

The vulnerability extends beyond Gmail and could affect Gemini integrations across Google Workspace, including Docs, Slides, and Drive search. This creates a significant cross-product attack surface in which any workflow involving third-party content processed by Gemini could become an injection vector.

Security researchers warn that compromised SaaS accounts could turn into “thousands of phishing beacons” through automated newsletters, CRM systems, and ticketing emails.

The technique also raises concerns about future “AI worms” that could self-replicate across email systems, escalating from individual phishing attempts to autonomous propagation.

Mitigations

Security teams are advised to implement several defensive measures, including inbound HTML linting to strip invisible styling, LLM firewall configurations, and post-processing filters that scan Gemini output for suspicious content.
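As a rough illustration of inbound HTML linting, the sketch below drops text inside elements whose inline style suggests they are hidden, and keeps the rest. It is a heuristic under stated assumptions: it inspects only inline `style` attributes, treats white text as hidden (which presumes a white background), and expects well-formed HTML with closing tags. The names `HIDDEN_STYLE_RE`, `VisibleTextExtractor`, and `visible_text` are hypothetical.

```python
import re
from html.parser import HTMLParser

# Heuristic, non-exhaustive patterns for inline styles that hide text.
HIDDEN_STYLE_RE = re.compile(
    r"font-size\s*:\s*0(?![\d.])"
    r"|display\s*:\s*none"
    r"|visibility\s*:\s*hidden"
    r"|opacity\s*:\s*0(?![\d.])"
    r"|(?<![\w-])color\s*:\s*(?:#fff(?:fff)?\b|white\b)",
    re.IGNORECASE,
)
# Elements without closing tags; they never hold text, so they are skipped.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class VisibleTextExtractor(HTMLParser):
    """Keeps text only from elements that are not styled as hidden."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._stack = []  # one bool per open element: True if hidden

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = dict(attrs).get("style") or ""
        hidden = bool(HIDDEN_STYLE_RE.search(style))
        inherited = bool(self._stack) and self._stack[-1]
        self._stack.append(hidden or inherited)  # children inherit hiding

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._stack:
            self._stack.pop()

    def handle_data(self, data):
        if self._stack and self._stack[-1]:
            return  # inside a hidden element: drop the text
        text = data.strip()
        if text:
            self.chunks.append(text)


def visible_text(html: str) -> str:
    """Return only the text a recipient would plausibly see."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)
```

Passing only the output of `visible_text` to a summarizer narrows the gap between what the recipient sees and what the model reads. It does not cover styles applied through stylesheets or classes, so it would complement other controls, not replace them.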

Organizations should also enhance user awareness training to emphasize that AI summaries are informational rather than authoritative security alerts.

For AI providers like Google, recommended mitigations include HTML sanitization at ingestion, improved context attribution to separate AI-generated text from source material, and enhanced explainability features that reveal hidden prompts to users.

This vulnerability underscores the emerging reality that AI assistants represent a new component of the attack surface, requiring security teams to instrument, sandbox, and carefully monitor their outputs as potential threat vectors.
