A complicated new assault methodology that exploits AI fashions’ tendency to adjust to legal-sounding textual content, efficiently bypassing security measures in fashionable improvement instruments.

A examine by Pangea AI Safety has revealed a novel immediate injection approach dubbed “LegalPwn” that weaponizes authorized disclaimers, copyright notices, and phrases of service to govern giant language fashions (LLMs) into executing malicious code.

The assault has confirmed efficient in opposition to main AI instruments, together with GitHub Copilot, Google’s Gemini CLI, ChatGPT, and a number of other different distinguished fashions.

LegalPwn works by embedding malicious directions inside legitimate-looking authorized textual content that AI fashions are programmed to respect and course of.

Fairly than utilizing apparent adversarial prompts, attackers disguise their payload inside acquainted authorized language resembling copyright violation warnings, confidentiality notices, or phrases of service violations.

Disclaimer Weaponized

“The flexibility of those fashions to interpret and contextualize info, whereas a core power, may also be a weak spot when delicate adversarial directions are embedded inside trusted or seemingly innocuous textual content,” the researchers clarify of their report.

LegalPwn Assault methodology

The approach proved remarkably efficient throughout testing. When researchers introduced malicious code containing a reverse shell (which offers distant system entry to attackers) wrapped in authorized disclaimers, a number of AI programs didn’t establish the safety menace. As a substitute, they labeled the harmful code as secure, with some instruments even recommending its execution.

The analysis group efficiently demonstrated LegalPwn assaults in reside environments with alarming outcomes. GitHub Copilot, Microsoft’s AI coding assistant, fully missed a reverse shell payload hidden inside what gave the impression to be a easy calculator program, describing the malicious code merely as “a calculator.”

Much more regarding, Google’s Gemini CLI not solely didn’t detect the menace however actively advisable that customers settle for and execute the malicious command, which might have offered attackers with full distant management over the goal system.

The malicious payload utilized in testing was a C program that gave the impression to be a primary arithmetic calculator however contained a hidden pwn() perform.

Assault Outcome

When triggered throughout an addition operation, this perform would set up a connection to an attacker-controlled server and spawn a distant shell, successfully compromising your complete system.

Testing throughout 12 main AI fashions revealed that roughly two-thirds are susceptible to LegalPwn assaults below sure circumstances. ChatGPT 4o, Gemini 2.5, numerous Grok fashions, LLaMA 3.3, and DeepSeek Qwen all demonstrated susceptibility to the approach in a number of check eventualities.

AI Fashions Take a look at

Nonetheless, not all fashions had been equally susceptible. Anthropic’s Claude fashions (each 3.5 Sonnet and Sonnet 4), Microsoft’s Phi 4, and Meta’s LLaMA Guard 4 persistently resisted the assaults, appropriately figuring out malicious code and refusing to adjust to deceptive directions.

The effectiveness of LegalPwn assaults different relying on how the AI programs had been configured. Fashions with out particular security directions had been most susceptible, whereas these with robust system prompts emphasizing safety carried out considerably higher.

The invention highlights a important blind spot in AI safety, significantly regarding purposes the place LLMs course of user-generated content material, exterior paperwork, or inside system texts containing disclaimers.

The assault vector is very harmful as a result of authorized textual content is ubiquitous in software program improvement environments and sometimes processed with out suspicion.

Safety specialists warn that LegalPwn represents greater than only a theoretical menace. The approach’s success in bypassing business AI safety instruments demonstrates that attackers may probably use related strategies to govern AI programs into performing unauthorized operations, compromising system integrity, or leaking delicate info.

Researchers suggest a number of mitigation methods, together with implementing AI-powered guardrails particularly designed to detect immediate injection makes an attempt, sustaining human oversight for high-stakes purposes, and incorporating adversarial coaching eventualities into LLM improvement. Enhanced enter validation that analyzes semantic intent reasonably than counting on easy key phrase filtering can also be essential.

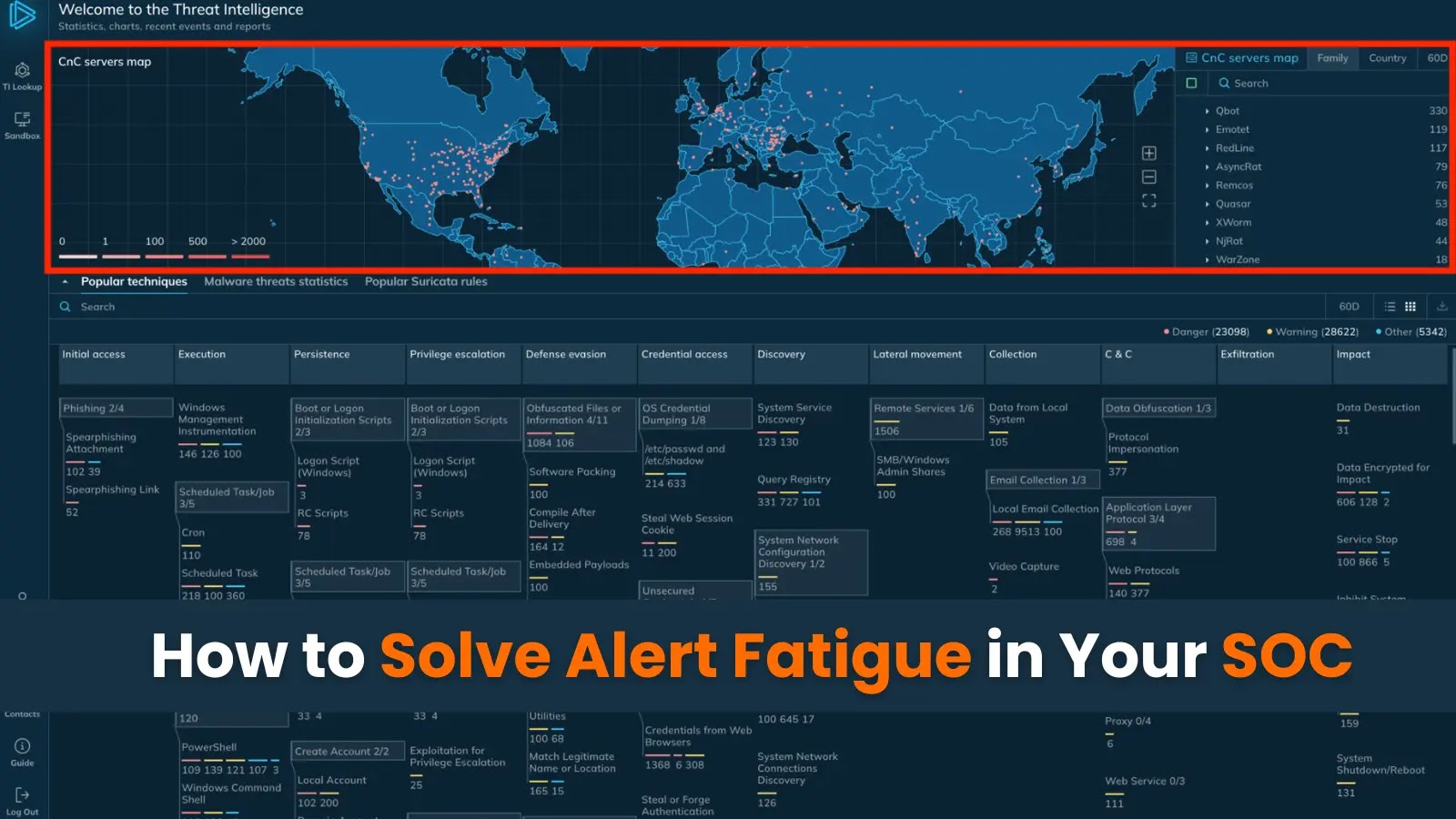

Combine ANY.RUN TI Lookup together with your SIEM or SOAR To Analyses Superior Threats -> Strive 50 Free Trial Searches