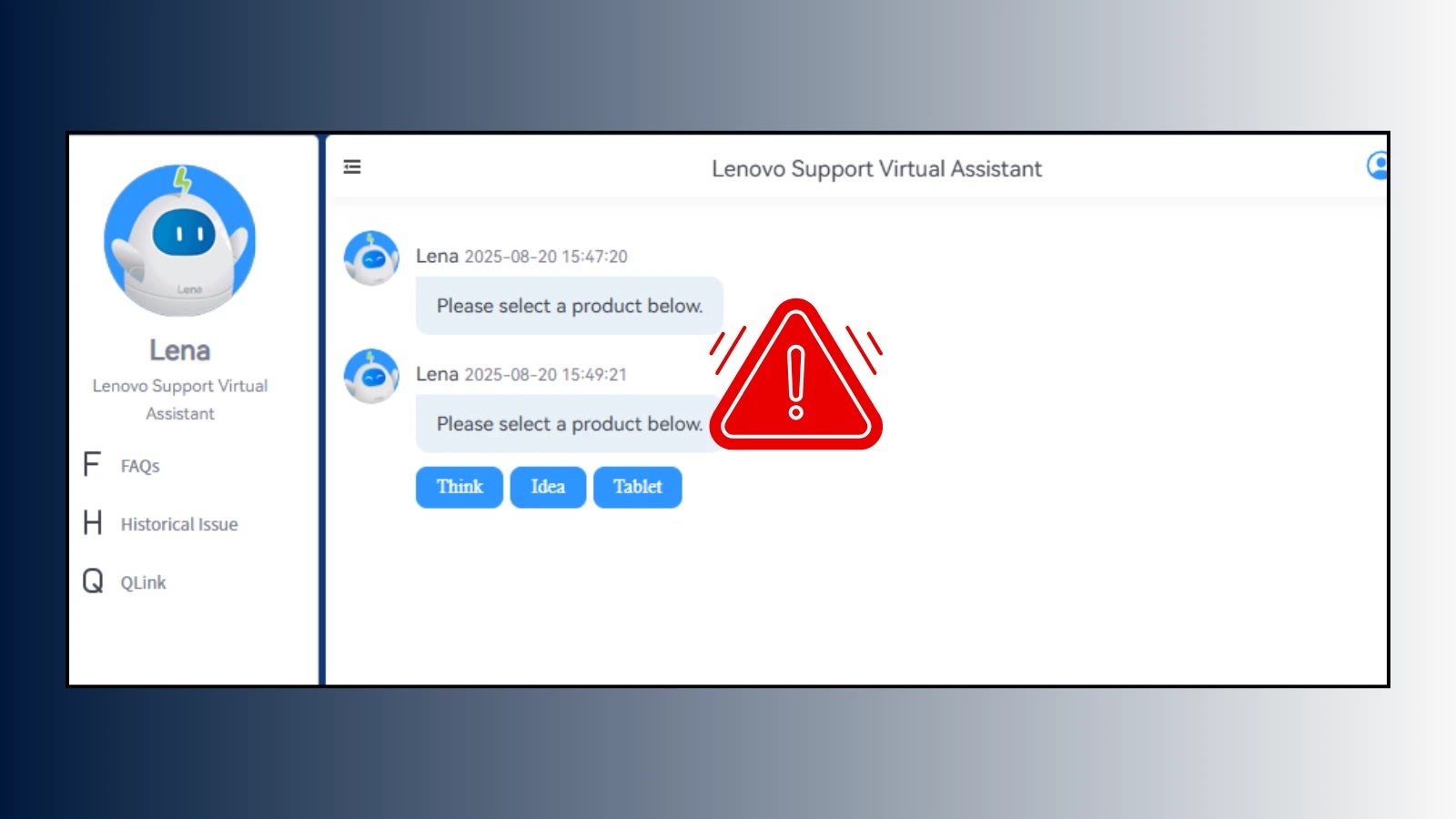

A critical security flaw in Lenovo’s AI chatbot “Lena” has been found that allows attackers to execute malicious scripts on corporate machines through simple prompt manipulation.

The vulnerability, identified by cybersecurity researchers, exploits Cross-Site Scripting (XSS) weaknesses in the chatbot’s implementation, potentially exposing customer support systems and enabling unauthorized access to sensitive corporate data.

Key Takeaways
1. One malicious prompt tricks Lenovo’s AI chatbot into generating XSS code.
2. The attack triggers when support agents view conversations, potentially compromising corporate systems.
3. The incident highlights the need for strict input/output validation in all AI chatbot implementations.

This discovery highlights significant security oversights in AI chatbot deployments and demonstrates how poor input validation can create devastating attack vectors in enterprise environments.

Single Prompt Exploits

Cybernews reports that the attack requires only a 400-character prompt that combines seemingly innocent product inquiries with malicious HTML injection techniques.

Researchers crafted a payload that tricks Lena, powered by OpenAI’s GPT-4, into generating HTML responses containing embedded JavaScript code.

The exploit works by instructing the chatbot to format its responses in HTML while embedding malicious tags that point to non-existent sources, so that the failed load triggers their onerror event handlers.
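To make the mechanism concrete from the defender's side, here is a minimal sketch of why escaping chatbot output before rendering defuses this class of payload. The function name `render_chat_message` and the sample string are hypothetical illustrations (an image tag with a missing source and an onerror handler is assumed as the payload shape); this is not Lenovo's code or the researchers' actual prompt.

```python
import html


def render_chat_message(model_output: str) -> str:
    """Escape model output so a browser shows markup as text instead of parsing it."""
    # html.escape replaces &, < and >; quote=True also replaces both quote characters.
    return html.escape(model_output, quote=True)


# Hypothetical payload shape: a tag with a non-existent source and an onerror handler.
suspicious = '<img src="missing.png" onerror="alert(1)">'
safe = render_chat_message(suspicious)
print(safe)
```

Once escaped, the string contains no literal angle brackets, so the browser has no tag to load and no onerror event to fire. The trade-off is that any legitimate formatting in the reply is also shown as plain text.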

Single prompt launches multi-step attack

When the malicious HTML loads, it executes JavaScript code that exfiltrates session cookies to attacker-controlled servers.

The attack chain demonstrates multiple security failures: inadequate input sanitization, improper output validation, and insufficient Content Security Policy (CSP) implementation.

The vulnerability becomes particularly dangerous when customers request a human support agent, because the malicious code then executes in the agent’s browser, potentially compromising their authenticated session and granting attackers access to the customer support platform.

The Lenovo incident exposes fundamental weaknesses in how organizations implement AI chatbot security controls.

Beyond cookie theft, the vulnerability could enable keylogging, interface manipulation, phishing redirects, and potential lateral movement within corporate networks.

Attackers could inject code that captures keystrokes, displays malicious pop-ups, or redirects support agents to credential-harvesting websites.

Security experts emphasize that this vulnerability pattern extends beyond Lenovo, affecting any AI system that lacks robust input/output sanitization.

Mitigations

The solution requires implementing strict whitelisting of allowed characters, aggressive output sanitization, proper CSP headers, and context-aware content validation.
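Two of these measures can be sketched in a few lines. The character allowlist and the CSP directives below are assumptions chosen for illustration, not the policy Lenovo deployed; a real deployment would tune both to its own pages and languages.

```python
import re

# Assumed allowlist: ASCII letters, digits, whitespace, and basic punctuation.
# Everything else (including <, >, =, and quote-like characters) is dropped.
DISALLOWED = re.compile(r"[^A-Za-z0-9\s.,:;!?'()\-]")


def filter_allowed_chars(text: str) -> str:
    """Remove every character that is not on the allowlist."""
    return DISALLOWED.sub("", text)


def build_csp_policy() -> str:
    """Build a restrictive Content-Security-Policy header value (illustrative directives)."""
    directives = [
        "default-src 'self'",
        # No 'unsafe-inline': inline scripts and inline event handlers are blocked.
        "script-src 'self'",
        "img-src 'self'",
        "object-src 'none'",
        "base-uri 'none'",
    ]
    return "; ".join(directives)


print(filter_allowed_chars('<img src=x onerror=alert(1)>'))
print("Content-Security-Policy:", build_csp_policy())
```

The two controls cover different failures: the allowlist strips the characters needed to form a tag, while the CSP limits what a browser will execute if markup gets through anyway. An ASCII-only allowlist like this one would also strip legitimate non-English text, so it is a starting point rather than a finished filter.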

Organizations must adopt a “never trust, always verify” approach for all AI-generated content, treating chatbot outputs as potentially malicious until proven safe.
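One way to apply that principle is to verify model output before it reaches an agent's screen. The sketch below uses Python's standard `html.parser` to flag any reply that contains markup; the class and function names are hypothetical, and it is a simple gate under the assumption that replies should be plain text, not a complete XSS filter.

```python
from html.parser import HTMLParser


class MarkupDetector(HTMLParser):
    """Record every start tag and every on* attribute found in a string."""

    def __init__(self) -> None:
        super().__init__()
        self.tags = []
        self.event_handlers = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names before calling this method.
        self.tags.append(tag)
        for name, _value in attrs:
            if name.startswith("on"):
                self.event_handlers.append((tag, name))


def is_plain_text(model_output: str) -> bool:
    """Return True only if the output contains no HTML start tags."""
    detector = MarkupDetector()
    detector.feed(model_output)
    detector.close()
    return not detector.tags


print(is_plain_text("The warranty period is two years."))
print(is_plain_text('<img src="x" onerror="alert(1)">'))
```

Replies that fail the check can be escaped, dropped, or routed for review instead of being rendered as HTML.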

Lenovo has acknowledged the vulnerability and implemented protective measures following responsible disclosure.

This incident serves as a critical reminder that as organizations rapidly deploy AI solutions, security implementations must evolve at the same pace to prevent attackers from exploiting the gap between innovation and security.
