Sep 17, 2025 | The Hacker News | AI Security / Shadow IT

Generative AI has gone from a curiosity to a cornerstone of enterprise productivity in just a few short years. From copilots embedded in office suites to dedicated large language model (LLM) platforms, employees now rely on these tools to code, analyze, draft, and decide. But for CISOs and security architects, the very speed of adoption has created a paradox: the more powerful the tools, the more porous the enterprise boundary becomes.

And here's the counterintuitive part: the biggest risk isn't that employees are careless with prompts. It's that organizations are applying the wrong mental model when evaluating solutions, trying to retrofit legacy controls for a risk surface they were never designed to cover. A new guide tries to bridge that gap.

The Hidden Challenge in Today’s Vendor Landscape

The AI data security market is already crowded. Every vendor, from traditional DLP to next-gen SSE platforms, is rebranding around “AI security.” On paper, this seems to offer clarity. In practice, it muddies the waters.

The truth is that most legacy architectures, designed for file transfers, email, or network gateways, cannot meaningfully inspect or control what happens when a user pastes sensitive code into a chatbot or uploads a dataset to a personal AI tool. Evaluating solutions through the lens of yesterday’s risks is what leads many organizations to buy shelfware.

This is why the buyer’s journey for AI data security needs to be reframed. Instead of asking “Which vendor has the most features?” the real question is: Which vendor understands how AI is actually used at the last mile, inside the browser and across sanctioned and unsanctioned tools?

The Buyer’s Journey: A Counterintuitive Path

Most procurement processes start with visibility. But in AI data security, visibility is not the finish line; it's the starting point. Discovery will show you the proliferation of AI tools across departments, but the real differentiator is how a solution interprets and enforces policies in real time, without throttling productivity.

The buyer’s journey typically follows four stages:

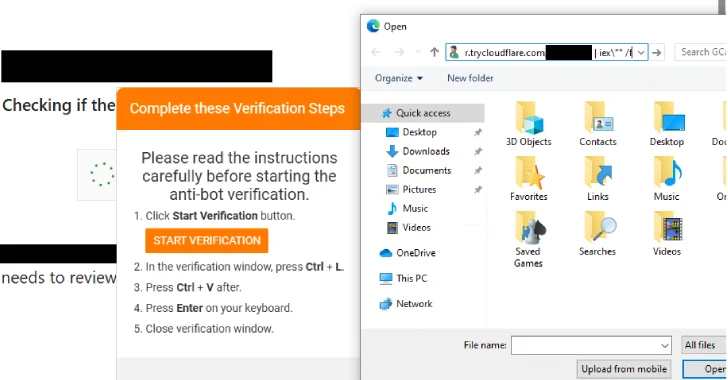

Discovery – Identify which AI tools are in use, sanctioned or shadow. Conventional wisdom says this is enough to scope the problem. In reality, discovery without context leads to overestimation of risk and blunt responses (like outright bans).

Real-Time Monitoring – Understand how these tools are being used and what data flows through them. The surprising insight? Not all AI usage is risky. Without monitoring, you can’t separate harmless drafting from the inadvertent leak of source code.

Enforcement – This is where many buyers default to binary thinking: allow or block. The counterintuitive truth is that the most effective enforcement lives in the gray area: redaction, just-in-time warnings, and conditional approvals. These not only protect data but also educate users in the moment.

Architecture Fit – Perhaps the least glamorous but most critical stage. Buyers often overlook deployment complexity, assuming security teams can bolt new agents or proxies onto existing stacks. In practice, solutions that demand infrastructure change are the ones most likely to stall or get bypassed.
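To make the point about discovery needing context more concrete, here is a minimal Python sketch of the first two stages. It is an illustration under stated assumptions, not any vendor's implementation: the `UsageEvent` record, the `SANCTIONED_TOOLS` allow-list, the example domains, and the `has_sensitive_data` flag (assumed to come from some upstream content classifier) are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical allow-list; a real deployment would load this from policy.
SANCTIONED_TOOLS = {"copilot.example.com"}


@dataclass(frozen=True)
class UsageEvent:
    user: str
    tool_domain: str
    has_sensitive_data: bool  # assumed output of an upstream classifier


def summarize_usage(events):
    """Group events by tool and attach the context that raw discovery lacks."""
    grouped = defaultdict(lambda: {"events": 0, "sensitive": 0, "users": set()})
    for event in events:
        entry = grouped[event.tool_domain]
        entry["events"] += 1
        entry["sensitive"] += int(event.has_sensitive_data)
        entry["users"].add(event.user)

    report = {}
    for domain, entry in grouped.items():
        report[domain] = {
            "status": "sanctioned" if domain in SANCTIONED_TOOLS else "shadow",
            "events": entry["events"],
            "sensitive_events": entry["sensitive"],
            "distinct_users": len(entry["users"]),
        }
    return report


if __name__ == "__main__":
    sample = [
        UsageEvent("ana", "copilot.example.com", False),
        UsageEvent("ben", "chat.example.net", False),
        UsageEvent("ben", "chat.example.net", True),
    ]
    for domain, row in summarize_usage(sample).items():
        print(domain, row)
```

A report like this separates a shadow tool used only for harmless drafting from one that is receiving sensitive data, which is the distinction a bare inventory of tools cannot make.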

What Experienced Buyers Should Really Ask

Security leaders know the standard checklist: compliance coverage, identity integration, reporting dashboards. But in AI data security, some of the most important questions are the least obvious:

Does the solution work without relying on endpoint agents or network rerouting?

Can it enforce policies in unmanaged or BYOD environments, where much shadow AI lives?

Does it offer more than “block” as a control? That is, can it redact sensitive strings or warn users contextually?

How adaptable is it to new AI tools that haven't yet been released?

These questions cut against the grain of traditional vendor evaluation but reflect the operational reality of AI adoption.

Balancing Security and Productivity: The False Binary

One of the most persistent myths is that CISOs must choose between enabling AI innovation and protecting sensitive data. Blocking tools like ChatGPT may satisfy a compliance checklist, but it drives employees to personal devices, where no controls exist. In effect, bans create the very shadow AI problem they were meant to solve.

The more sustainable approach is nuanced enforcement: permitting AI usage in sanctioned contexts while intercepting risky behaviors in real time. In this way, security becomes an enabler of productivity, not its adversary.
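As a rough sketch of what enforcement beyond allow-or-block can look like, the Python example below maps a prompt to one of four graded actions. Everything in it is an assumption made for illustration: the `Action` names, the `enforce` function, the user-facing messages, and especially the two detection patterns, which are far too simple for production use.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    REDACT = "redact"
    BLOCK = "block"


# Illustrative patterns only; real detectors would be much broader.
SECRET_PATTERN = re.compile(
    r"\b(?:api[_-]?key|token|password)\s*[:=]\s*\S+", re.IGNORECASE
)
PRIVATE_KEY_MARKER = "-----BEGIN PRIVATE KEY-----"


@dataclass
class Decision:
    action: Action
    text: str      # what is forwarded to the AI tool ("" when blocked)
    message: str   # just-in-time note shown to the user


def enforce(prompt: str, tool_is_sanctioned: bool) -> Decision:
    """Pick the least disruptive action that still protects the data."""
    if PRIVATE_KEY_MARKER in prompt:
        return Decision(Action.BLOCK, "", "Private keys may not be shared with AI tools.")
    if SECRET_PATTERN.search(prompt):
        redacted = SECRET_PATTERN.sub("[REDACTED]", prompt)
        return Decision(Action.REDACT, redacted, "Credentials were removed before sending.")
    if not tool_is_sanctioned:
        return Decision(Action.WARN, prompt, "This tool is not sanctioned; avoid sharing company data.")
    return Decision(Action.ALLOW, prompt, "")


if __name__ == "__main__":
    decision = enforce("Debug this: password=hunter2 fails", tool_is_sanctioned=True)
    print(decision.action.value, "|", decision.text)
```

The ordering is the design choice worth noting: blocking is reserved for the narrowest case, while redaction and warnings let the user keep working and learn the policy in the moment.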

Technical vs. Non-Technical Considerations

While technical fit is paramount, non-technical factors often decide whether an AI data security solution succeeds or fails:

Operational Overhead – Can it be deployed in hours, or does it require weeks of endpoint configuration?

User Experience – Are controls transparent and minimally disruptive, or do they generate workarounds?

Futureproofing – Does the vendor have a roadmap for adapting to emerging AI tools and compliance regimes, or are you buying a static product in a dynamic field?

These considerations are less about “checklists” and more about sustainability: ensuring the solution can scale with both organizational adoption and the broader AI landscape.

The Bottom Line

Security teams evaluating AI data security solutions face a paradox: the space appears crowded, but true fit-for-purpose options are rare. The buyer’s journey requires more than a feature comparison; it demands rethinking assumptions about visibility, enforcement, and architecture.

The counterintuitive lesson? The best AI security investments aren't the ones that promise to block everything. They're the ones that enable your enterprise to harness AI safely, striking a balance between innovation and control.

This Buyer’s Guide to AI Data Security distills this complex landscape into a clear, step-by-step framework. The guide is designed for both technical and economic buyers, walking them through the full journey: from recognizing the unique risks of generative AI to evaluating solutions across discovery, monitoring, enforcement, and deployment. By breaking down the trade-offs, exposing counterintuitive considerations, and providing a practical evaluation checklist, the guide helps security leaders cut through vendor noise and make informed decisions that balance innovation with control.

This article is a contributed piece from one of our valued partners.