Generative artificial intelligence (GenAI) has emerged as a transformative force across industries, enabling breakthroughs in content creation, data analysis, and decision-making.

However, its rapid adoption has exposed critical vulnerabilities, with data leakage emerging as the most pressing security challenge.

Recent incidents, including the alleged OmniGPT breach impacting 34 million user interactions and Check Point’s finding that 1 in 13 GenAI prompts contain sensitive data, underscore the urgent need for robust mitigation strategies.

This article examines the evolving threat landscape and analyzes the technical safeguards, organizational policies, and regulatory considerations shaping the future of secure GenAI deployment.

The Expanding Attack Surface of Generative AI Systems

Modern GenAI platforms face multifaceted data leakage risks stemming from their architectural complexity and dependence on vast training datasets.

Large language models (LLMs) like ChatGPT exhibit “memorization” tendencies: they can reproduce verbatim excerpts from training data containing personally identifiable information (PII) or intellectual property.

A 2024 Netskope study revealed that 46% of GenAI data policy violations involved proprietary source code shared with public models. LayerX research found that 6% of employees regularly paste sensitive data into GenAI tools.

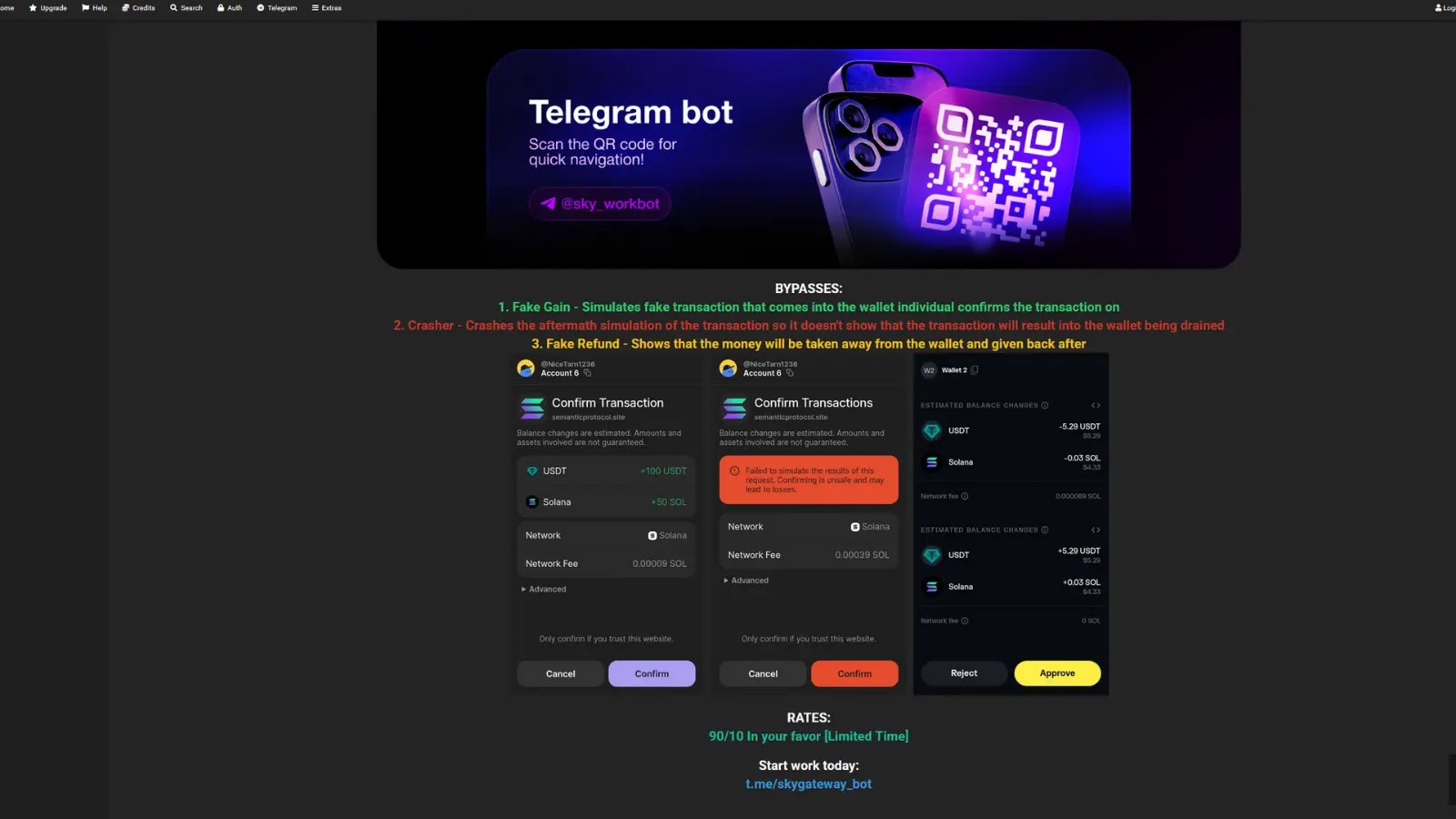

Integrating GenAI into enterprise workflows compounds these risks through prompt injection attacks, where malicious actors manipulate models into revealing training data through carefully crafted inputs.

Cross-border data flows introduce additional compliance challenges. Gartner predicts that 40% of AI-related breaches by 2027 will stem from improper cross-border GenAI use.

Technical Safeguards – From Differential Privacy to Secure Computation

Leading organizations are adopting mathematical privacy frameworks to harden GenAI systems. Differential privacy (DP) has emerged as a gold standard, injecting calibrated noise into the training process to prevent model memorization of individual records.

Microsoft’s implementation in text generation models demonstrates that DP can maintain 98% utility while reducing PII leakage risks by 83%.
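To make the "calibrated noise" idea concrete, here is a minimal sketch of the clip-and-noise step used in DP-SGD-style training. It is an illustration only, not Microsoft's implementation: the function names, `max_norm`, and `noise_multiplier` are assumptions introduced for this example, and a real deployment would also need a privacy accountant to track the resulting privacy budget.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_average_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average.

    Clipping bounds how much any single record can influence the update;
    the noise (std = noise_multiplier * max_norm) masks what remains.
    """
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(per_example_grads) for v in noisy]


# Hypothetical per-example gradients for a two-parameter model.
grads = [[3.0, 4.0], [0.3, 0.4], [-1.0, 2.0]]
update = dp_average_gradient(grads, max_norm=1.0, noise_multiplier=1.1,
                             rng=random.Random(0))
```

A larger `noise_multiplier` gives stronger privacy at the cost of noisier updates, which is the utility trade-off the figures above refer to.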

Federated learning architectures provide complementary protection by decentralizing model training. As implemented in the healthcare and financial sectors, this approach allows collaborative learning across institutions without sharing raw patient or transaction data.
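The core mechanic can be sketched with federated averaging on a toy model. In this hedged example each "institution" fits a one-parameter model `y = w * x` on its own data, and only the resulting weight leaves the client. The function names, learning rate, and datasets are made up for illustration.

```python
def local_update(weights, data, lr=0.05):
    """Run one pass of SGD for the model y = w * x on one client's private data."""
    (w,) = weights
    for x, y in data:
        w -= lr * (w * x - y) * x
    return [w]


def federated_average(client_weights, client_sizes):
    """Average client weights, weighting each client by its number of samples."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def train(clients, rounds=50):
    """Coordinate training: the server only ever sees weights, never raw records."""
    global_weights = [0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


# Two hypothetical institutions whose private data both follow y = 2x.
hospital_a = [(1.0, 2.0), (2.0, 4.0)]
hospital_b = [(3.0, 6.0)]
model = train([hospital_a, hospital_b])
```

Shared weights can still leak information about the underlying data, which is why federated setups are often combined with differential privacy or secure aggregation.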

NTT Data’s trials show that federated systems reduce data exposure surfaces by 72% compared to centralized alternatives. For high-stakes applications, secure multi-party computation (SMPC) offers military-grade security.

This cryptographic technique, exemplified in a decentralized GenAI framework described in an arXiv preprint, splits models across nodes so that no single party can access the complete data or algorithms.

Early adopters report 5-10% accuracy improvements over traditional models while eliminating centralized breach risks.
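One building block behind SMPC is additive secret sharing, sketched below under simplifying assumptions. This is not the framework referenced above: the field modulus `PRIME` and the function names are chosen for this example, and the parties are assumed to follow the protocol honestly. Each private value is split into random-looking shares, the parties add the shares they hold, and only the combined total is ever reconstructed.

```python
import secrets

PRIME = 2**61 - 1  # field modulus (a Mersenne prime); an arbitrary choice here


def share(secret, n_parties):
    """Split an integer into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % PRIME
    return shares + [last]


def reconstruct(shares):
    """Recombine shares; any strict subset reveals nothing about the secret."""
    return sum(shares) % PRIME


def secure_sum(private_values, n_parties):
    """Compute the sum of private values without any party seeing an input."""
    # Each owner splits its value; party j receives the j-th share of every value.
    all_shares = [share(v, n_parties) for v in private_values]
    # Each party adds up the shares it holds and publishes only that partial sum.
    partial_sums = [
        sum(s[j] for s in all_shares) % PRIME for j in range(n_parties)
    ]
    return reconstruct(partial_sums)


total = secure_sum([10, 20, 30], n_parties=3)
```

Model weights or gradients can be handled the same way once they are encoded as integers, although multiplication and non-linear operations require considerably more elaborate protocols than this addition-only sketch.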

Organizational Strategies – Balancing Innovation and Risk Management

Progressive enterprises are moving beyond blanket GenAI bans to implement nuanced governance frameworks. Samsung’s post-leak response illustrates this shift: rather than prohibiting ChatGPT, the company deployed real-time monitoring tools that redact 92% of sensitive inputs before processing.

Three pillars define modern GenAI security programs:

Data sanitization pipelines that leverage AI-powered anonymization to scrub 98.7% of PII from training corpora

Cross-functional review boards, which reduced improper data sharing by 64% at Fortune 500 companies

Continuous model auditing systems that detect 89% of potential leakage vectors pre-deployment
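As a rough illustration of the sanitization and redaction idea, the sketch below masks a few sensitive patterns before text reaches a model or a training corpus. It is far simpler than the AI-powered tools described above. The pattern set is an assumption made for this example (the `sk-` API key format in particular is hypothetical), and regular expressions alone will miss many kinds of PII.

```python
import re

# Illustrative patterns only; production systems combine many more detectors.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),  # hypothetical key format
}


def redact(text):
    """Replace each match with a placeholder and count the hits per category."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, counts[label] = pattern.subn(f"[{label}]", text)
    return text, counts


prompt = "Contact jane.doe@example.com, SSN 123-45-6789"
clean_prompt, hits = redact(prompt)
# clean_prompt is "Contact [EMAIL], SSN [US_SSN]"
```

Returning the per-category counts alongside the cleaned text makes it possible to log what was caught without storing the sensitive values themselves.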

The cybersecurity arms race has spurred $2.9 billion in venture funding for GenAI-specific defense tools since 2023.

SentinelOne’s AI Guardian platform typifies this innovation. It uses reinforcement learning to block 94% of prompt injection attempts while maintaining sub-200ms latency.

Regulatory Landscape and Future Directions

Global policymakers are scrambling to address GenAI risks through evolving frameworks. The EU’s AI Act mandates DP implementation for public-facing models, while U.S. NIST guidelines require federated architectures for federal AI systems.

Emerging standards like ISO/IEC 5338 aim to certify GenAI compliance across 23 security dimensions by 2026.

Technical innovations on the horizon promise to reshape the security paradigm:

Homomorphic encryption enabling fully private model inference (IBM prototypes show 37x speed improvements)

Neuromorphic chips with built-in DP circuitry that reduce privacy overhead by 89%

Blockchain-based audit trails that provide immutable model provenance records
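The tamper-evidence property behind such audit trails can be illustrated with a plain hash chain. This is a sketch of the underlying idea rather than a blockchain: there is no distributed consensus or replication, and the record fields and function names are invented for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(record, prev_hash):
    """Hash a record together with the hash of the entry that precedes it."""
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain, record):
    """Add a provenance record, linking it to the current end of the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev_hash,
                  "hash": entry_hash(record, prev_hash)})


def verify(chain):
    """Recompute every hash; an edit to any earlier record breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        expected = entry_hash(entry["record"], prev_hash)
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


trail = []
append(trail, {"event": "dataset_registered", "dataset": "corpus-v1"})
append(trail, {"event": "model_trained", "model": "demo-model", "dataset": "corpus-v1"})
intact = verify(trail)

trail[0]["record"]["dataset"] = "corpus-v2"  # simulate tampering with history
tampered_ok = verify(trail)
```

After the simulated edit, `verify` returns `False` because the stored hash of the first entry no longer matches its contents.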

As GenAI becomes ubiquitous, its security imperative grows exponentially. Organizations adopting multilayered defense strategies that combine technical safeguards, process controls, and workforce education report 68% fewer leakage incidents than their peers.

The path forward demands continuous adaptation: as GenAI capabilities and attacker sophistication evolve, so must our defenses.

This ongoing transformation presents both a challenge and an opportunity.

Enterprises that master secure GenAI deployment stand to realize $4.4 trillion in annual productivity gains by 2030, while those neglecting data protection face existential risks. The era of AI security has truly begun, with data integrity as its defining battleground.
