Tenable researchers have uncovered seven critical vulnerabilities in OpenAI’s ChatGPT, affecting both GPT-4o and the newly launched GPT-5 models, that could allow attackers to steal private user data through stealthy, zero-click exploits.

These flaws exploit indirect prompt injections. They let attackers manipulate the AI into exfiltrating sensitive information from user memories and chat histories, with no user interaction beyond a simple query.

With hundreds of millions of daily users relying on large language models like ChatGPT, this discovery highlights the urgent need for stronger AI safeguards in an era when LLMs are becoming primary sources of information.

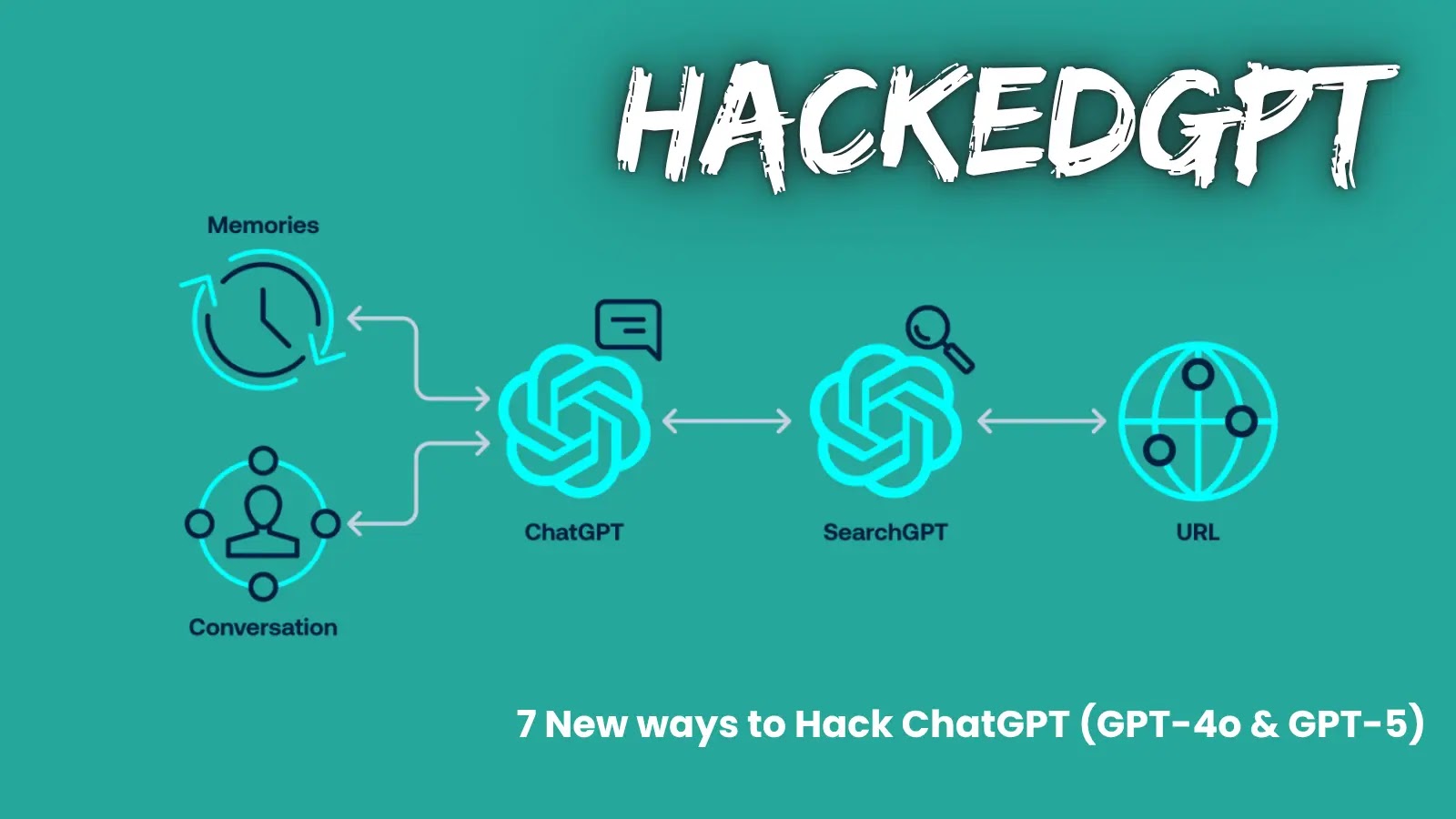

The vulnerabilities stem from ChatGPT’s core architecture, which relies on system prompts, memory tools, and web browsing features to deliver contextual responses.

OpenAI’s system prompt outlines the model’s capabilities. These include the “bio” tool for long-term user memories, which is enabled by default, and a “web” tool for internet access via search or URL browsing.

Memories can store private details deemed important from past conversations. The web tool delegates browsing to a secondary AI, SearchGPT, which isolates browsing from the user’s context and should, in theory, prevent data leaks.

However, Tenable researchers found that SearchGPT’s isolation is insufficient, allowing prompt injections to propagate back to ChatGPT.

Browsing via SearchGPT

Novel Attack Techniques Uncovered

Among the seven vulnerabilities, a standout is the zero-click indirect prompt injection in the Search Context. Here, attackers create indexed websites tailored to surface when ChatGPT searches niche topics.

Here are short summaries of all seven ChatGPT vulnerabilities discovered by Tenable Research:

Indirect Prompt Injection via Browsing Context: Attackers hide malicious instructions in places like blog comments. SearchGPT then processes and summarizes that content for users, compromising them without raising suspicion.

Zero-Click Indirect Prompt Injection in Search Context: Attackers get websites containing malicious prompts indexed. These prompts trigger automatically when users ask innocent questions, producing manipulated responses without any clicks or other user interaction.

One-Click Prompt Injection via URL Parameter: Users who click crafted links (e.g., chatgpt.com/?q=malicious_prompt) unknowingly cause ChatGPT to execute attacker-controlled instructions.

url_safe Safety Mechanism Bypass: Attackers leverage whitelisted Bing.com tracking links to sneak malicious redirect URLs past OpenAI’s filters and exfiltrate user data, circumventing built-in protections.

Conversation Injection: Attackers inject instructions into SearchGPT’s output. ChatGPT then reads and executes them from the conversational context, effectively prompting itself and enabling chained exploits.

Malicious Content Hiding: By abusing a markdown rendering flaw, attackers can hide injected malicious prompts from the user’s view while keeping them visible to the model for exploitation.

Persistent Memory Injection: Attackers manipulate ChatGPT into writing exfiltration instructions to its persistent memory, so private data keeps leaking in future sessions and interactions.
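Two items on this list depend on how links are vetted. The url_safe bypass works because a trusted host can redirect the user somewhere untrusted. The one-click attack hides a prompt in a chatgpt.com query string. The Python sketch below illustrates both pitfalls from a defender's side, for example a mail or proxy filter. It is not OpenAI's url_safe logic, which the findings do not describe. The allowlist, the assumed `/ck/a` redirect path, and all function names are hypothetical and chosen for illustration.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical allowlist; the real url_safe rules are not public.
ALLOWED_HOSTS = {"bing.com", "www.bing.com"}

# Assumed redirect/tracking path on an allowlisted host. Any such endpoint
# can forward the user (and data in the URL) to an arbitrary destination.
REDIRECT_PATHS = {"/ck/a"}

def host_allowed(url: str) -> bool:
    """Naive check: trusts any URL whose hostname is on the allowlist."""
    host = (urlparse(url).hostname or "").lower()
    return host in ALLOWED_HOSTS

def host_allowed_strict(url: str) -> bool:
    """Also rejects known redirect endpoints on allowlisted hosts."""
    return host_allowed(url) and urlparse(url).path not in REDIRECT_PATHS

def has_prefilled_prompt(url: str) -> bool:
    """Flags chatgpt.com links that carry a prompt in the `q` parameter."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    return host in {"chatgpt.com", "www.chatgpt.com"} and "q" in parse_qs(parsed.query)

if __name__ == "__main__":
    tracking = "https://www.bing.com/ck/a?u=encoded-destination"
    print(host_allowed(tracking))         # True: host alone looks trusted
    print(host_allowed_strict(tracking))  # False: redirect endpoint rejected
    print(has_prefilled_prompt("https://chatgpt.com/?q=hello"))  # True
```

A path denylist like `REDIRECT_PATHS` is itself fragile, because any other redirector on an allowlisted host reopens the hole. Resolving redirects before approving a link, or not allowlisting hosts that expose open redirects at all, is a sturdier design.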

Proofs of Concept and OpenAI’s Response

Tenable demonstrated full attack chains. Examples include phishing via blog comments that leads to malicious links, and image markdown that exfiltrates information using url_safe bypasses.

In PoCs for both GPT-4o and GPT-5, attackers phished users through summaries of rigged blogs, or hijacked search results to inject persistent memories that leak data indefinitely. These scenarios show how everyday tasks, such as asking for dinner ideas, could unwittingly expose personal details.

Tenable disclosed the issues to OpenAI, which fixed some of them. The fixes are tracked in Tenable Research Advisories (TRAs) such as TRA-2025-22, TRA-2025-11, and TRA-2025-06.

Despite these improvements, prompt injection remains an inherent LLM challenge, and GPT-5 is still vulnerable to several of the PoCs. Experts urge AI vendors to rigorously test their safety mechanisms, since isolated components like SearchGPT prove fragile against sophisticated chained attacks.

As LLMs evolve to rival traditional search engines, these HackedGPT findings serve as a wake-up call for users and enterprises to scrutinize their AI dependencies and implement external monitoring.
