A researcher has uncovered a Server-Side Request Forgery (SSRF) vulnerability in OpenAI’s ChatGPT. The flaw, lurking within the Custom GPT “Actions” feature, allowed attackers to trick the system into accessing internal cloud metadata, potentially exposing sensitive Azure credentials.

The bug, found by Open Security during casual experimentation, highlights the risks of user-controlled URL handling in AI tools.

SSRF vulnerabilities occur when applications blindly fetch resources from user-supplied URLs, enabling attackers to coerce servers into querying unintended destinations. This can bypass firewalls, probe internal networks, or extract data from privileged services.
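One common mitigation for the pattern described above is to resolve the destination host and refuse anything that lands on a private, loopback, or link-local address. The following is a minimal sketch of that idea, not a description of how OpenAI handles URLs; the function names and the injectable `resolver` parameter are assumptions made for illustration.

```python
import ipaddress
import socket
from urllib.parse import urlsplit


def resolve_host(host):
    """Return every IP address the host name resolves to."""
    return [info[4][0] for info in socket.getaddrinfo(host, None)]


def is_forbidden_ip(text):
    """True for addresses a server-side fetcher should never reach."""
    try:
        ip = ipaddress.ip_address(text)
    except ValueError:
        return True  # unparseable address: refuse rather than guess
    return (
        ip.is_private
        or ip.is_loopback
        or ip.is_link_local  # covers 169.254.0.0/16, home of cloud metadata services
        or ip.is_reserved
        or ip.is_multicast
        or ip.is_unspecified
    )


def is_safe_destination(url, resolver=resolve_host):
    """Allow only http(s) URLs whose host resolves exclusively to public IPs."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        return False
    try:
        addresses = resolver(parts.hostname)
    except OSError:
        return False  # resolution failed: treat as unsafe
    return bool(addresses) and not any(is_forbidden_ip(a) for a in addresses)
```

A check like this only helps if the fetcher then connects to the address that was validated; otherwise a host name that resolves differently on the second lookup can slip past it.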

As cloud adoption grows, SSRF’s dangers amplify: major providers like AWS, Azure, and Google Cloud expose metadata endpoints, such as Azure’s, which include instance details and API tokens.

The Open Web Application Security Project (OWASP) added SSRF to its Top 10 list in 2021, underscoring its prevalence in modern apps.

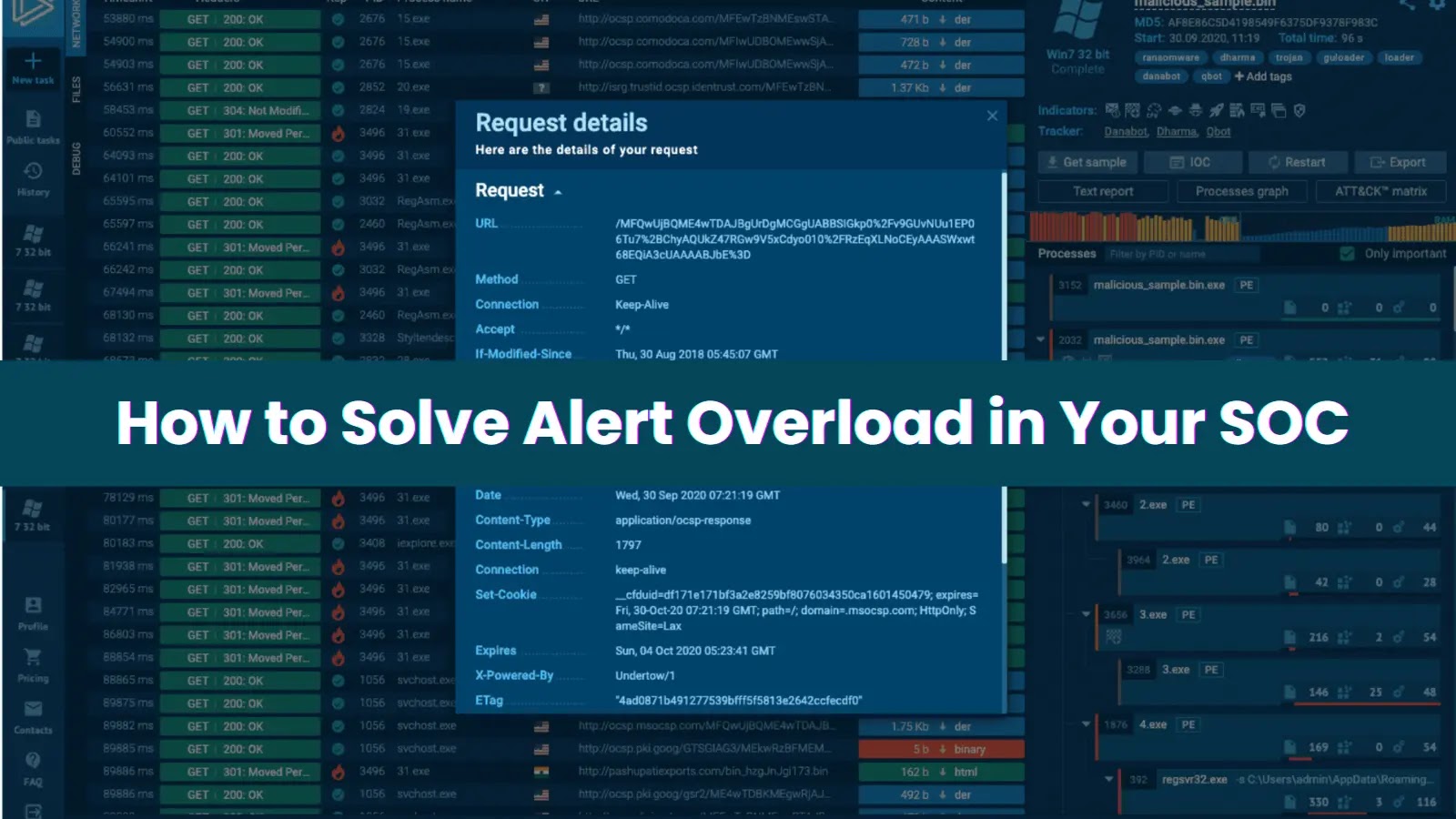

The researcher, experimenting with Custom GPTs, a premium ChatGPT Plus tool for building tailored AI assistants, noticed the “Actions” section. This lets users define external APIs via OpenAPI schemas, allowing the GPT to call them for tasks like weather lookups.
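To make the attack surface concrete, here is a hypothetical OpenAPI schema for a weather-lookup action, written as a Python dictionary, plus a small helper that lists the URLs such a schema would cause a caller to request. The schema contents, the host `weather.example.com`, and the helper name are all invented for illustration; the point is only that the `servers` entry is user-supplied.

```python
# Hypothetical action schema; every value here is illustrative.
WEATHER_ACTION_SCHEMA = {
    "openapi": "3.1.0",
    "info": {"title": "Weather lookup (hypothetical)", "version": "1.0.0"},
    "servers": [{"url": "https://weather.example.com"}],
    "paths": {
        "/forecast": {
            "get": {
                "operationId": "getForecast",
                "parameters": [
                    {
                        "name": "city",
                        "in": "query",
                        "required": True,
                        "schema": {"type": "string"},
                    }
                ],
                "responses": {"200": {"description": "Forecast for the city"}},
            }
        }
    },
}

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def request_targets(schema):
    """List (METHOD, URL) pairs that the schema asks the caller to fetch."""
    targets = []
    for server in schema.get("servers", []):
        base = server["url"].rstrip("/")
        for path, operations in schema.get("paths", {}).items():
            for method in operations:
                if method in HTTP_METHODS:  # skip non-operation keys
                    targets.append((method.upper(), base + path))
    return targets
```

Whoever writes the schema chooses every URL this helper returns, which is why the server making the request has to treat those URLs as untrusted input.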

The interface includes a “Test” button to verify requests and supports authentication headers. Recognizing the potential for SSRF, the researcher tested by pointing the API URL to Azure’s Instance Metadata Service (IMDS).

Initial attempts failed because the feature enforced HTTPS URLs, whereas IMDS uses HTTP. Undeterred, the researcher bypassed this using a 302 redirect from an external HTTPS endpoint (via tools like ssrf.cvssadvisor.com) to the internal metadata URL. The server followed the redirect, but Azure blocked access without the “Metadata: true” header.
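The redirect bypass works when a policy such as “HTTPS only” is applied to the first URL but not to the locations the server is redirected to. A minimal sketch of the alternative, re-checking the policy on every hop, might look like the following. The `fetch` callable, which returns a `(status, location)` pair, and all other names are assumptions for illustration and do not reflect OpenAI’s actual fix.

```python
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}


def https_only(url):
    """Example policy: the same HTTPS requirement, applied per hop."""
    return urlsplit(url).scheme == "https"


def resolve_final_url(url, fetch, is_allowed=https_only, max_hops=5):
    """Follow redirects manually, validating each destination before fetching it.

    `fetch(url)` is a caller-supplied function returning (status, location)
    without following redirects itself.
    """
    current = url
    for _ in range(max_hops + 1):
        if not is_allowed(current):
            raise ValueError(f"blocked destination: {current}")
        status, location = fetch(current)
        if status not in REDIRECT_CODES or not location:
            return current  # final, non-redirect response
        current = urljoin(current, location)  # handles relative Location values
    raise ValueError("too many redirects")
```

With this structure, an external HTTPS endpoint that answers with a 302 to an `http://` metadata address is rejected at the second hop instead of being followed.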

Further probing revealed a workaround: the authentication settings allowed custom “API keys.” Naming one “Metadata” with the value “true” injected the required header.
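The header injection can be pictured as a feature that copies a user-chosen key name and value straight into the outgoing request headers. The sketch below contrasts that naive behavior with a guarded variant that refuses header names used by cloud metadata services. Both functions and the reserved-name list are hypothetical and only illustrate the idea; the list is not exhaustive.

```python
# Header names that cloud metadata services expect; illustrative, not exhaustive.
RESERVED_HEADER_NAMES = {
    "metadata",                  # Azure IMDS ("Metadata: true")
    "metadata-flavor",           # Google Cloud ("Metadata-Flavor: Google")
    "x-aws-ec2-metadata-token",  # AWS IMDSv2 session token
}


def naive_auth_headers(key_name, key_value):
    """Hypothetical behavior: the custom key becomes a header verbatim."""
    return {key_name: key_value}


def guarded_auth_headers(key_name, key_value):
    """Same mapping, but reject names that would impersonate a metadata client."""
    if key_name.strip().lower() in RESERVED_HEADER_NAMES:
        raise ValueError(f"header name not allowed: {key_name!r}")
    return {key_name: key_value}
```

Calling `naive_auth_headers("Metadata", "true")` yields exactly the header Azure requires, while the guarded variant raises an error for the same input.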

Success! The GPT returned IMDS data, including an OAuth2 token for Azure’s management API (requested via /metadata/identity/oauth2/token?resource=…).

This token granted direct access to OpenAI’s cloud environment, enabling resource enumeration or escalation.

The impact was severe. In cloud setups, such tokens could be used to pivot to full compromise, as seen in past Open Security pentests where SSRF led to remote code execution across hundreds of instances.

For ChatGPT, it risked leaking production secrets, though the researcher noted it wasn’t the most catastrophic they’d found.

Reported promptly to OpenAI’s Bugcrowd program, the vulnerability was assigned high severity and received a swift patch. OpenAI confirmed the fix, preventing further exploitation.
