A new and ominous player has emerged in the rapidly expanding landscape of “Shadow AI.” Researchers at Resecurity have identified DIG AI, an uncensored artificial intelligence tool hosted on the darknet that is empowering threat actors to automate cyberattacks, generate illicit content, and bypass the safety guardrails of conventional AI models.

First detected on September 29, 2025, the tool has seen a surge in adoption throughout Q4, notably during the winter holiday season.

This development marks a significant escalation in the “criminalization of AI,” lowering the barrier to entry for sophisticated cyberattacks and posing severe risks ahead of major world events in 2026, including the Winter Olympics in Milan and the FIFA World Cup.

How DIG AI Works

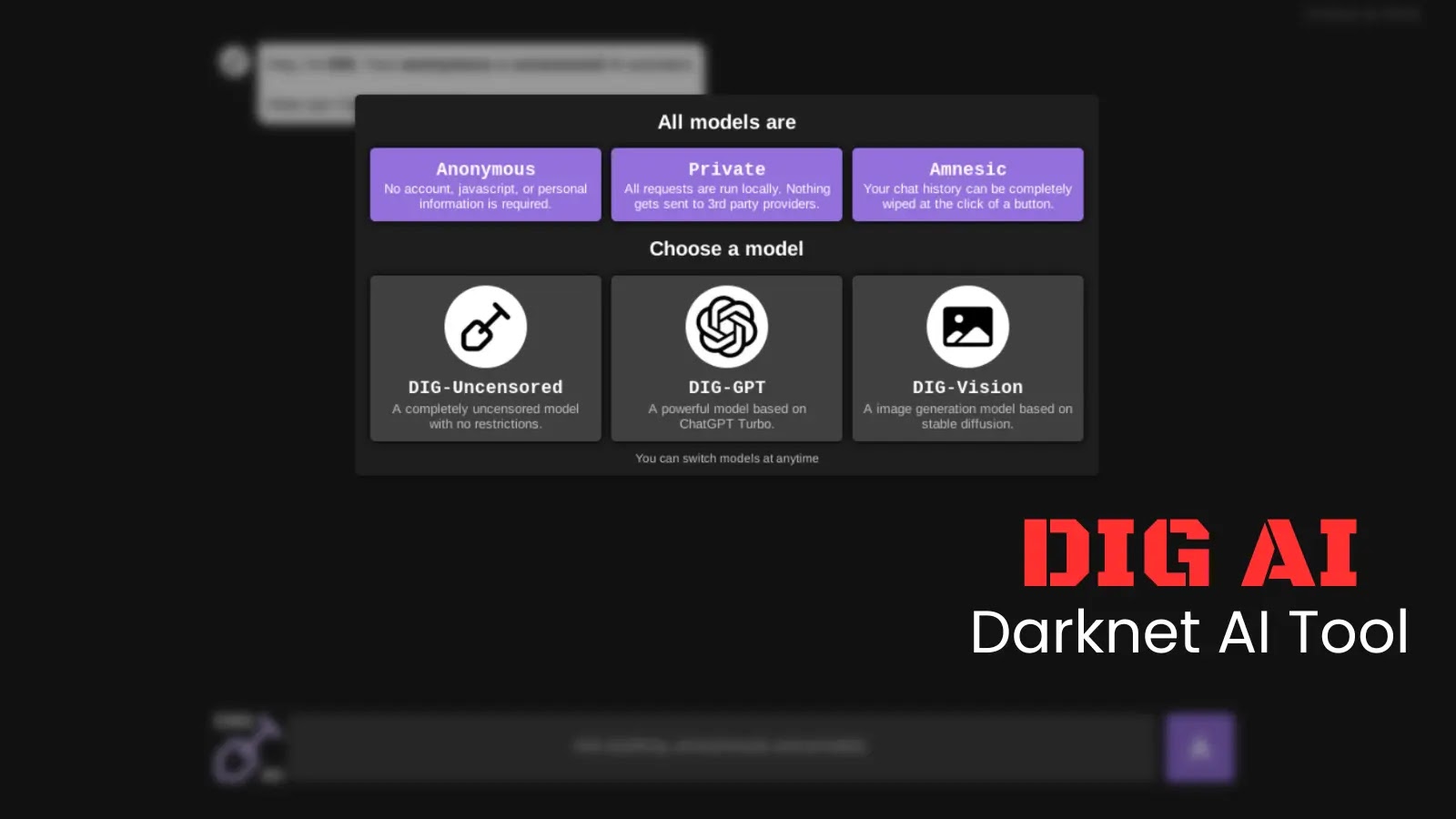

Unlike legitimate platforms that enforce strict ethical guidelines, DIG AI is explicitly designed to have none. Accessible via the Tor network, it requires no account registration, ensuring full anonymity for its users. The platform offers a suite of specialized models, as revealed in interface screenshots obtained by investigators:

DIG-Uncensored: A fully unrestricted model for generating prohibited text and code.

DIG-GPT: A powerful text model reportedly based on a “jailbroken” version of ChatGPT Turbo.

DIG-Vision: An image generation model based on Stable Diffusion, used for creating deepfakes and illicit imagery.

The tool’s operator, a threat actor known by the alias “Pitch,” actively promotes the service on underground marketplaces alongside narcotics and compromised financial data.

Automating Malicious Code and Exploits

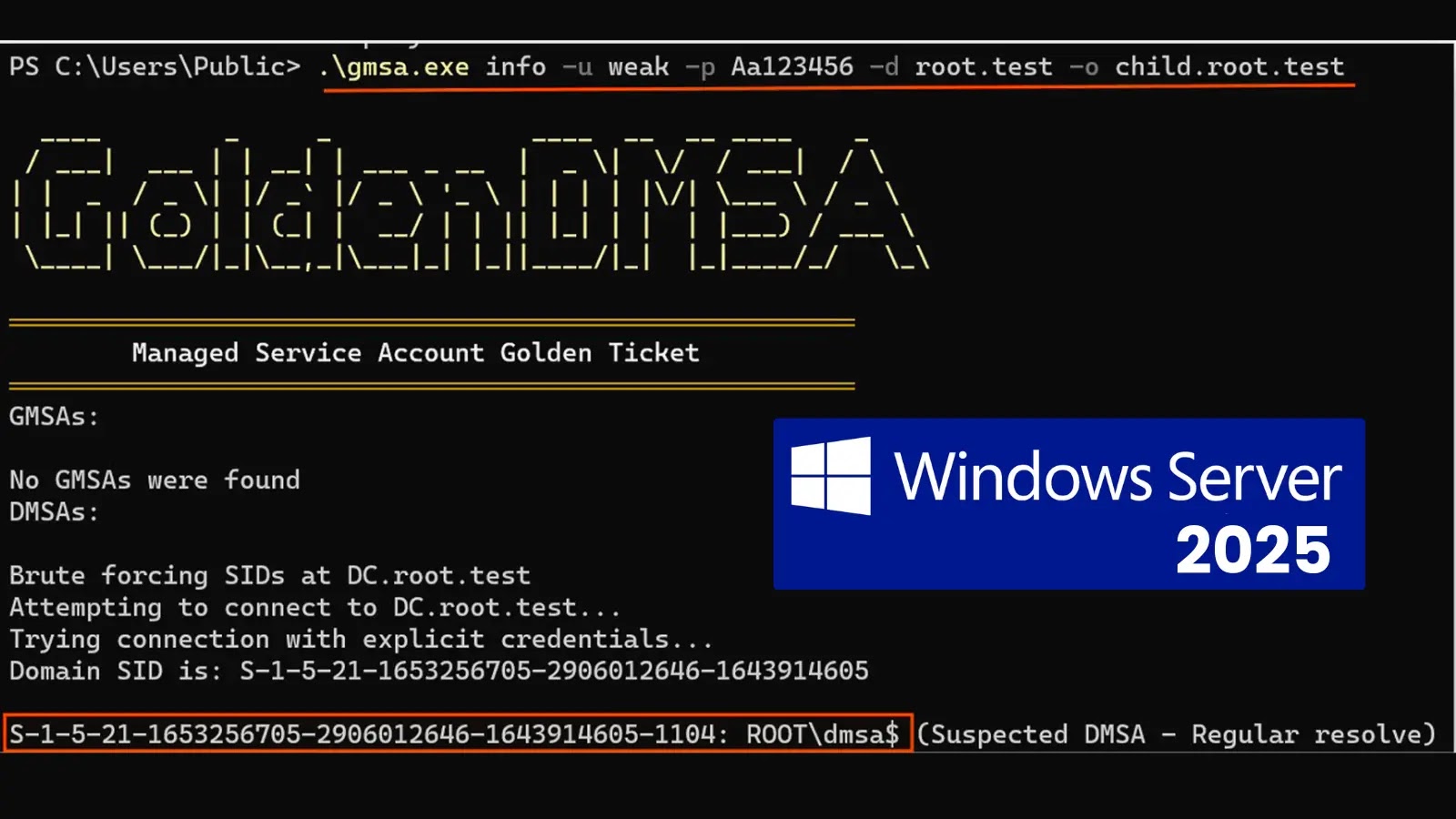

One of the most alarming capabilities of DIG AI is its ability to generate functional malicious code. Resecurity analysts successfully used the tool to create obfuscated JavaScript backdoors designed to compromise web applications.

Screenshots of the tool in action show it processing requests to “generate and obfuscate malicious script,” producing code designed to be stealthy and hard to detect.

The generated output acts as a web shell, allowing attackers to steal user data, redirect traffic to phishing sites, or inject further malware.
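For defenders, one rough way to triage scripts of this kind is to look for common obfuscation indicators. The following is a minimal sketch, not a method described by Resecurity: the pattern names, the `score_script` helper, and the entropy threshold are all hypothetical choices made for illustration, and a real detection pipeline would need far more context to avoid false positives.

```python
import math
import re
from collections import Counter

# Hypothetical indicator patterns, chosen for illustration only.
# They are not taken from the Resecurity report.
SUSPICIOUS_PATTERNS = {
    "eval_call": re.compile(r"\beval\s*\("),
    "function_constructor": re.compile(r"\bnew\s+Function\s*\("),
    "base64_decode": re.compile(r"\batob\s*\("),
    "char_code_build": re.compile(r"String\.fromCharCode"),
    # Eight or more consecutive \xNN escapes in the script text.
    "hex_escapes": re.compile(r"(?:\\x[0-9a-fA-F]{2}){8,}"),
}


def shannon_entropy(text: str) -> float:
    """Return the Shannon entropy of the text in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def score_script(source: str, entropy_threshold: float = 5.0) -> dict:
    """Flag a script when it matches two or more indicators or has high entropy.

    The threshold of 5.0 bits per character is an assumed value, not a
    calibrated one.
    """
    hits = [name for name, pattern in SUSPICIOUS_PATTERNS.items()
            if pattern.search(source)]
    entropy = shannon_entropy(source)
    return {
        "indicators": hits,
        "entropy": entropy,
        "flagged": len(hits) >= 2 or entropy > entropy_threshold,
    }
```

A heuristic like this can only prioritize files for human review. Legitimate minified JavaScript often trips the same indicators, and deliberately stealthy code may trip none of them.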

| Feature | DIG AI | Legitimate AI (e.g., ChatGPT) |
| --- | --- | --- |
| Access | Darknet (Tor), No Account | Public Web, Account Required |
| Censorship | None (Uncensored) | Strict Safety Filters |
| Primary Use | Malware, Fraud, CSAM | Productivity, Coding, Learning |
| Cost Model | Free / Premium for Speed | Free / Subscription |
| Infrastructure | Hidden / Bulletproof Hosting | Cloud Infrastructure |

While complex operations like code obfuscation can take 3–5 minutes due to limited computing resources, the authors offer premium “for-fee” services to mitigate these delays, effectively creating a “Crime-as-a-Service” model for AI.

Beyond cybercrime, DIG AI is being weaponized to cause severe real-world harm. The tool has been observed producing detailed instructions for manufacturing explosives and prohibited drugs.

Most critically, the “DIG-Vision” model facilitates the creation of Child Sexual Abuse Material (CSAM). Resecurity confirmed the tool can generate hyper-realistic synthetic images or manipulate real photos of minors, creating a nightmare scenario for child safety advocates and law enforcement.

“This issue will present a new challenge for legislators,” note Resecurity analysts. “Offenders can run models on their own infrastructure… producing unlimited illegal content that online platforms cannot detect.”

DIG AI represents the latest evolution in “Not Good AI” tools, often called “Dark LLMs” or jailbroken chatbots. Following in the footsteps of predecessors like FraudGPT and WormGPT, these tools are seeing explosive growth, with mentions of malicious AI on cybercriminal forums increasing by over 200% between 2024 and 2025.

As 2026 approaches, the cybersecurity community faces a “fifth domain of warfare.” With bad actors capable of automating attacks and producing endless variations of malicious content, the fight against weaponized AI is no longer a future prediction; it is an urgent present reality.
