As synthetic intelligence methods turn out to be extra autonomous, their means to work together with digital instruments and knowledge introduces advanced new dangers.

Recognizing this problem, researchers from NVIDIA and Lakera AI have collaborated on a brand new paper proposing a unified framework for the protection and safety of those superior “agentic” methods.

The proposal addresses the shortcomings of conventional safety fashions in managing the novel threats posed by AI brokers that may take actions in the true world.

The core of the proposed framework strikes past viewing security as a static function of a mannequin.

As a substitute, it treats security and safety as interconnected properties that emerge from the dynamic interactions between AI fashions, their orchestration, the instruments they use, and the information they entry.

This holistic method is designed to determine and handle dangers throughout your complete lifecycle of an agentic system, from improvement to deployment.

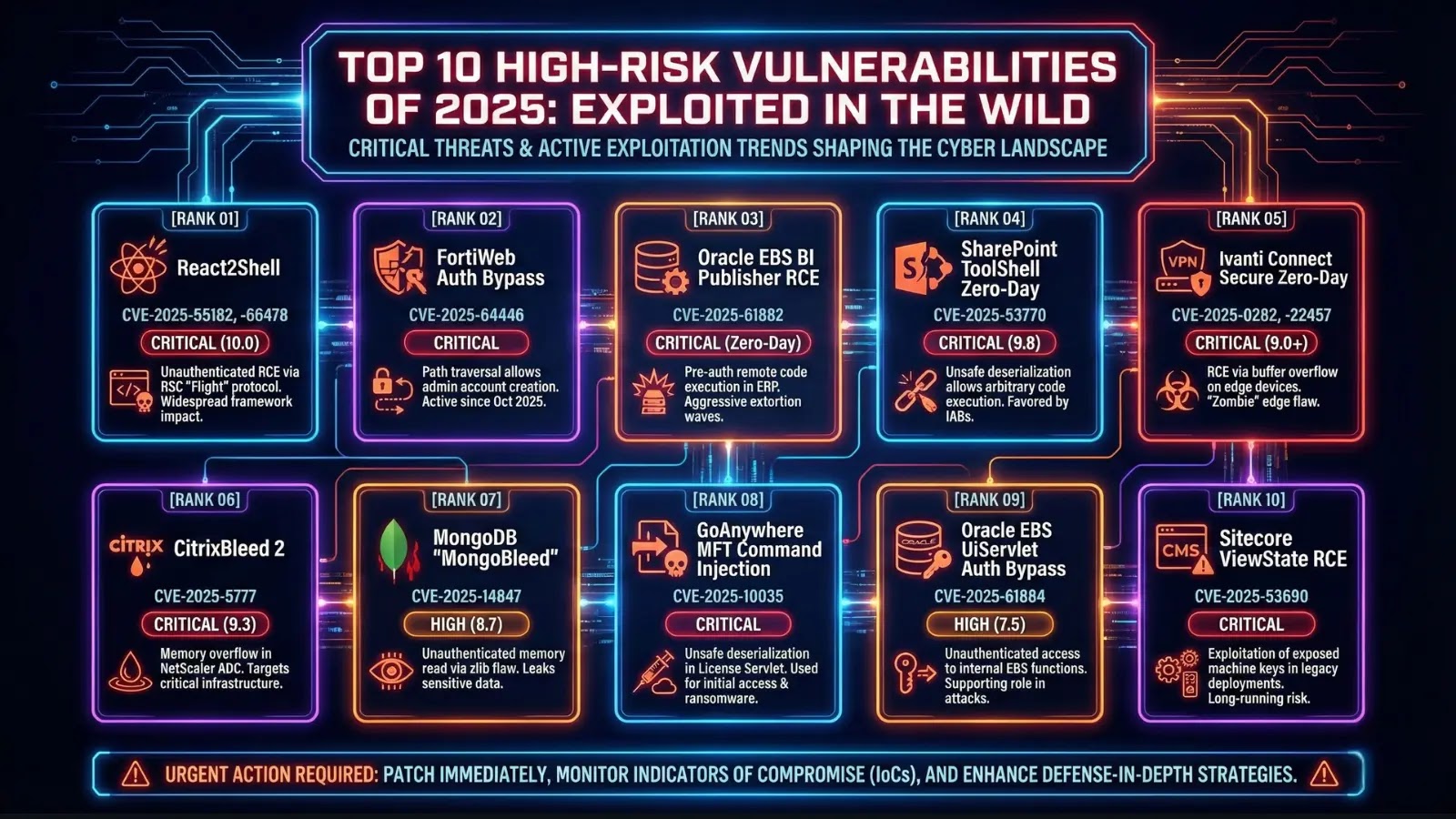

Agentic System Security and Safety Framework (Supply – Arxiv)

Arxiv safety researchers famous that typical safety evaluation instruments, such because the Widespread Vulnerability Scoring System (CVSS), are inadequate for addressing the distinctive dangers in agentic AI.

A minor safety flaw on the part stage, they recognized, might cascade into important, system-wide consumer hurt.

This new mannequin introduces a extra complete technique for evaluating these advanced methods, as illustrated within the framework’s architectural diagram.

It offers a structured method to understanding how localized hazards can compound and result in surprising, large-scale failures.

The framework is designed to be operational for enterprise-grade workflows, making certain that as brokers turn out to be extra built-in into enterprise processes, their actions stay aligned with security and safety insurance policies.

AI-Pushed Threat Discovery

The paper delves deeper into the essential section of threat discovery, which depends on an modern AI-driven pink teaming course of. Inside a sandboxed atmosphere, specialised “evaluator” AI brokers are used to probe the first agentic system for weaknesses.

These probes simulate varied assault eventualities, from immediate injections to classy makes an attempt at device misuse, to uncover potential vulnerabilities earlier than they are often exploited.

This automated analysis permits builders to determine and mitigate novel agentic dangers, reminiscent of unintended management amplification or cascading motion chains, in a managed setting.

To assist the development of this subject, the researchers have additionally launched a complete dataset, the Nemotron-AIQ Agentic Security Dataset 1.0. It accommodates over 10,000 detailed traces of agent behaviors throughout assault and protection simulations.

This useful resource gives the broader group a invaluable device for learning and creating extra sturdy security measures for the subsequent era of agentic AI. The continued analysis guarantees to supply evolving insights into the operational conduct of those advanced methods.

Observe us on Google Information, LinkedIn, and X to Get Extra Instantaneous Updates, Set CSN as a Most well-liked Supply in Google.