A groundbreaking open-source benchmark suite called CyberSOCEval has emerged as the first comprehensive evaluation framework for Large Language Models (LLMs) in Security Operations Center (SOC) environments.

Released as part of CyberSecEval 4, this benchmark addresses critical gaps in cybersecurity AI evaluation by focusing on two essential defensive domains: Malware Analysis and Threat Intelligence Reasoning.

The research, conducted by Meta and CrowdStrike, shows that current AI systems are far from saturating these security-focused evaluations, with accuracy scores ranging from roughly 15% to 28% on malware analysis tasks and 43% to 53% on threat intelligence reasoning.

Key Takeaways
1. CyberSOCEval is the first open-source benchmark testing LLMs on Security Operations Center tasks.
2. Current LLMs achieve only 15-28% accuracy on malware analysis and 43-53% on threat intelligence.
3. 609 malware questions and 588 threat intelligence questions evaluate AI systems on JSON logs, MITRE ATT&CK mappings, and complex attack chains.
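Accuracy figures like these come from comparing a model's chosen answer options against an answer key. The article does not spell out the scoring rule, so the following is only a minimal sketch, assuming multiple-choice questions that may have several correct options and a strict rule where a response counts only if the selected set exactly matches the key (no partial credit). The `Question` record and `exact_match_accuracy` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    """Hypothetical record: one benchmark question and its answer key."""
    qid: str
    correct: frozenset[str]  # e.g. frozenset({"A", "C"})


def exact_match_accuracy(questions: list[Question], answers: dict[str, set[str]]) -> float:
    """Fraction of questions whose selected options exactly match the key.

    Assumption: an unanswered question counts as wrong, and there is no partial credit.
    """
    if not questions:
        return 0.0
    hits = sum(
        1 for q in questions
        if frozenset(answers.get(q.qid, set())) == q.correct
    )
    return hits / len(questions)


questions = [
    Question("q1", frozenset({"A"})),
    Question("q2", frozenset({"B", "D"})),
    Question("q3", frozenset({"C"})),
    Question("q4", frozenset({"A", "E"})),
]
# q2 is only partially right and q4 is unanswered, so both score zero.
model_answers = {"q1": {"A"}, "q2": {"B"}, "q3": {"C"}}
print(f"accuracy = {exact_match_accuracy(questions, model_answers):.0%}")
```

Under this strict rule, picking only some of the correct options earns nothing. That is one reason multi-answer questions can push scores well below what single-answer multiple choice would suggest.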

These results highlight significant room for improvement in AI cyber defense capabilities.

CyberSOCEval Malware Analysis

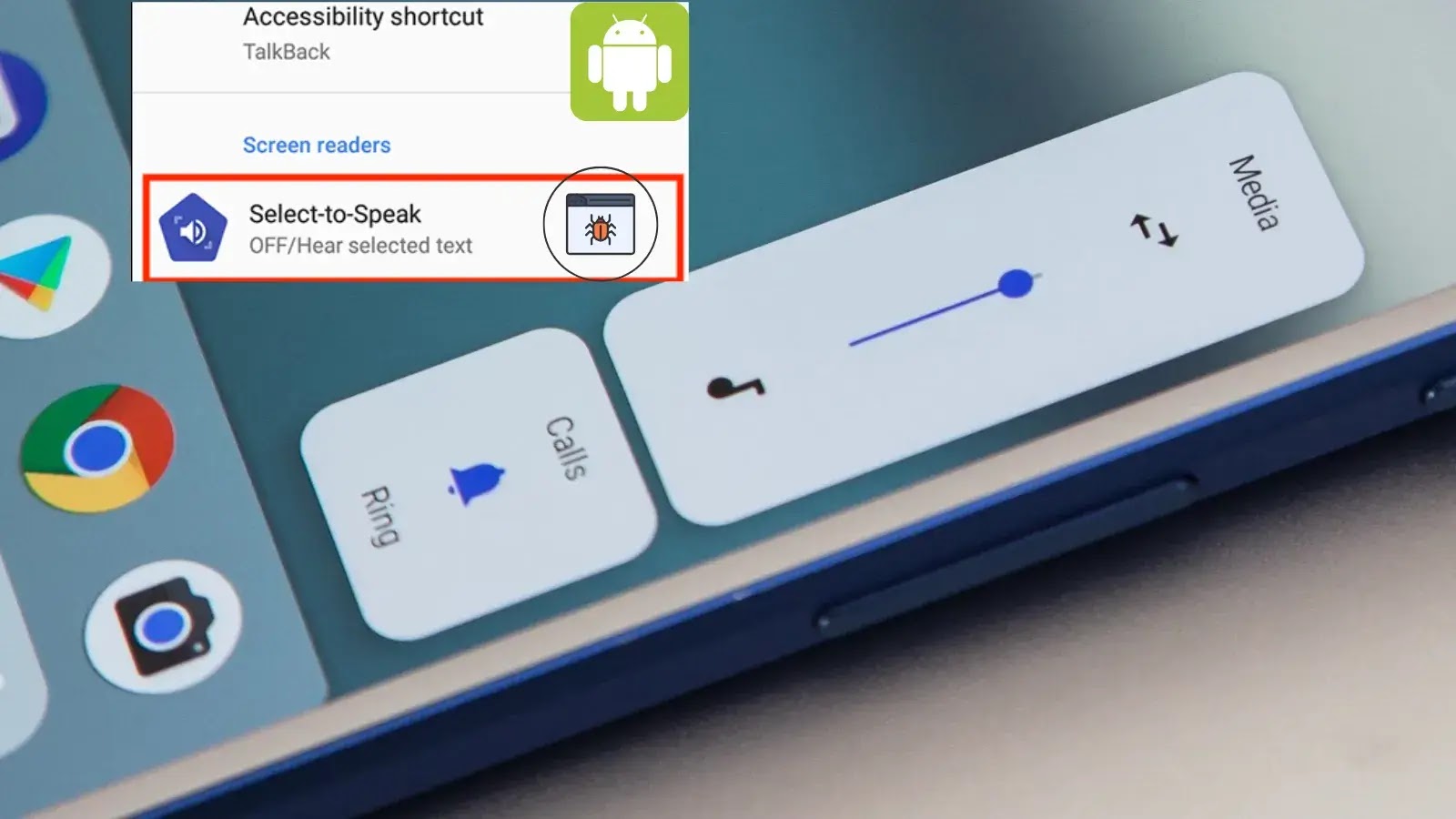

CyberSOCEval’s Malware Analysis component uses real sandbox detonation data from CrowdStrike Falcon® Sandbox to build 609 question-answer pairs across five malware categories: ransomware, Remote Access Trojans (RATs), infostealers, EDR/AV killers, and user-mode (UM) unhooking techniques.

The benchmark evaluates AI systems’ ability to interpret complex JSON-formatted system logs, process trees, network traffic, and MITRE ATT&CK framework mappings.
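Many questions built on these logs hinge on reconstructing which process spawned which. The sketch below shows how flat process records become an indented process tree. It assumes a hypothetical, heavily simplified JSON layout with `processes`, `pid`, `ppid`, and `name` fields. This is not the actual Falcon Sandbox report schema.

```python
import json
from collections import defaultdict

# Hypothetical, simplified detonation log; real sandbox reports use their own,
# much larger schema.
REPORT = """
{
  "processes": [
    {"pid": 100, "ppid": 0,   "name": "explorer.exe"},
    {"pid": 200, "ppid": 100, "name": "invoice.exe"},
    {"pid": 300, "ppid": 200, "name": "cmd.exe"},
    {"pid": 400, "ppid": 300, "name": "vssadmin.exe"},
    {"pid": 500, "ppid": 200, "name": "rundll32.exe"}
  ]
}
"""


def build_tree(report: dict) -> tuple[dict[int, str], dict[int, list[int]], list[int]]:
    """Index processes by PID and group each child under its parent PID."""
    names = {p["pid"]: p["name"] for p in report["processes"]}
    children: dict[int, list[int]] = defaultdict(list)
    roots: list[int] = []
    for p in report["processes"]:
        if p["ppid"] in names:
            children[p["ppid"]].append(p["pid"])
        else:
            roots.append(p["pid"])  # parent not in the log: treat as a root
    return names, children, roots


def render(names: dict[int, str], children: dict[int, list[int]], pid: int, depth: int = 0) -> list[str]:
    """Depth-first walk producing one indented line per process."""
    lines = ["  " * depth + f"{names[pid]} ({pid})"]
    for child in children.get(pid, []):
        lines.extend(render(names, children, child, depth + 1))
    return lines


names, children, roots = build_tree(json.loads(REPORT))
for root in roots:
    print("\n".join(render(names, children, root)))
```

The tree view makes a chain like `invoice.exe` → `cmd.exe` → `vssadmin.exe` visible at a glance. In the flat JSON, that chain is spread across separate records.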

Technical specifications include support for models with context windows of up to 128,000 tokens, with filtering mechanisms that reduce report size while preserving performance.
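The article does not detail how this filtering works. One possible approach is to drop whole low-priority report sections until an estimated token count fits a budget. The sketch below assumes hypothetical section names, a priority order, and a rough 4-characters-per-token estimate.

```python
import json
import math

# Assumption: roughly 4 characters per token. A real pipeline would count
# tokens with the target model's own tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(obj: object) -> int:
    """Rough token estimate for a JSON-serializable object."""
    return math.ceil(len(json.dumps(obj)) / CHARS_PER_TOKEN)


def filter_report(report: dict, budget: int, drop_order: list[str]) -> dict:
    """Return a copy of `report` with top-level sections removed, lowest
    priority first, until the estimate fits within `budget`.

    If every listed section is dropped and the report is still too large,
    the oversized remainder is returned, so callers should re-check it.
    """
    filtered = dict(report)
    for section in drop_order:
        if estimate_tokens(filtered) <= budget:
            break
        filtered.pop(section, None)
    return filtered


# Hypothetical report: a huge strings dump would crowd out everything else.
report = {
    "mitre_attack": ["T1055.001", "T1112"],
    "processes": [{"pid": 200, "name": "invoice.exe"}],
    "strings": ["A" * 4000],
}
small = filter_report(report, budget=100, drop_order=["strings", "processes"])
print(sorted(small), estimate_tokens(small))
```

In practice, the budget would be the model's context size (up to 128,000 tokens here) minus room for the prompt and the answer. Dropping whole sections keeps the remaining JSON valid, which is harder to guarantee with blind truncation.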

The evaluation covers critical cybersecurity concepts, including T1055.001 (Process Injection: Dynamic-link Library Injection), T1112 (Modify Registry), and API calls such as CreateRemoteThread, VirtualAlloc, and WriteProcessMemory.
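The API calls named above are building blocks of classic remote-thread injection. In this pattern, one process allocates memory inside another, writes a payload into it, and starts a thread there. VirtualAllocEx is the cross-process counterpart of VirtualAlloc.

The sketch below is a minimal heuristic that flags this allocate-write-execute sequence against a foreign process. It assumes a hypothetical normalized trace format with `caller_pid`, `api`, and `target_pid` fields. It is an illustration, not a production detector.

```python
from dataclasses import dataclass

# Allocate -> write -> execute in another process: the textbook remote-thread
# injection sequence.
INJECTION_SEQUENCE = ("VirtualAllocEx", "WriteProcessMemory", "CreateRemoteThread")


@dataclass(frozen=True)
class ApiCall:
    """Hypothetical normalized record of one API call from a sandbox trace."""
    caller_pid: int
    api: str
    target_pid: int


def find_injection_targets(calls: list[ApiCall]) -> set[tuple[int, int]]:
    """Return (caller_pid, target_pid) pairs where the full sequence occurs in
    order against a process other than the caller. Unrelated calls in between
    are ignored (subsequence matching)."""
    progress: dict[tuple[int, int], int] = {}
    hits: set[tuple[int, int]] = set()
    for call in calls:
        if call.caller_pid == call.target_pid:
            continue  # in-process activity is not remote injection
        key = (call.caller_pid, call.target_pid)
        step = progress.get(key, 0)
        if step < len(INJECTION_SEQUENCE) and call.api == INJECTION_SEQUENCE[step]:
            step += 1
            progress[key] = step
            if step == len(INJECTION_SEQUENCE):
                hits.add(key)
    return hits


trace = [
    ApiCall(200, "VirtualAllocEx", 500),
    ApiCall(200, "ReadFile", 200),
    ApiCall(200, "WriteProcessMemory", 500),
    ApiCall(200, "CreateRemoteThread", 500),
    ApiCall(200, "VirtualAllocEx", 600),  # allocation only, never executed
]
print(find_injection_targets(trace))
```

Tracking progress per (caller, target) pair keeps an allocation in one process from being combined with a thread creation in another.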

CyberSOCEval Threat Intelligence Reasoning

The Threat Intelligence Reasoning benchmark comprises 588 question-answer pairs derived from 45 distinct threat intelligence reports sourced from CrowdStrike, CISA, NSA, and IC3.

Unlike existing frameworks such as CTIBench and SEvenLLM, CyberSOCEval incorporates multimodal intelligence reports that combine textual indicators of compromise (IOCs) with tables and diagrams.

The evaluation methodology uses both category-based and relationship-based question generation, using the Llama 3.2 90B and Llama 4 Maverick models.

Figure: Detonation report distribution by malware attack; distribution by topic and difficulty

Questions require multi-hop reasoning across threat actor relationships, malware attribution, and complex attack chain analysis mapped to frameworks such as MITRE ATT&CK.
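Multi-hop means the answer is only reachable by chaining several stated facts, for example actor → malware → technique. The sketch below shows how such a question reduces to following relations hop by hop. Reversing the direction gives attribution. It assumes the relationships have already been extracted into (subject, relation, object) triples, and the actor and malware names are invented for illustration.

```python
# Hypothetical relationship triples of the kind a threat intelligence report
# might state; actor and malware names are invented for illustration.
TRIPLES = [
    ("ActorA", "uses", "MalwareX"),
    ("ActorA", "uses", "MalwareY"),
    ("MalwareX", "implements", "T1486"),
    ("MalwareY", "implements", "T1055.001"),
    ("ActorB", "uses", "MalwareZ"),
    ("MalwareZ", "implements", "T1112"),
]


def follow(entities: set[str], relation: str, reverse: bool = False) -> set[str]:
    """One hop: entities linked to `entities` by `relation`. With reverse=True,
    walk the edge from object back to subject."""
    if reverse:
        return {s for s, r, o in TRIPLES if o in entities and r == relation}
    return {o for s, r, o in TRIPLES if s in entities and r == relation}


def multi_hop(start: str, hops: list[tuple[str, bool]]) -> set[str]:
    """Chain hops of (relation, reverse) starting from a single entity."""
    frontier = {start}
    for relation, reverse in hops:
        frontier = follow(frontier, relation, reverse)
    return frontier


# Forward: which techniques does ActorA's malware implement?
print(sorted(multi_hop("ActorA", [("uses", False), ("implements", False)])))
# Backward (attribution): which actor uses malware implementing T1112?
print(sorted(multi_hop("T1112", [("implements", True), ("uses", True)])))
```

For a model reading raw reports, the hard part is extracting and connecting these facts across paragraphs, tables, and diagrams. Once they exist as explicit triples, the traversal itself is trivial.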

Reasoning models that leverage test-time scaling did not show the performance gains seen in coding and mathematics, suggesting that cybersecurity-specific reasoning training is a key development opportunity, Meta said.

The benchmark’s open-source nature encourages community contributions and gives practitioners reliable metrics for model selection. It also offers AI developers a clear roadmap for improving cyber defense capabilities.
