Artificial Intelligence, particularly in its agentic AI form, is becoming a mainstay of business, and it is attracting new and different attacks that must be defended against.

aiFWall Inc emerged from stealth on January 21, 2026. This is not yet the full launch of the company, but CEO and founder Vimal Vaidya has decided to make the basic product available for free. That product, aiFWall, is a firewall for AI deployments that uses AI to improve its own performance. A fundamental feature of the product is that it is two-way: it filters inputs to the AI that might harm it, and it filters outputs from the AI that may contain toxicity or bias.

Products to secure AI already exist, but according to Vaidya they tend to have fundamental weaknesses. One is that they are not contextual. While in-house AI deployments are very much contextual, most security solutions are not: they only look at the current real-time data. Such systems may examine a new user input, decide on the spot whether it is valid or invalid, and allow or block it on that evidence alone. But what seems invalid now could be valid in the context of a user interaction from six months ago. “For that, you need to understand how the user has behaved previously to know the current intent,” says Vaidya.
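The difference between a stateless check and a contextual one can be illustrated with a minimal sketch. This is not aiFWall's implementation. It assumes that an upstream classifier has already assigned each prompt a topic label, and the names `UserHistory`, `stateless_verdict` and `contextual_verdict`, along with the 365-day look-back window, are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class UserHistory:
    """Hypothetical per-user record: topic label -> timestamps of past prompts."""
    topics: dict[str, list[datetime]] = field(default_factory=dict)

    def record(self, topic: str, when: datetime) -> None:
        self.topics.setdefault(topic, []).append(when)


def stateless_verdict(topic: str, sensitive: set[str]) -> bool:
    """Judge the prompt in isolation: allow only if its topic is not sensitive."""
    return topic not in sensitive


def contextual_verdict(
    topic: str,
    sensitive: set[str],
    history: UserHistory,
    now: datetime,
    window: timedelta = timedelta(days=365),  # arbitrary look-back, an assumption
) -> bool:
    """Allow a sensitive-topic prompt if this user raised the same topic within `window`."""
    if stateless_verdict(topic, sensitive):
        return True
    return any(now - seen <= window for seen in history.topics.get(topic, []))
```

If a user asked about "payroll" 180 days ago, `stateless_verdict` still blocks a new payroll prompt, while `contextual_verdict` allows it because the earlier interaction makes the request plausible. A user with no such history is blocked by both.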

A second, he asserts, is a failure to deliver just-in-time protection as threats emerge. To counter this, aiFWall (with the customer’s and users’ consent) collects the prompts, including malicious prompts, and feeds them into its own central AI engine. This produces ‘threat markers’ that are then distributed and immediately made available to every deployed aiFWall. It should be noted that this interaction with the central intelligence is not part of the free version. It is part of a paid subscription to the full version, which will be available after the official launch of the company and product.
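The collect, analyze and redistribute loop described above can be sketched as a simple publish/subscribe pattern. This is only an illustration under stated assumptions: the central AI analysis is replaced by a placeholder that treats each normalized malicious prompt as a marker, markers are matched as plain substrings, and the classes `CentralEngine` and `FirewallInstance` are hypothetical, not part of any aiFWall API.

```python
class FirewallInstance:
    """Hypothetical deployed firewall that blocks prompts containing known markers."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.markers: set[str] = set()

    def receive_markers(self, markers: set[str]) -> None:
        # New markers take effect immediately for the next prompt checked.
        self.markers |= markers

    def allows(self, prompt: str) -> bool:
        text = prompt.lower()
        return not any(marker in text for marker in self.markers)


class CentralEngine:
    """Hypothetical central service that turns reported prompts into markers."""

    def __init__(self) -> None:
        self.instances: list[FirewallInstance] = []
        self.markers: set[str] = set()

    def register(self, instance: FirewallInstance) -> None:
        self.instances.append(instance)
        instance.receive_markers(set(self.markers))  # late joiners get the backlog

    def ingest(self, reports: list[tuple[str, bool]]) -> set[str]:
        """Take (prompt, is_malicious) pairs; push any new markers to every instance."""
        # Placeholder for the AI analysis: the normalized malicious prompt is the marker.
        new = {p.strip().lower() for p, bad in reports if bad} - self.markers
        if new:
            self.markers |= new
            for instance in self.instances:
                instance.receive_markers(new)
        return new
```

A malicious prompt reported by one deployment becomes a marker that every registered deployment enforces on its next check, including deployments that register later. Exact substring matching is deliberately crude here. A real system would derive more general markers.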

A third point is that this AI firewall is self-learning on AI viruses. Like mainstream network firewalls, it reports a new virus back to the developer, who then distributes the means of recognizing it to all other installations. aiFWall does this for the growing number of viruses specifically designed to attack corporate AI installations.

Just as the in-house use of agentic AI systems is new, so too are viruses specifically designed to target it. But they already exist, and their number is growing. PromptLock was the first, discovered in August 2025. In November, Google described its discovery of several others that harness the target’s own agentic AI systems. Many were experimental, but some had also been seen in the wild.

Also in November, Anthropic published details of a sophisticated attack it first detected in September. “The threat actor – whom we assess with high confidence was a Chinese state-sponsored group – manipulated our Claude Code tool into attempting infiltration into roughly thirty global targets and succeeded in a small number of cases.”

Most of these viruses are early attempts by attackers, who are using them as learning tools. But there is little doubt that they will increase in quantity and quality.

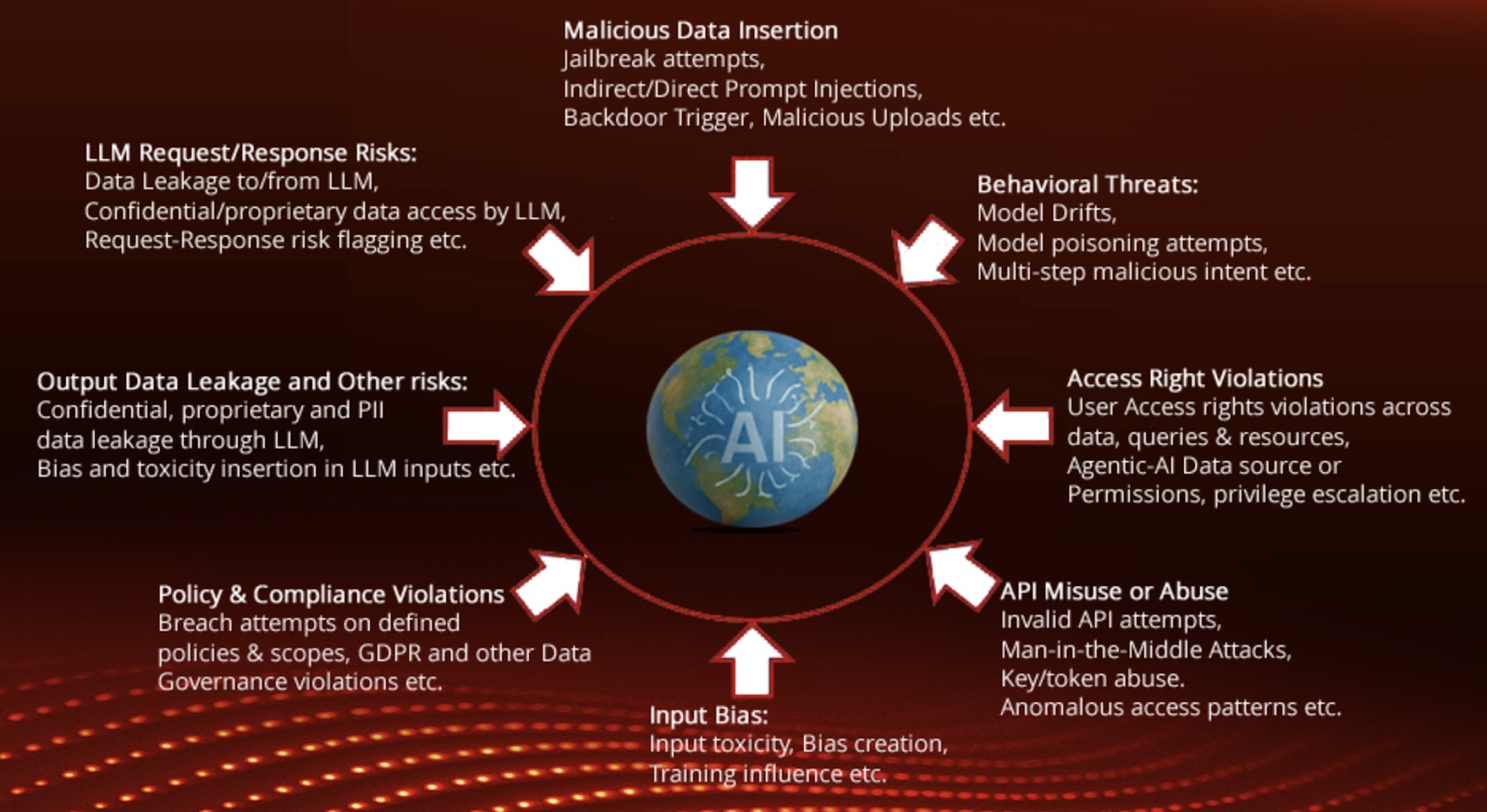

The big difference between aiFWall and mainstream firewalls is that it is a two-way defense around the AI. It stops inbound attacks (such as malicious data poisoning prompts, viral attacks, attacks against the LLM API, and denial of service attacks), and it filters any outbound toxicity, bias or compliance issues (exposures contravening, for example, GDPR, CCPA, HIPAA, and PCI) in the AI’s responses.
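The two-way idea, checking the prompt before it reaches the model and checking the response before it reaches the user, can be shown as a wrapper around any model call. This is a minimal sketch that does not describe how aiFWall works. The regular expressions are illustrative stand-ins: one crude prompt-injection phrase for the inbound side, and card-number-like and US SSN-like patterns for the outbound compliance side. Toxicity and bias detection would need a trained classifier and is not attempted here. The `guarded_call` function and the pattern tables are hypothetical.

```python
import re
from typing import Callable

# Illustrative inbound rule: a well-known prompt-injection phrasing.
INBOUND_PATTERNS = [re.compile(r"ignore (all )?previous instructions", re.I)]

# Illustrative outbound rules: data that compliance regimes restrict.
OUTBOUND_PATTERNS = {
    "PCI": re.compile(r"\b(?:\d[ -]?){13,16}\b"),  # card-number-like digit runs
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),   # US Social Security number format
}


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Filter the prompt on the way in and the response on the way out."""
    for pattern in INBOUND_PATTERNS:
        if pattern.search(prompt):
            return "[blocked: inbound policy]"  # the model is never called
    response = model(prompt)
    hits = [name for name, pattern in OUTBOUND_PATTERNS.items() if pattern.search(response)]
    if hits:
        return f"[withheld: outbound policy {', '.join(hits)}]"
    return response
```

Because the wrapper takes the model as a plain callable, the same inbound and outbound checks apply regardless of which LLM sits behind it. This mirrors the idea of a defense placed around the AI rather than inside it.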

It does not replace the network firewall, but protects agentic AI from threats that have already got past the network firewall. Stolen credentials have long been a primary attack tool. An attacker with valid credentials can often get through the network firewall, but will still be stopped from gaining access to the agentic system by aiFWall.

“Stolen credentials,” explains Vaidya, “might beat a network firewall and do bad things, since the user is validated by the credentials; but they would likely fail on the AI firewall, since the system has learned what the real user is likely to do, and learned what bad things look like.”

Vaidya does not claim that aiFWall will prevent all attacks everywhere, every time. “No vendor would say that they are 100% able to defend against any bad code. But we can probably do a better job than many of the solutions that are currently out there, because of the depth of the analysis that we provide, the context, and the tailoring of markers to the current threat and the current deployment of AI. So, we feel that we are ahead of the game. But again, there is no 100% security against bad code, as everyone already knows.”

Related: Rethinking Security for Agentic AI

Related: Google Fortifies Chrome Agentic AI Against Indirect Prompt Injection Attacks

Related: Microsoft Highlights Security Risks Introduced by Agentic AI Feature

Related: Follow Pragmatic Interventions to Keep Agentic AI in Check