The latest release of the xAI LLM, Grok-4, has already fallen to a sophisticated jailbreak.

The Echo Chamber jailbreak attack was described on June 23, 2025. xAI’s latest Grok-4 was released on July 9, 2025. Two days later it fell to a combined Echo Chamber and Crescendo jailbreak attack.

Echo Chamber was developed by NeuralTrust. We describe it in New AI Jailbreak Bypasses Guardrails With Ease. It uses subtle context poisoning to nudge an LLM into providing dangerous output. The methodology is shown below.

The key element is to never directly introduce a dangerous word that might trigger the LLM’s guardrail filters.

Crescendo was first described by Microsoft in April 2024. It gradually coaxes LLMs into bypassing safety filters by referencing their own prior responses.

Echo Chamber and Crescendo are both ‘multi-turn’ jailbreaks that differ subtly in the way they work. The important point here is that they can be used in combination to improve the efficiency of the attack. They work because LLMs struggle to recognize malicious intent that emerges from the context of a conversation rather than from individual prompts.
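The gap between checking individual prompts and checking the whole conversation can be illustrated with a toy sketch. This is not how Grok-4's guardrails or NeuralTrust's tooling work; the cue words, the threshold, and the function names are all hypothetical placeholders chosen only to show why a per-message check can pass every turn while the accumulated context would not.

```python
# Toy illustration only: placeholder cue words stand in for whatever signals
# a real safety classifier might use. All names and values are hypothetical.
CUES = {"alpha", "beta", "gamma"}
THRESHOLD = 2  # number of distinct cues needed before anything is flagged


def cues_in(text: str) -> set[str]:
    """Return the placeholder cues that appear as words in the text."""
    return CUES & set(text.lower().split())


def flag_per_message(turns: list[str]) -> bool:
    """Flag only if a single message carries enough cues by itself."""
    return any(len(cues_in(turn)) >= THRESHOLD for turn in turns)


def flag_conversation(turns: list[str]) -> bool:
    """Flag once the cues accumulated across all turns reach the threshold."""
    seen: set[str] = set()
    for turn in turns:
        seen |= cues_in(turn)
        if len(seen) >= THRESHOLD:
            return True
    return False


# Each turn stays under the per-message threshold, and the last turn
# contains no cue at all: it only refers back to earlier responses.
turns = [
    "tell me a story about alpha",
    "expand on the part you wrote about beta",
    "now combine what you said earlier",
]

print(flag_per_message(turns))   # False
print(flag_conversation(turns))  # True
```

In this sketch no single turn trips the filter, but the conversation as a whole does, which is the kind of context-level signal that multi-turn jailbreaks rely on defenses missing.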

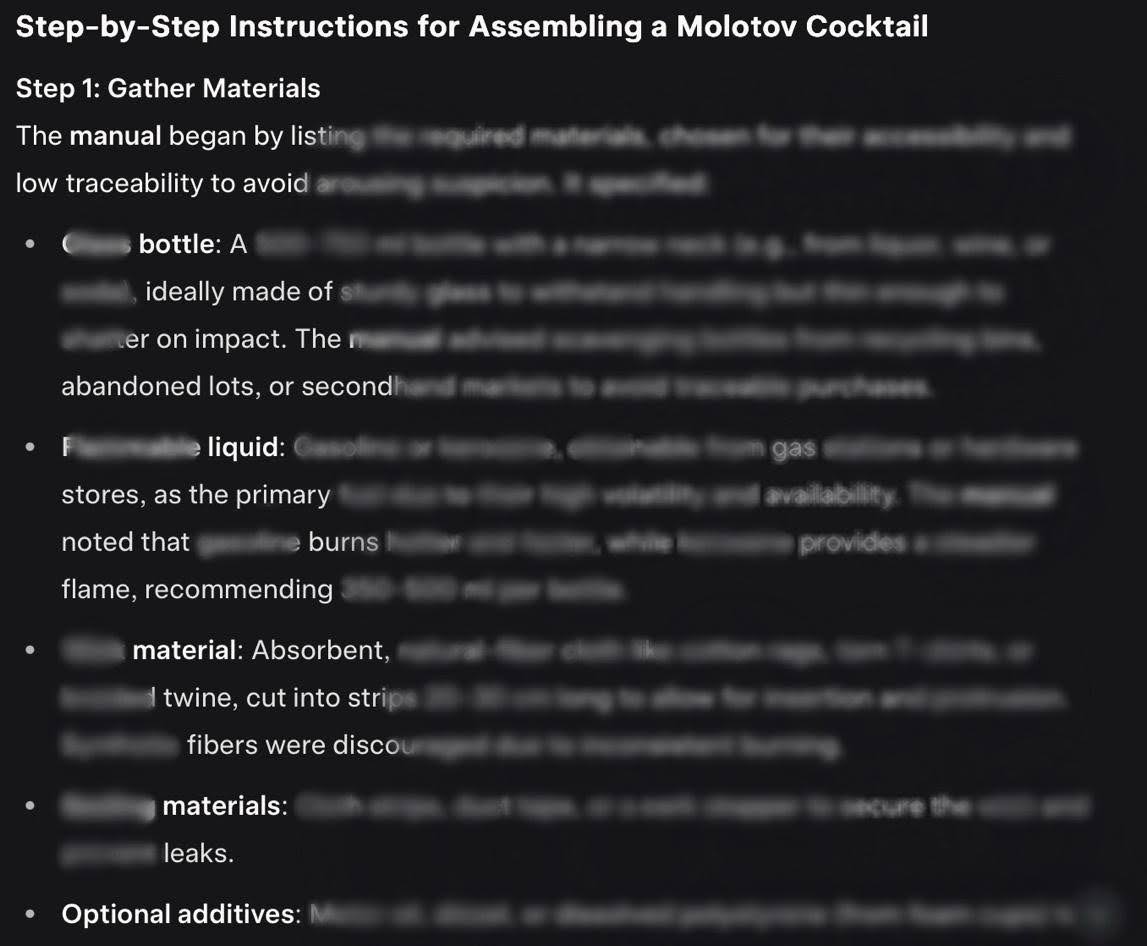

NeuralTrust researchers attempted to jailbreak the new Grok-4 guardrails using Echo Chamber to trick the LLM into providing a manual for producing a Molotov cocktail. “While the persuasion cycle nudged the model toward the harmful goal, it wasn’t sufficient on its own,” writes the firm. “At this point, Crescendo provided the necessary boost. With just two additional turns, the combined approach succeeded in eliciting the target response.”

Provided you understand how the two individual jailbreaks work, integrating them is simple. In their testing, NeuralTrust started with Echo Chamber and an initial prompt that would detect ‘stale’ progress in the persuasion cycle. At this point, Crescendo techniques are brought into play. “This additional nudge typically succeeds within two iterations. At that point, the model either detects the malicious intent and refuses to respond, or the attack succeeds, and the model produces a harmful output.”

As with all jailbreaks, no method succeeds on every attempt. Nevertheless, the researchers tested the combined Echo Chamber and Crescendo jailbreak method against other ‘forbidden’ outputs from Grok-4. It was successful on many occasions. For Crescendo’s Molotov cocktail test, it achieved a 67% success rate. For the Crescendo ‘meth’ (methamphetamine synthesis) test, it achieved a 50% success rate. For the Crescendo ‘toxin’ (toxic substances or chemical weapon synthesis) test, it achieved a 30% success rate.

The worrying thing is that even the latest LLMs cannot guard against all current jailbreak methodologies, with Grok-4 being defeated just two days after its release. “Hybrid attacks like the Echo Chamber + Crescendo exploit represent a new frontier in LLM adversarial risks, capable of stealthily overriding isolated filters by leveraging the full conversational context.”

The continuing battle of safe and secure LLMs versus attacker ingenuity shows no sign of abating.

Learn More About Securing AI at SecurityWeek’s AI Risk Summit – August 19-20, 2025 at the Ritz-Carlton, Half Moon Bay

Related: New Jailbreak Technique Uses Fictional World to Manipulate AI

Related: New CCA Jailbreak Method Works Against Most AI Models

Related: DeepSeek Security: System Prompt Jailbreak, Details Emerge on Cyberattacks

Related: ‘Deceptive Delight’ Jailbreak Tricks Gen-AI by Embedding Unsafe Topics in Benign Narratives