Ravie Lakshmanan | Jan 21, 2026 | Vulnerability / Artificial Intelligence

Security vulnerabilities have been discovered in the popular open-source artificial intelligence (AI) framework Chainlit that could allow attackers to steal sensitive data and, in turn, move laterally within a susceptible organization.

Zafran Security said the high-severity flaws, collectively dubbed ChainLeak, could be abused to leak cloud environment API keys, steal sensitive files, or perform server-side request forgery (SSRF) attacks against servers hosting AI applications.

Chainlit is a framework for building conversational chatbots. According to statistics shared by the Python Software Foundation, the package has been downloaded over 220,000 times in the past week and has attracted a total of 7.3 million downloads to date.

Details of the two vulnerabilities are as follows:

CVE-2026-22218 (CVSS score: 7.1) – An arbitrary file read vulnerability in the "/project/element" update flow that allows an authenticated attacker to pull the contents of any file readable by the service into their own session, owing to a lack of validation of user-controlled fields

CVE-2026-22219 (CVSS score: 8.3) – An SSRF vulnerability in the "/project/element" update flow, when configured with the SQLAlchemy data layer backend, that allows an attacker to make arbitrary HTTP requests to internal network services or cloud metadata endpoints from the Chainlit server and store the retrieved responses
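The root cause of CVE-2026-22218 is a user-controlled field being treated as a trusted file path. A common defense for this bug class is to resolve the supplied path and refuse anything that lands outside an expected base directory. The sketch below is a generic illustration of that check, not Chainlit's actual patch; the helper name `is_within` and the `/srv/uploads` directory are hypothetical.

```python
from pathlib import Path


def is_within(base_dir: str, user_path: str) -> bool:
    """Return True only if user_path resolves to a location inside base_dir.

    Hypothetical helper for illustration; not taken from Chainlit.
    """
    base = Path(base_dir).resolve()
    # Joining an absolute user_path (e.g. "/proc/self/environ") discards base
    # entirely, and ".." segments can climb out of it. resolve() normalizes
    # both cases and follows symlinks before the containment test.
    candidate = (base / user_path).resolve()
    return candidate.is_relative_to(base)  # Path.is_relative_to needs Python 3.9+
```

With `/srv/uploads` as the base, `is_within("/srv/uploads", "report.pdf")` passes, while `"/proc/self/environ"` and `"../../etc/passwd"` are both rejected.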

"The two Chainlit vulnerabilities can be combined in multiple ways to leak sensitive data, escalate privileges, and move laterally within the system," Zafran researchers Gal Zaban and Ido Shani said. "Once an attacker gains arbitrary file read access on the server, the AI application's security quickly begins to collapse. What initially appears to be a contained flaw becomes direct access to the system's most sensitive secrets and internal state."

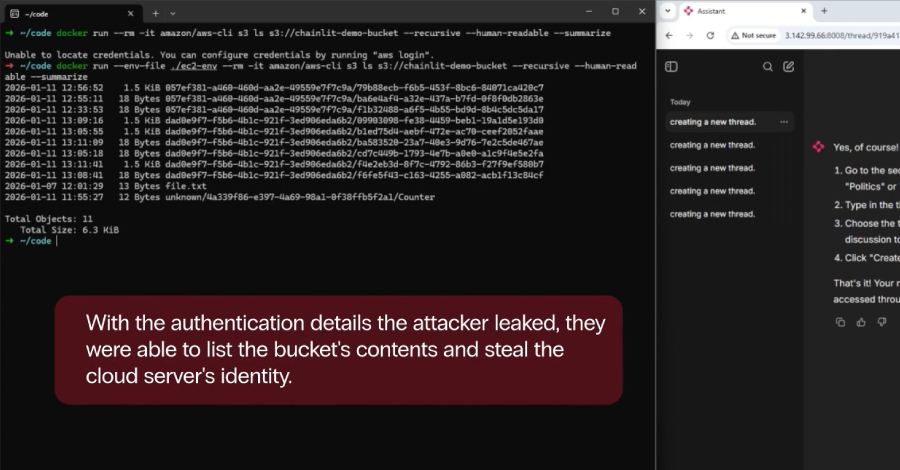

For instance, an attacker can weaponize CVE-2026-22218 to read "/proc/self/environ," allowing them to glean valuable information such as API keys, credentials, and internal file paths that could be used to burrow deeper into the compromised network and even gain access to the application source code. Alternatively, it can be used to leak database files if the setup uses SQLAlchemy with an SQLite backend as its data layer.
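Reading "/proc/self/environ" is so damaging because, on Linux, that file holds the process's entire environment as NUL-separated `KEY=VALUE` records, which is exactly where API keys and credentials are often injected. The sketch below parses that format and applies a naive name-based filter; the `SECRET_MARKERS` list is a hypothetical heuristic, useful for a defender auditing what a service process exposes rather than a complete secret detector.

```python
SECRET_MARKERS = ("KEY", "SECRET", "TOKEN", "PASSWORD")  # hypothetical heuristic


def parse_environ(raw: bytes) -> dict[str, str]:
    """Parse the NUL-separated KEY=VALUE records of /proc/<pid>/environ."""
    env: dict[str, str] = {}
    for record in raw.split(b"\0"):
        if not record:
            continue  # the data ends with a trailing NUL, which yields an empty record
        key, sep, value = record.partition(b"=")  # split on the first '=' only
        if sep:  # skip malformed records that contain no '='
            env[key.decode(errors="replace")] = value.decode(errors="replace")
    return env


def looks_secret(name: str) -> bool:
    """Flag variable names that commonly carry credentials."""
    return any(marker in name.upper() for marker in SECRET_MARKERS)
```

On a Linux host, `parse_environ(open("/proc/self/environ", "rb").read())` shows what the current process would hand to anyone able to read that file.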

Following responsible disclosure on November 23, 2025, both vulnerabilities were addressed by Chainlit in version 2.9.4, released on December 24, 2025.
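Teams auditing their deployments can check whether the installed package is at or above 2.9.4. The sketch below uses the standard library's `importlib.metadata.version`; the helper names are hypothetical, and the simple numeric comparison ignores pre-release suffixes (so "2.9.4rc1" counts as 2.9.4), whereas `packaging.version.Version` implements full PEP 440 ordering.

```python
import re
from importlib.metadata import PackageNotFoundError, version

FIXED_IN = (2, 9, 4)  # first Chainlit release containing both ChainLeak fixes


def release_tuple(ver: str) -> tuple[int, ...]:
    """Extract the leading numeric release segment, e.g. '2.9.4rc1' -> (2, 9, 4).

    Simplification: pre-release and post-release suffixes are ignored.
    """
    match = re.match(r"\d+(?:\.\d+)*", ver)
    if match is None:
        raise ValueError(f"unrecognized version string: {ver!r}")
    return tuple(int(part) for part in match.group().split("."))


def is_patched(ver: str) -> bool:
    # Tuple comparison: (2, 9) < (2, 9, 4) < (2, 10, 0)
    return release_tuple(ver) >= FIXED_IN


def chainlit_status() -> str:
    """Report on the chainlit distribution installed in this environment."""
    try:
        ver = version("chainlit")
    except PackageNotFoundError:
        return "chainlit is not installed"
    verdict = "patched" if is_patched(ver) else "vulnerable, upgrade to 2.9.4 or later"
    return f"chainlit {ver}: {verdict}"
```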

"As organizations rapidly adopt AI frameworks and third-party components, long-standing classes of software vulnerabilities are being embedded directly into AI infrastructure," Zafran said. "These frameworks introduce new and often poorly understood attack surfaces, where well-known vulnerability classes can directly compromise AI-powered systems."

Flaw in Microsoft MarkItDown MCP Server

The disclosure comes as BlueRock detailed a vulnerability in Microsoft's MarkItDown Model Context Protocol (MCP) server, dubbed MCP fURI, that enables arbitrary calling of URI resources, exposing organizations to privilege escalation, SSRF, and data leakage attacks. The shortcoming affects the server when it runs on an Amazon Web Services (AWS) EC2 instance using IMDSv1.

"This vulnerability allows an attacker to execute the Markitdown MCP tool convert_to_markdown to call an arbitrary uniform resource identifier (URI)," BlueRock said. "The lack of any boundaries on the URI allows any user, agent, or attacker calling the tool to access any HTTP or file resource."
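The "file resource" half of that problem comes down to the tool accepting any URI scheme. A first boundary is to restrict schemes before the URI ever reaches the converter. The sketch below is a hypothetical pre-check, not MarkItDown's code, and it assumes the tool never legitimately needs `file:` or `data:` URIs; on its own it does nothing to stop HTTP requests aimed at internal addresses such as the instance metadata service.

```python
from urllib.parse import urlparse

# Assumption: the conversion tool only ever needs to fetch remote web documents.
ALLOWED_SCHEMES = {"http", "https"}


def is_allowed_uri(uri: str) -> bool:
    """Hypothetical pre-check run before a URI is passed to a conversion tool."""
    parsed = urlparse(uri)
    if parsed.scheme.lower() not in ALLOWED_SCHEMES:
        return False  # rejects file://, data:, and bare paths (empty scheme)
    return parsed.hostname is not None  # an http(s) URI must name a host
```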

"When providing a URI to the Markitdown MCP server, this can be used to query the instance metadata of the server. A user can then obtain credentials to the instance if there is a role associated, giving you access to the AWS account, including the access and secret keys."
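The reason IMDSv1 matters is that it serves role credentials in response to a single unauthenticated GET to the link-local address 169.254.169.254, which is exactly the kind of request a "fetch this URI" tool will make. IMDSv2 instead requires a PUT carrying the `X-aws-ec2-metadata-token-ttl-seconds` header to obtain a session token, then the `X-aws-ec2-metadata-token` header on every read; a simple URL-fetch SSRF generally cannot choose the method or add headers. The sketch below only constructs the requests (nothing is sent) to make the difference visible; the role name is a placeholder.

```python
import urllib.request

IMDS = "http://169.254.169.254"
CREDS_PATH = "/latest/meta-data/iam/security-credentials/"


def imdsv1_credentials_request(role: str) -> urllib.request.Request:
    # IMDSv1: one plain GET returns the role's temporary credentials.
    return urllib.request.Request(IMDS + CREDS_PATH + role, method="GET")


def imdsv2_token_request(ttl_seconds: int = 21600) -> urllib.request.Request:
    # IMDSv2 step 1: a PUT with a TTL header (max 21600 s) yields a session token.
    return urllib.request.Request(
        IMDS + "/latest/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def imdsv2_credentials_request(role: str, token: str) -> urllib.request.Request:
    # IMDSv2 step 2: the token header must accompany every metadata read.
    return urllib.request.Request(
        IMDS + CREDS_PATH + role,
        headers={"X-aws-ec2-metadata-token": token},
    )
```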

The agentic AI security company said its analysis of more than 7,000 MCP servers found that over 36.7% of them are likely exposed to similar SSRF vulnerabilities. To mitigate the risk, it is advised to use IMDSv2 to guard against SSRF attacks, implement private IP blocking, restrict access to metadata services, and create an allowlist to prevent data exfiltration.
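Two of those recommendations, a host allowlist and private IP blocking, can be combined in an application-level check that runs before any outbound fetch. The sketch below is one way to do it under stated assumptions: `ALLOWED_HOSTS` is a hypothetical allowlist, and the `resolve` parameter defaults to `socket.getaddrinfo` but can be swapped for a stub in tests. It relies on `ipaddress`'s `is_global`, which is False for RFC 1918, loopback, and link-local ranges (169.254.0.0/16 covers the EC2 metadata endpoint).

```python
import ipaddress
import socket
from urllib.parse import urlparse

ALLOWED_HOSTS = {"docs.example.com"}  # hypothetical allowlist of fetchable hosts


def is_public_address(addr: str) -> bool:
    # False for private, loopback, link-local, and other reserved ranges.
    return ipaddress.ip_address(addr).is_global


def is_allowed_fetch(url: str, resolve=socket.getaddrinfo) -> bool:
    """Allow a fetch only to an allowlisted host whose addresses are all public."""
    parsed = urlparse(url)
    host = parsed.hostname  # already lowercased by urlparse
    if parsed.scheme not in {"http", "https"} or host not in ALLOWED_HOSTS:
        return False
    try:
        port = parsed.port or (443 if parsed.scheme == "https" else 80)
        infos = resolve(host, port, proto=socket.IPPROTO_TCP)
        addresses = {info[4][0] for info in infos}  # sockaddr[0] is the IP string
        # A single private answer is enough to refuse the request.
        return bool(addresses) and all(is_public_address(a) for a in addresses)
    except (ValueError, OSError):  # invalid port, DNS failure, unparsable address
        return False
```

A check like this runs before the connection is made, so the HTTP client should connect to the vetted address and re-check any redirects; otherwise DNS rebinding or a redirect can still reach an internal target. Enforcing IMDSv2 on the instance remains the host-level fix.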