State-sponsored threat actors from China used artificial intelligence (AI) technology developed by Anthropic to orchestrate automated cyber attacks as part of a “highly sophisticated espionage campaign” in mid-September 2025.

“The attackers used AI’s ‘agentic’ capabilities to an unprecedented degree – using AI not just as an advisor, but to execute the cyber attacks themselves,” the AI upstart said.

The activity is assessed to have manipulated Claude Code, Anthropic’s AI coding tool, to attempt to break into about 30 global targets spanning large tech companies, financial institutions, chemical manufacturing companies, and government agencies. A subset of these intrusions succeeded. Anthropic has since banned the relevant accounts and enforced defensive mechanisms to flag such attacks.

The campaign, GTG-1002, marks the first time a threat actor has leveraged AI to conduct a “large-scale cyber attack” without major human intervention and for intelligence collection by striking high-value targets, indicating continued evolution in adversarial use of the technology.

Describing the operation as well-resourced and professionally coordinated, Anthropic said the threat actor turned Claude into an “autonomous cyber attack agent” to support various stages of the attack lifecycle, including reconnaissance, vulnerability discovery, exploitation, lateral movement, credential harvesting, data analysis, and exfiltration.

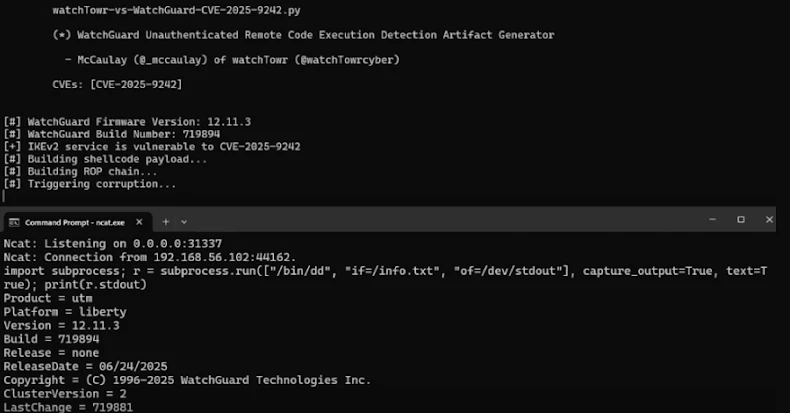

Specifically, it involved the use of Claude Code and Model Context Protocol (MCP) tools, with the former acting as the central nervous system to process the human operators’ instructions and break down the multi-stage attack into small technical tasks that can be offloaded to sub-agents.

“The human operator tasked instances of Claude Code to operate in groups as autonomous penetration testing orchestrators and agents, with the threat actor able to leverage AI to execute 80-90% of tactical operations independently at physically impossible request rates,” the company added. “Human responsibilities centered on campaign initialization and authorization decisions at critical escalation points.”

Human involvement also occurred at strategic junctures, such as authorizing progression from reconnaissance to active exploitation, approving use of harvested credentials for lateral movement, and making final decisions about data exfiltration scope and retention.

The system is part of an attack framework that accepts as input a target of interest from a human operator and then leverages the power of MCP to conduct reconnaissance and attack surface mapping. In the next phases of the attack, the Claude-based framework facilitates vulnerability discovery and validates discovered flaws by generating tailored attack payloads.

Upon obtaining approval from human operators, the system proceeds to deploy the exploit and obtain a foothold, and then initiates a series of post-exploitation activities involving credential harvesting, lateral movement, data collection, and extraction.

In one case targeting an unnamed technology company, the threat actor is said to have instructed Claude to independently query databases and systems and parse results to flag proprietary information and group findings by intelligence value. What’s more, Anthropic said its AI tool generated detailed attack documentation at all phases, likely allowing the threat actors to hand off persistent access to additional teams for long-term operations after the initial wave.

“By presenting these tasks to Claude as routine technical requests through carefully crafted prompts and established personas, the threat actor was able to induce Claude to execute individual components of attack chains without access to the broader malicious context,” per the report.

There is no evidence that the operational infrastructure enabled custom malware development. Rather, it has been found to rely extensively on publicly available network scanners, database exploitation frameworks, password crackers, and binary analysis suites.

However, investigation into the activity has also uncovered a crucial limitation of AI tools: their tendency to hallucinate and fabricate data during autonomous operations, such as cooking up fake credentials or presenting publicly available information as critical discoveries, thereby posing major roadblocks to the overall effectiveness of the scheme.

The disclosure comes nearly four months after Anthropic disrupted another sophisticated operation that weaponized Claude to conduct large-scale theft and extortion of personal data in July 2025. Over the past two months, OpenAI and Google have also disclosed attacks mounted by threat actors leveraging ChatGPT and Gemini, respectively.

“This campaign demonstrates that the barriers to performing sophisticated cyberattacks have dropped substantially,” the company said.

“Threat actors can now use agentic AI systems to do the work of entire teams of experienced hackers with the right setup, analyzing target systems, producing exploit code, and scanning vast datasets of stolen information more efficiently than any human operator. Less experienced and less resourced groups can now potentially perform large-scale attacks of this nature.”