Jan 13, 2026 · The Hacker News · Artificial Intelligence / Automation Security

AI agents are no longer just writing code. They're executing it.

Tools like Copilot, Claude Code, and Codex can now build, test, and deploy software end-to-end in minutes. That speed is reshaping engineering, but it's also creating a security gap most teams don't see until something breaks.

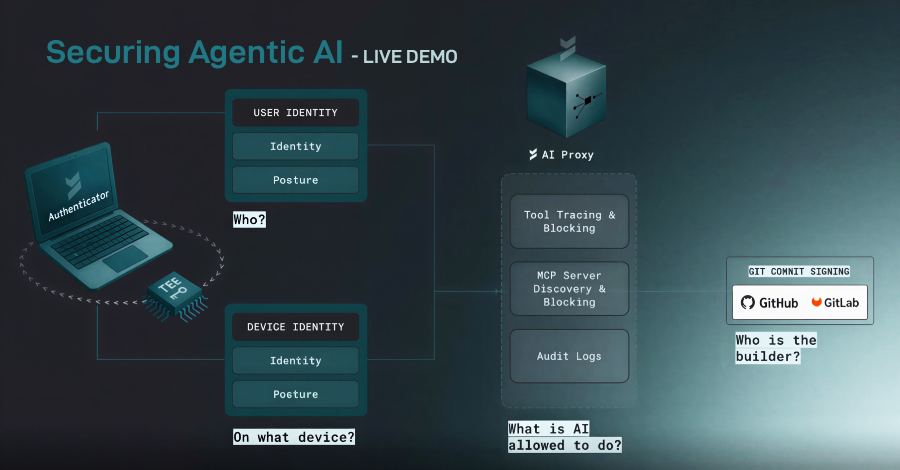

Behind every agentic workflow sits a layer few organizations are actively securing: Model Context Protocol (MCP) servers. These systems quietly decide what an AI agent can run, which tools it can call, which APIs it can access, and what infrastructure it can touch. Once that control plane is compromised or misconfigured, the agent doesn't just make mistakes; it acts with authority.
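To make the "control plane" idea concrete, here is a minimal sketch of a deny-by-default gate that sits between an agent and the tools the MCP layer exposes. Everything in it (`ToolCall`, `AgentPolicy`, `dispatch`, the agent and target names) is hypothetical and for illustration only; it is not part of the MCP specification or any vendor's API. The point it shows: a call runs only if that agent, that tool, and that target were explicitly granted, and every decision is logged before anything executes.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToolCall:
    """A request from an agent to invoke a tool (hypothetical shape)."""
    agent_id: str
    tool: str
    target: str  # the API, repo, or host the tool would touch


@dataclass
class AgentPolicy:
    # agent_id -> tool name -> set of targets that tool may touch
    grants: dict[str, dict[str, set[str]]] = field(default_factory=dict)

    def allow(self, agent_id: str, tool: str, *targets: str) -> None:
        """Explicitly grant an agent the use of a tool on specific targets."""
        self.grants.setdefault(agent_id, {}).setdefault(tool, set()).update(targets)

    def permits(self, call: ToolCall) -> bool:
        # Deny by default: an unknown agent, unknown tool, or unlisted target all fail.
        tools = self.grants.get(call.agent_id, {})
        return call.target in tools.get(call.tool, set())


def dispatch(policy: AgentPolicy, call: ToolCall, audit_log: list[str]) -> bool:
    """Check a call against policy and record the decision before anything runs."""
    decision = policy.permits(call)
    verdict = "ALLOW" if decision else "DENY"
    audit_log.append(f"{verdict} {call.agent_id} {call.tool} {call.target}")
    return decision


if __name__ == "__main__":
    policy = AgentPolicy()
    policy.allow("ci-agent", "run_tests", "repo:web-app")
    log: list[str] = []
    print(dispatch(policy, ToolCall("ci-agent", "run_tests", "repo:web-app"), log))  # True
    print(dispatch(policy, ToolCall("ci-agent", "deploy", "prod-cluster"), log))     # False
    print("\n".join(log))
```

Keeping the grant keyed on agent, tool, and target (rather than tool alone) is what stops a "run tests" permission from quietly becoming a "touch production" permission.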

Ask the teams affected by CVE-2025-6514. One flaw turned a trusted OAuth proxy used by more than 500,000 developers into a remote code execution path. No exotic exploit chain. No noisy breach. Just automation doing exactly what it was allowed to do, at scale. That incident made one thing clear: if an AI agent can execute commands, it can also execute attacks.

This webinar is for teams who want to move fast without giving up control.

Secure your spot for the live session ➜

Led by the author of the OpenID whitepaper "Identity Management for Agentic AI," this session goes straight to the core risks security teams are now inheriting from agentic AI adoption. You'll see how MCP servers actually work in real environments, where shadow API keys appear, how permissions quietly sprawl, and why traditional identity and access models break down when agents act on your behalf.

You'll learn:

What MCP servers are and why they matter more than the model itself

How malicious or compromised MCP servers turn automation into an attack surface

Where shadow API keys come from, and how to detect and eliminate them

How to audit agent actions and enforce policy before deployment

Practical controls to secure agentic AI without slowing development
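The shadow-key item above can be illustrated with a small scanner that flags credential-like strings in the config files where agent and MCP setups often keep them. This is a hedged sketch, not the method the session teaches: the `AKIA` prefix (AWS access key IDs) and `ghp_` prefix (GitHub classic personal access tokens) are real, documented formats, but the `generic_assignment` rule is a heuristic of our own, and `scan_text`, `scan_tree`, and the file-suffix list are hypothetical names and choices. Production secret scanners use far larger, tuned rule sets.

```python
import re
from dataclasses import dataclass
from pathlib import Path

# Illustrative patterns only; not an exhaustive or authoritative rule set.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    # Heuristic: a key-ish name assigned a long opaque value.
    "generic_assignment": re.compile(
        r"(?i)\b(api[_-]?key|secret|token)\b\s*[:=]\s*['\"]?([A-Za-z0-9_\-]{16,})"
    ),
}


@dataclass(frozen=True)
class Finding:
    source: str
    line: int
    rule: str


def scan_text(source: str, text: str) -> list[Finding]:
    """Return one finding per (line, rule) match in the given text."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append(Finding(source, lineno, rule))
    return findings


def scan_tree(root: str, suffixes=(".env", ".json", ".yaml", ".yml", ".toml")) -> list[Finding]:
    """Walk a directory and scan config-like files (including bare `.env` files)."""
    results = []
    for path in Path(root).rglob("*"):
        # A bare ".env" file has an empty suffix, so match it by name as well.
        if path.is_file() and (path.suffix in suffixes or path.name == ".env"):
            text = path.read_text(encoding="utf-8", errors="ignore")
            results.extend(scan_text(str(path), text))
    return results


if __name__ == "__main__":
    for f in scan_tree("."):
        print(f"{f.source}:{f.line}: possible secret ({f.rule})")
```

Detection is only half of the item: each finding still has to be traced to an owner and replaced with a scoped, revocable credential rather than simply deleted.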

Agentic AI is already inside your pipeline. The only question is whether you can see what it's doing, and stop it when it goes too far.

Register for the live webinar and regain control of your AI stack before the next incident does it for you.

Register for the Webinar ➜

This article is a contributed piece from one of our valued partners.