Oct 31, 2025 | Ravie Lakshmanan | Artificial Intelligence / Code Security

OpenAI has announced the launch of an "agentic security researcher" that is powered by its GPT-5 large language model (LLM) and is programmed to emulate a human expert capable of scanning, understanding, and patching code.

The artificial intelligence (AI) company said the autonomous agent, called Aardvark, is designed to help developers and security teams flag and fix security vulnerabilities at scale. It is currently available in private beta.

"Aardvark continuously analyzes source code repositories to identify vulnerabilities, assess exploitability, prioritize severity, and propose targeted patches," OpenAI noted.

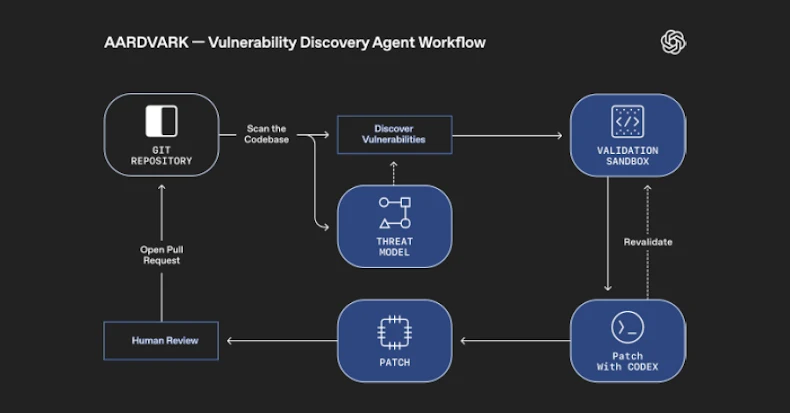

It works by embedding itself into the software development pipeline, monitoring commits and changes to codebases, detecting security issues and how they might be exploited, and proposing fixes to address them using LLM-based reasoning and tool use.

Powering the agent is GPT-5, which OpenAI released in August 2025. The company describes it as a "smart, efficient model" that features deeper reasoning capabilities, courtesy of GPT-5 thinking, and a "real-time router" that decides the right model to use based on conversation type, complexity, and user intent.

Aardvark, OpenAI added, analyzes a project's codebase to produce a threat model that it thinks best represents the project's security objectives and design. With this contextual foundation, the agent then scans the repository's history to identify existing issues, and detects new ones by scrutinizing incoming changes to the repository.

Once a potential security flaw is found, the agent attempts to trigger it in an isolated, sandboxed environment to confirm its exploitability, and then leverages OpenAI Codex, the company's coding agent, to produce a patch that can be reviewed by a human analyst.
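To make that flow concrete, the following is a minimal Python sketch of how a per-commit triage loop of this kind could be wired: detect candidate flaws, try to confirm each one in isolation, draft a patch only for confirmed findings, and hold every patch for human review. Everything here is hypothetical: `triage_commit`, `Finding`, and the `toy_*` functions are illustrative names, not Aardvark or Codex APIs, and simple string checks stand in for the LLM reasoning and sandboxed exploit attempts OpenAI describes.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Finding:
    """A candidate vulnerability tied to one commit (hypothetical structure)."""
    commit_id: str
    description: str
    patch: Optional[str] = None
    status: str = "candidate"


def triage_commit(
    commit_id: str,
    diff: str,
    detect: Callable[[str], List[str]],
    reproduce: Callable[[str, str], bool],
    propose_patch: Callable[[str, str], str],
) -> List[Finding]:
    """Pass one commit through detect -> validate -> patch -> human review."""
    surfaced = []
    for description in detect(diff):
        finding = Finding(commit_id, description)
        # Drop candidates that could not be triggered in the isolated check.
        if not reproduce(diff, description):
            continue
        finding.patch = propose_patch(diff, description)
        # Nothing is merged automatically; a human analyst reviews the patch.
        finding.status = "awaiting_human_review"
        surfaced.append(finding)
    return surfaced


# Toy stand-ins (hypothetical) for the model-driven and sandboxed stages.
def toy_detect(diff: str) -> List[str]:
    return ["possible code injection via eval()"] if "eval(" in diff else []


def toy_reproduce(diff: str, description: str) -> bool:
    # Pretend the flaw is only exploitable when attacker-controlled input reaches it.
    return "user_input" in diff


def toy_patch(diff: str, description: str) -> str:
    # ast.literal_eval only parses Python literals, so it cannot execute code.
    return diff.replace("eval(", "ast.literal_eval(")


if __name__ == "__main__":
    for f in triage_commit("abc123", "+ result = eval(user_input)",
                           toy_detect, toy_reproduce, toy_patch):
        print(f.status, f.patch)
        # prints: awaiting_human_review + result = ast.literal_eval(user_input)
```

Passing each stage in as a callable keeps the gating rules (no confirmed exploit, no patch; no human sign-off, no merge) separate from whatever model or sandbox actually performs each step.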

OpenAI said it has been running the agent across its internal codebases and those of some of its external alpha partners, and that it has helped identify at least 10 CVEs in open-source projects.

The AI upstart is far from the only company to trial AI agents for automated vulnerability discovery and patching. Earlier this month, Google announced CodeMender, which it said detects, patches, and rewrites vulnerable code to prevent future exploits. The tech giant also noted that it intends to work with maintainers of critical open-source projects to integrate CodeMender-generated patches to help keep those projects secure.

Seen in that light, Aardvark, CodeMender, and XBOW are being positioned as tools for continuous code analysis, exploit validation, and patch generation. The launch also comes close on the heels of OpenAI's release of the gpt-oss-safeguard models, which are fine-tuned for safety classification tasks.

"Aardvark represents a new defender-first model: an agentic security researcher that partners with teams by delivering continuous protection as code evolves," OpenAI said. "By catching vulnerabilities early, validating real-world exploitability, and offering clear fixes, Aardvark can strengthen security without slowing innovation. We believe in expanding access to security expertise."