Microsoft has disclosed details of a novel side-channel attack targeting remote language models that, under certain circumstances, could allow a passive adversary able to observe network traffic to glean details about the topics of model conversations despite encryption protections.

This leakage of data exchanged between humans and streaming-mode language models could pose serious risks to the privacy of user and enterprise communications, the company noted. The attack has been codenamed Whisper Leak.

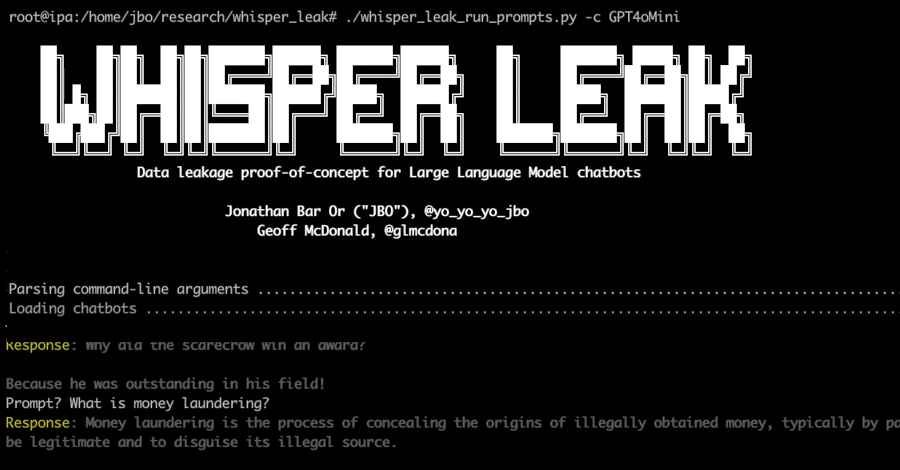

“Cyber attackers in a position to observe the encrypted traffic (for example, a nation-state actor at the internet service provider layer, someone on the local network, or someone connected to the same Wi-Fi router) could use this cyber attack to infer if the user’s prompt is on a specific topic,” security researchers Jonathan Bar Or and Geoff McDonald, along with the Microsoft Defender Security Research Team, said.

Put differently, the attack allows an attacker to observe encrypted TLS traffic between a user and an LLM service, extract packet size and timing sequences, and use trained classifiers to infer whether the conversation topic matches a sensitive target category.
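The "extract packet size and timing sequences" step can be pictured with a minimal sketch. It assumes the observer already has a list of (timestamp, ciphertext length) pairs for the server-to-client records of one response, for example exported from a packet capture; the `Packet` type and `extract_features` function are hypothetical names for illustration, not Microsoft's tooling. Note that nothing here touches plaintext: only sizes and arrival times are used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """One encrypted record seen on the wire (hypothetical capture format)."""
    timestamp: float  # seconds since the start of the capture
    size: int         # ciphertext length in bytes; the content stays encrypted


def extract_features(packets: list[Packet]) -> tuple[list[int], list[float]]:
    """Return the packet-size sequence and the inter-arrival times between consecutive packets."""
    ordered = sorted(packets, key=lambda p: p.timestamp)
    sizes = [p.size for p in ordered]
    gaps = [later.timestamp - earlier.timestamp for earlier, later in zip(ordered, ordered[1:])]
    return sizes, gaps


if __name__ == "__main__":
    capture = [Packet(0.00, 120), Packet(0.05, 98), Packet(0.12, 135), Packet(0.20, 101)]
    sizes, gaps = extract_features(capture)
    print(sizes)                        # → [120, 98, 135, 101]
    print([round(g, 2) for g in gaps])  # → [0.05, 0.07, 0.08]
```

These two sequences are the kind of input a topic classifier would consume.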

Model streaming in large language models (LLMs) is a technique that lets clients receive the response incrementally as the model generates it, instead of having to wait for the entire output to be computed. It is a critical feedback mechanism, as certain responses can take time depending on the complexity of the prompt or task.

The latest technique demonstrated by Microsoft is significant, not least because it works even though communications with artificial intelligence (AI) chatbots are encrypted with HTTPS, which keeps the contents of the exchange confidential and protects them from tampering but does not conceal the size or timing of the encrypted packets.

Many side-channel attacks have been devised against LLMs in recent years, including inferring the length of individual plaintext tokens from the size of encrypted packets in streaming model responses, and exploiting timing differences caused by the caching of LLM inferences to steal inputs (aka InputSnatch).
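Why packet sizes leak token lengths can be shown with a small size model. This sketch assumes each token is sent in its own TLS 1.3 record with no record padding, so the ciphertext is the plaintext plus a constant overhead (5-byte record header, 1-byte inner content type, 16-byte AEAD tag). The JSON envelope is a hypothetical wire format invented for the example, and JSON escaping of tokens is ignored.

```python
# Hypothetical wire format: each streamed token is wrapped in a fixed server-sent-event envelope.
ENVELOPE_PREFIX = b'data: {"delta": "'
ENVELOPE_SUFFIX = b'"}\n\n'
# Assumed TLS 1.3 AEAD overhead per record: 5-byte header + 1-byte inner content type + 16-byte tag.
RECORD_OVERHEAD = 5 + 1 + 16


def encrypted_record_size(token: str) -> int:
    """Size an observer would see for one token, assuming one token per record and no padding."""
    plaintext = ENVELOPE_PREFIX + token.encode("utf-8") + ENVELOPE_SUFFIX
    return len(plaintext) + RECORD_OVERHEAD


def infer_token_length(record_size: int) -> int:
    """Strip the known constant overhead to recover the token's length in UTF-8 bytes."""
    return record_size - RECORD_OVERHEAD - len(ENVELOPE_PREFIX) - len(ENVELOPE_SUFFIX)


if __name__ == "__main__":
    tokens = ["How", " do", " I", " file", " taxes"]
    observed = [encrypted_record_size(t) for t in tokens]
    print(observed)
    print([infer_token_length(s) for s in observed])  # → [3, 3, 2, 5, 6]
```

Once the overhead is known, subtraction alone recovers every token's length; when providers batch several tokens per record, only the group lengths survive, which is the harder case Whisper Leak targets.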

Whisper Leak builds upon these findings to explore the possibility that “the sequence of encrypted packet sizes and inter-arrival times during a streaming language model response contains enough information to classify the topic of the initial prompt, even in the cases where responses are streamed in groupings of tokens,” per Microsoft.

To test this hypothesis, the Windows maker said it trained a binary classifier as a proof of concept capable of distinguishing prompts on a specific topic from everything else (i.e., noise), using three different machine learning models: LightGBM, Bi-LSTM, and BERT.
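The shape of such a binary classifier can be sketched without any ML library. This is a toy stand-in, not Microsoft's setup: it swaps LightGBM, Bi-LSTM, and BERT for plain logistic regression trained by gradient descent on four summary features, and the `synthetic_trace` generator invents traffic in which target-topic responses simply use larger records. The resulting accuracy says nothing about real-world performance.

```python
import math
import random
from statistics import fmean, pstdev


def summarize(sizes: list[int], gaps: list[float]) -> list[float]:
    """Collapse a variable-length trace into a fixed-length feature vector."""
    return [fmean(sizes), pstdev(sizes), fmean(gaps), float(len(sizes))]


def _sigmoid(t: float) -> float:
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class LogisticTopicClassifier:
    """Binary classifier: 1 = target topic, 0 = everything else ("noise")."""

    def __init__(self, lr: float = 0.1, epochs: int = 500):
        self.lr = lr
        self.epochs = epochs

    def _standardize(self, x: list[float]) -> list[float]:
        return [(v - m) / s for v, m, s in zip(x, self.mu, self.sigma)]

    def _logit(self, z: list[float]) -> float:
        return self.b + sum(w * v for w, v in zip(self.w, z))

    def fit(self, X: list[list[float]], y: list[int]) -> "LogisticTopicClassifier":
        cols = list(zip(*X))
        self.mu = [fmean(c) for c in cols]
        self.sigma = [pstdev(c) or 1.0 for c in cols]  # guard against constant features
        Z = [self._standardize(x) for x in X]
        self.w, self.b = [0.0] * len(Z[0]), 0.0
        n = len(Z)
        for _ in range(self.epochs):  # full-batch gradient descent on log-loss
            grad_w, grad_b = [0.0] * len(self.w), 0.0
            for z, label in zip(Z, y):
                err = _sigmoid(self._logit(z)) - label
                grad_w = [g + err * v for g, v in zip(grad_w, z)]
                grad_b += err
            self.w = [w - self.lr * g / n for w, g in zip(self.w, grad_w)]
            self.b -= self.lr * grad_b / n
        return self

    def predict_proba(self, x: list[float]) -> float:
        return _sigmoid(self._logit(self._standardize(x)))


def synthetic_trace(rng: random.Random, is_target: bool) -> tuple[list[int], list[float]]:
    """Invented traffic: target-topic responses are simply assumed to use larger records."""
    n = rng.randint(20, 40)
    mean_size = 150 if is_target else 100
    sizes = [max(1, round(rng.gauss(mean_size, 10))) for _ in range(n)]
    gaps = [abs(rng.gauss(0.05, 0.01)) for _ in range(n - 1)]
    return sizes, gaps


def make_dataset(rng: random.Random, k: int) -> tuple[list[list[float]], list[int]]:
    X, y = [], []
    for i in range(k):
        label = i % 2  # balanced classes
        X.append(summarize(*synthetic_trace(rng, label == 1)))
        y.append(label)
    return X, y


def toy_accuracy(seed: int = 0) -> float:
    rng = random.Random(seed)
    X_train, y_train = make_dataset(rng, 200)
    X_test, y_test = make_dataset(rng, 100)
    clf = LogisticTopicClassifier().fit(X_train, y_train)
    correct = sum((clf.predict_proba(x) >= 0.5) == (label == 1) for x, label in zip(X_test, y_test))
    return correct / len(y_test)


if __name__ == "__main__":
    print(f"accuracy on synthetic held-out traces: {toy_accuracy():.2f}")
```

The researchers' sequence models (Bi-LSTM, BERT) consume the raw size and timing sequences rather than four summary statistics, which lets them pick up patterns a fixed feature vector would miss.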

The result’s that many fashions from Mistral, xAI, DeepSeek, and OpenAI have been discovered to attain scores above 98%, thereby making it doable for an attacker monitoring random conversations with the chatbots to reliably flag that particular matter.

“If a government agency or internet service provider were monitoring traffic to a popular AI chatbot, they could reliably identify users asking questions about specific sensitive topics – whether that’s money laundering, political dissent, or other monitored subjects – even though all the traffic is encrypted,” Microsoft said.

Whisper Leak assault pipeline

To make matters worse, the researchers found that the effectiveness of Whisper Leak can improve as the attacker collects more training samples over time, turning it into a practical threat. Following responsible disclosure, OpenAI, Mistral, Microsoft, and xAI have all deployed mitigations to counter the risk.

“Combined with more sophisticated attack models and the richer patterns available in multi-turn conversations or multiple conversations from the same user, this means a cyberattacker with patience and resources could achieve higher success rates than our initial results suggest,” it added.

One effective countermeasure devised by OpenAI, Microsoft, and Mistral involves adding a “random sequence of text of variable length” to each response, which in turn masks the length of each token and renders the side channel moot.
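A padding countermeasure of this kind can be sketched as follows, assuming the server attaches random-length filler to every streamed chunk and the client discards it. The `pad` field name, the 32-character maximum, and the JSON chunk format are illustrative assumptions; the schemes the providers actually deployed may differ.

```python
import json
import secrets
import string

PAD_ALPHABET = string.ascii_letters + string.digits  # characters that need no JSON escaping


def padded_chunk(token: str, max_pad: int = 32) -> bytes:
    """Wrap a streamed token with a random-length filler field (hypothetical "pad" key)."""
    pad_len = secrets.randbelow(max_pad + 1)  # uniform in 0..max_pad
    pad = "".join(secrets.choice(PAD_ALPHABET) for _ in range(pad_len))
    return (json.dumps({"delta": token, "pad": pad}) + "\n").encode("utf-8")


def strip_padding(chunk: bytes) -> str:
    """Client side: keep the token, ignore the filler."""
    return json.loads(chunk)["delta"]


if __name__ == "__main__":
    # The same token now produces different chunk sizes, so size no longer maps to token length.
    print(sorted({len(padded_chunk("hi")) for _ in range(20)}))
    print(strip_padding(padded_chunk("hi")))  # → hi
```

Because the filler length is drawn independently for each chunk, the observed size becomes the token length plus random noise, which blunts the subtraction trick an observer would otherwise use.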

Microsoft is also recommending that users concerned about their privacy when talking to AI providers avoid discussing highly sensitive topics on untrusted networks, use a VPN for an extra layer of protection, use LLMs in non-streaming mode, and switch to providers that have implemented mitigations.

The disclosure comes as a new evaluation of eight open-weight LLMs from Alibaba (Qwen3-32B), DeepSeek (v3.1), Google (Gemma 3-1B-IT), Meta (Llama 3.3-70B-Instruct), Microsoft (Phi-4), Mistral (Large-2 aka Large-Instruct-2407), OpenAI (GPT-OSS-20b), and Zhipu AI (GLM 4.5-Air) has found them to be highly susceptible to adversarial manipulation, especially when it comes to multi-turn attacks.

Comparative vulnerability analysis showing attack success rates across tested models for both single-turn and multi-turn scenarios

“These results underscore a systemic inability of current open-weight models to maintain safety guardrails across extended interactions,” Cisco AI Defense researchers Amy Chang, Nicholas Conley, Harish Santhanalakshmi Ganesan, and Adam Swanda said in an accompanying paper.

“We assess that alignment strategies and lab priorities significantly influence resilience: capability-focused models such as Llama 3.3 and Qwen 3 demonstrate higher multi-turn susceptibility, whereas safety-oriented designs such as Google Gemma 3 exhibit more balanced performance.”

These findings show that organizations adopting open-source models can face operational risks in the absence of additional security guardrails, adding to a growing body of research exposing fundamental security weaknesses in LLMs and AI chatbots since OpenAI’s ChatGPT made its public debut in November 2022.

This makes it crucial that developers enforce adequate security controls when integrating such capabilities into their workflows, fine-tune open-weight models to be more robust to jailbreaks and other attacks, conduct periodic AI red-teaming assessments, and implement strict system prompts aligned with defined use cases.