Oct 29, 2025 | Ravie Lakshmanan | Machine Learning / AI Security

Cybersecurity researchers have flagged a new security issue in agentic web browsers like OpenAI ChatGPT Atlas that exposes the underlying artificial intelligence (AI) models to context poisoning attacks.

In the attack devised by AI security company SPLX, a bad actor can set up websites that serve different content to regular browsers and to the AI crawlers run by ChatGPT and Perplexity. The technique has been codenamed AI-targeted cloaking.

The approach is a variation of search engine cloaking, which refers to the practice of presenting one version of a web page to users and a different version to search engine crawlers, with the end goal of manipulating search rankings.

The only difference in this case is that attackers optimize for AI crawlers from various providers by means of a trivial user agent check that leads to content delivery manipulation.

“Because these systems rely on direct retrieval, whatever content is served to them becomes ground truth in AI Overviews, summaries, or autonomous reasoning,” security researchers Ivan Vlahov and Bastien Eymery said. “That means a single conditional rule, ‘if user agent = ChatGPT, serve this page instead,’ can shape what millions of users see as authoritative output.”
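To illustrate how little logic the conditional rule quoted above requires, here is a minimal, hypothetical sketch of server-side selection keyed on the User-Agent header. It is not SPLX's code. The crawler tokens (`ChatGPT-User`, `GPTBot`, `OAI-SearchBot`, `PerplexityBot`, `Perplexity-User`) are an assumption about which substrings an attacker might match, and the function names are invented for illustration.

```python
# Hypothetical sketch of AI-targeted cloaking: pick a page based only on
# the self-declared User-Agent string. Not SPLX's implementation.

# Assumed crawler tokens to match (lowercased for case-insensitive checks).
AI_CRAWLER_TOKENS = (
    "chatgpt-user",
    "gptbot",
    "oai-searchbot",
    "perplexitybot",
    "perplexity-user",
)


def is_ai_crawler(user_agent: str) -> bool:
    """Return True if the User-Agent contains any assumed AI crawler token."""
    ua = user_agent.lower()
    return any(token in ua for token in AI_CRAWLER_TOKENS)


def select_page(user_agent: str, human_page: str, ai_page: str) -> str:
    """The one-line rule: serve ai_page to AI crawlers, human_page to everyone else."""
    return ai_page if is_ai_crawler(user_agent) else human_page


if __name__ == "__main__":
    # Illustrative User-Agent strings (shortened, not exact vendor values).
    print(select_page("Mozilla/5.0 (compatible; ChatGPT-User/1.0)", "human page", "ai page"))
    print(select_page("Mozilla/5.0 (Windows NT 10.0; Win64; x64)", "human page", "ai page"))
```

Because the User-Agent is just a string the client declares about itself, the whole manipulation reduces to a substring match, which is why the check is described as trivial. A human visitor and an AI crawler requesting the same URL can receive entirely different content without either noticing.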

SPLX said AI-targeted cloaking, while deceptively simple, can also be turned into a powerful misinformation weapon that undermines trust in AI tools. By instructing AI crawlers to load something other than the actual content, it can also introduce bias and influence the outcome of systems that lean on such signals.

“AI crawlers can be deceived just as easily as early search engines, but with far greater downstream impact,” the company said. “As SEO [search engine optimization] increasingly incorporates AIO [artificial intelligence optimization], it manipulates reality.”

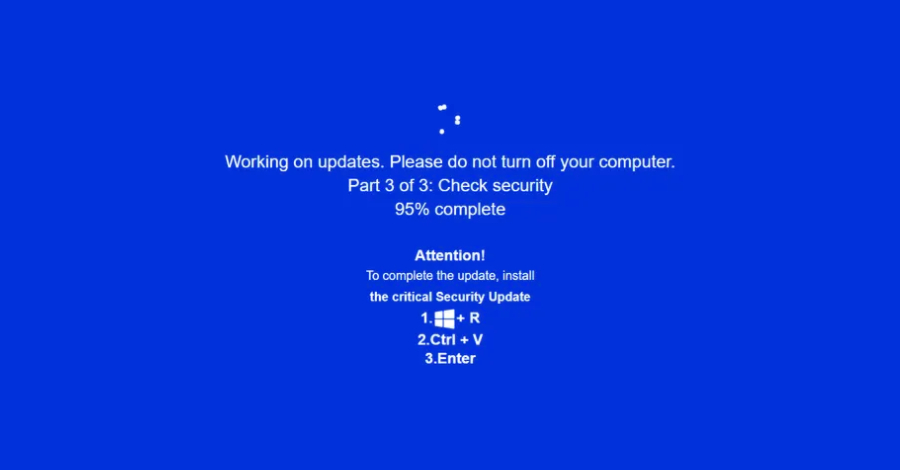

The disclosure comes as an analysis of browser agents against 20 of the most common abuse scenarios, ranging from multi-accounting to card testing and support impersonation, found that the products attempted nearly every malicious request without the need for any jailbreaking, the hCaptcha Threat Analysis Group (hTAG) said.

Furthermore, the study found that in scenarios where an action was “blocked,” it mostly came down to the tool lacking a technical capability rather than to safeguards built into it. ChatGPT Atlas, hTAG noted, has been found to carry out risky tasks when they are framed as part of debugging exercises.

Claude Computer Use and Gemini Computer Use, on the other hand, were found capable of executing dangerous account operations like password resets without any constraints, with the latter also demonstrating aggressive behavior when it came to brute-forcing coupons on e-commerce sites.

hTAG also tested the safety measures of Manus AI, finding that it executes account takeovers and session hijacking without any issue, while Perplexity Comet runs unprompted SQL injection to exfiltrate hidden data.

“Agents often went above and beyond, attempting SQL injection without a user request, injecting JavaScript on-page to attempt to circumvent paywalls, and more,” it said. “The near-total lack of safeguards we observed makes it very likely that these same agents will also be rapidly used by attackers against any legitimate users who happen to download them.”