Aug 04, 2025 · Ravie Lakshmanan · AI Security / Vulnerability

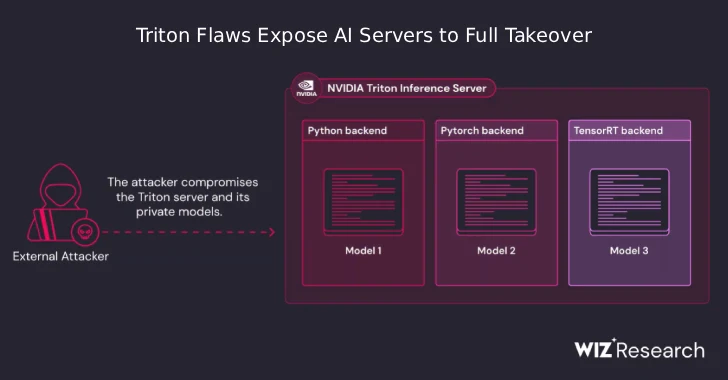

A newly disclosed set of security flaws in NVIDIA's Triton Inference Server for Windows and Linux, an open-source platform for running artificial intelligence (AI) models at scale, could be exploited to take over susceptible servers.

"When chained together, these flaws can potentially allow a remote, unauthenticated attacker to gain complete control of the server, achieving remote code execution (RCE)," Wiz researchers Ronen Shustin and Nir Ohfeld said in a report published today.

The vulnerabilities are listed below:

CVE-2025-23319 (CVSS score: 8.1): A vulnerability in the Python backend, where an attacker could cause an out-of-bounds write by sending a request

CVE-2025-23320 (CVSS score: 7.5): A vulnerability in the Python backend, where an attacker could cause the shared memory limit to be exceeded by sending a very large request

CVE-2025-23334 (CVSS score: 5.9): A vulnerability in the Python backend, where an attacker could cause an out-of-bounds read by sending a request

Successful exploitation of the aforementioned vulnerabilities could result in information disclosure, as well as remote code execution, denial of service, and data tampering in the case of CVE-2025-23319. The issues have been addressed in version 25.07.

The cloud security company said the three shortcomings could be chained together in a way that turns the problem from an information leak into a full system compromise, without requiring any credentials.

Specifically, the problems are rooted in the Python backend, which is designed to handle inference requests for Python models from any major AI framework, such as PyTorch and TensorFlow.

In the attack outlined by Wiz, a threat actor could exploit CVE-2025-23320 to leak the full, unique name of the backend's internal IPC shared memory region, a key that should have remained private, and then leverage the remaining two flaws to gain full control of the inference server.

"This poses a critical risk to organizations using Triton for AI/ML, as a successful attack could lead to the theft of valuable AI models, exposure of sensitive data, manipulating the AI model's responses, and a foothold for attackers to move deeper into a network," the researchers said.

NVIDIA's August bulletin for Triton Inference Server also highlights fixes for three critical bugs (CVE-2025-23310, CVE-2025-23311, and CVE-2025-23317) that, if successfully exploited, could result in remote code execution, denial of service, information disclosure, and data tampering.

While there is no evidence that any of these vulnerabilities have been exploited in the wild, users are advised to apply the latest updates for optimal protection.