Artificial intelligence (AI) holds great promise for improving cyber defense and making the lives of security practitioners easier. It can help teams cut through alert fatigue, spot patterns faster, and bring a level of scale that human analysts alone cannot match. But realizing that potential depends on securing the systems that make it possible.

Every organization experimenting with AI in security operations is, knowingly or not, expanding its attack surface. Without clear governance, strong identity controls, and visibility into how AI makes its decisions, even well-intentioned deployments can create risk faster than they reduce it. To truly benefit from AI, defenders need to approach securing it with the same rigor they apply to any other critical system. That means establishing trust in the data it learns from, accountability for the actions it takes, and oversight for the outcomes it produces. When secured correctly, AI can amplify human capability instead of replacing it, helping practitioners work smarter, respond faster, and defend more effectively.

Establishing Trust for Agentic AI Systems

As organizations begin to integrate AI into defensive workflows, identity security becomes the foundation for trust. Every model, script, or autonomous agent operating in a production environment now represents a new identity, one capable of accessing data, issuing commands, and influencing defensive outcomes. If these identities aren't properly governed, the tools meant to strengthen security can quietly become sources of risk.

The emergence of agentic AI systems makes this especially important. These systems don't just analyze; they may act without human intervention. They triage alerts, enrich context, or trigger response playbooks under delegated authority from human operators. Each action is, in effect, a transaction of trust. That trust must be bound to identity, authenticated through policy, and auditable end to end.
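To make the idea of a trust transaction concrete, here is a minimal Python sketch, assuming a hypothetical in-memory policy table (`POLICY`, `HIGH_IMPACT`) rather than any real IAM product. Each request names the agent identity and the human operator it acts for, is checked against policy, and is written to a hash-chained log so that later tampering with a record is detectable.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical policy: actions each agent identity may perform on its own.
POLICY = {
    "triage-agent": {"enrich_alert", "close_duplicate"},
    "response-agent": {"enrich_alert", "isolate_host"},
}
# Hypothetical high-impact actions that always need a named human approver.
HIGH_IMPACT = {"isolate_host"}
GENESIS = "0" * 64


def _digest(record: dict, prev: str) -> str:
    """Hash a record together with the previous entry's hash."""
    body = json.dumps({**record, "prev": prev}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only log where each entry embeds the hash of the previous one."""
    entries: list = field(default_factory=list)

    def append(self, record: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append({**record, "prev": prev, "hash": _digest(record, prev)})

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k not in ("prev", "hash")}
            if entry["prev"] != prev or _digest(record, prev) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


def authorize(log: AuditLog, agent: str, operator: str, action: str,
              approver: Optional[str] = None) -> bool:
    """Allow an action only if policy grants it to this agent identity.
    High-impact actions also need a human approver. Every decision is logged."""
    allowed = action in POLICY.get(agent, set())
    if allowed and action in HIGH_IMPACT and approver is None:
        allowed = False
    log.append({"agent": agent, "on_behalf_of": operator, "action": action,
                "approver": approver, "allowed": allowed})
    return allowed
```

In a real deployment the policy would live in your IAM or policy engine and the log in write-once storage; chaining hashes only makes edits detectable, it does not prevent them.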

The same principles that secure people and services must now apply to AI agents:

Scoped credentials and least privilege to ensure every model or agent can access only the data and functions required for its task.

Strong authentication and key rotation to prevent impersonation or credential leakage.

Activity provenance and audit logging so every AI-initiated action can be traced, validated, and reversed if necessary.

Segmentation and isolation to prevent cross-agent access, ensuring that one compromised process cannot affect others.
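Scoped credentials, strong authentication, and key rotation can be sketched with short-lived, scope-limited tokens signed with HMAC-SHA256 from Python's standard library. The token format, key IDs (`k1`, `k2`), and scope names below are hypothetical; production systems would normally rely on their identity provider and an established format such as OAuth 2.0 access tokens or JWTs rather than a hand-rolled one.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import List, Optional

# Hypothetical signing keys indexed by key ID. "k1" is the previous key,
# still accepted for outstanding tokens; new tokens are signed with "k2".
KEYS = {"k1": b"old-secret", "k2": b"new-secret"}
ACTIVE_KID = "k2"


def issue_token(agent_id: str, scopes: List[str], ttl_s: int = 300,
                now: Optional[float] = None) -> str:
    """Issue a short-lived token bound to one agent identity and explicit scopes."""
    now = time.time() if now is None else now
    claims = {"sub": agent_id, "scopes": sorted(scopes), "exp": now + ttl_s, "kid": ACTIVE_KID}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
    sig = hmac.new(KEYS[ACTIVE_KID], payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}.{sig}"


def check_token(token: str, required_scope: str, now: Optional[float] = None) -> bool:
    """Accept only unexpired tokens with a valid signature and the required scope."""
    now = time.time() if now is None else now
    payload, _, sig = token.partition(".")
    claims = json.loads(base64.urlsafe_b64decode(payload.encode()))
    key = KEYS.get(claims.get("kid"))
    if key is None:  # signing key has been retired
        return False
    expected = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, sig):  # constant-time comparison
        return False
    return now < claims["exp"] and required_scope in claims["scopes"]
```

Rotation works by signing new tokens with the newest key ID while older keys still verify outstanding tokens, then deleting an old key once every token it signed has expired.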

In practice, this means treating every agentic AI system as a first-class identity within your IAM framework. Each should have a defined owner, lifecycle policy, and monitoring scope just like any user or service account. Defensive teams should continuously verify what these agents can do, not just what they were intended to do, because capability often drifts faster than design. With identity established as the foundation, defenders can then turn their attention to securing the broader system.
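One way to check capability drift is to compare what an agent was designed to need with what the IAM system actually grants it, alongside basic ownership and lifecycle checks. The sketch below assumes you can export both as permission sets; the `AgentIdentity` fields and permission strings are illustrative, not taken from any specific IAM product.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet, List


@dataclass
class AgentIdentity:
    name: str
    owner: str                  # accountable human or team
    review_by: date             # lifecycle: re-certify or decommission by this date
    intended: FrozenSet[str]    # permissions the design calls for
    granted: FrozenSet[str]     # permissions actually assigned in the IAM system


def audit_agent(agent: AgentIdentity, today: date) -> List[str]:
    """Return governance findings for one agent identity (empty list if clean)."""
    findings = []
    if not agent.owner:
        findings.append("no owner")
    if today > agent.review_by:
        findings.append("review overdue")
    # Drift: anything granted beyond what the design intended.
    excess = agent.granted - agent.intended
    if excess:
        findings.append("excess permissions: " + ", ".join(sorted(excess)))
    return findings
```

Running a check like this on a schedule, rather than only at provisioning time, is what catches capability that has drifted away from the original design.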

Securing AI: Best Practices for Success

Securing AI begins with protecting the systems that make it possible: the models, data pipelines, and integrations now woven into everyday security operations. Just as we secure networks and endpoints, AI systems must be treated as mission-critical infrastructure that requires layered and continuous defense.

The SANS Secure AI Blueprint outlines a Protect AI track that provides a clear starting point. Built on the SANS Critical AI Security Guidelines, the blueprint defines six control domains that translate directly into practice:

Access Controls: Apply least privilege and strong authentication to every model, dataset, and API. Log and review access continuously to prevent unauthorized use.

Data Controls: Validate, sanitize, and classify all data used for training, augmentation, or inference. Secure storage and lineage tracking reduce the risk of model poisoning or data leakage.

Deployment Strategies: Harden AI pipelines and environments with sandboxing, CI/CD gating, and red-teaming before release. Treat deployment as a controlled, auditable event, not an experiment.

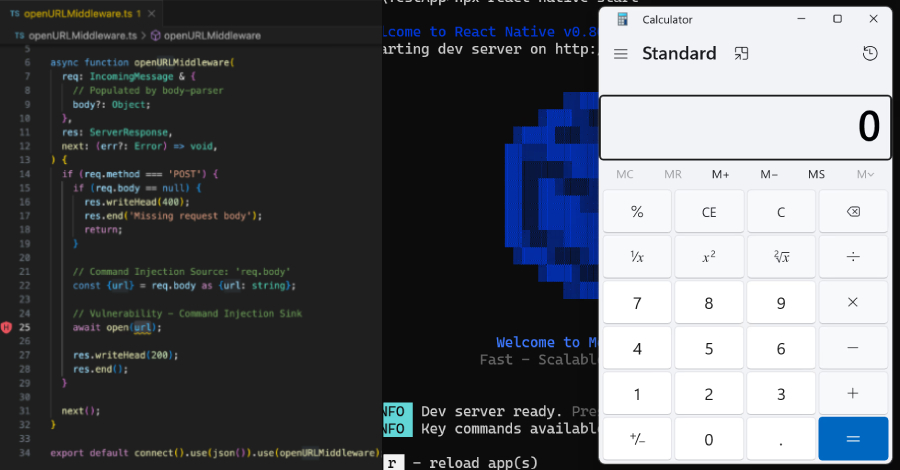

Inference Security: Protect models from prompt injection and misuse by enforcing input/output validation, guardrails, and escalation paths for high-impact actions.

Monitoring: Continuously observe model behavior and output for drift, anomalies, and indicators of compromise. Effective telemetry allows defenders to detect manipulation before it spreads.

Model Security: Version, sign, and integrity-check models throughout their lifecycle to ensure authenticity and prevent unauthorized swaps or retraining.
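The integrity-check part of Model Security can be as simple as pinning a SHA-256 digest per model version and refusing to load anything that does not match. This is a minimal sketch assuming a hypothetical `manifest` that maps version strings to digests; in practice that manifest should itself be signed (for example with Sigstore or another asymmetric signing scheme), which is not shown here.

```python
import hashlib
from pathlib import Path
from typing import Dict


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a model artifact in chunks so large weight files don't load into memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_model(path: Path, manifest: Dict[str, str], version: str) -> bool:
    """Refuse unknown versions and any artifact whose bytes differ from the pinned digest."""
    expected = manifest.get(version)
    return expected is not None and sha256_file(path) == expected
```

A loader would call `verify_model` before deserializing weights, so a swapped or silently retrained file fails closed instead of being served.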

These controls align directly with NIST's AI Risk Management Framework and the OWASP Top 10 for LLMs, which highlights the most common and consequential vulnerabilities in AI systems, from prompt injection and insecure plugin integrations to model poisoning and data exposure. Applying mitigations from these frameworks within the six domains helps translate guidance into operational defense. Once these foundations are in place, teams can focus on using AI responsibly by knowing when to trust automation and when to keep humans in the loop.

Balancing Augmentation and Automation

AI systems are capable of assisting human practitioners like an intern that never sleeps. However, it's critical for security teams to differentiate what to automate from what to augment. Some tasks benefit from full automation, especially those that are repeatable, measurable, and low-risk if an error occurs. Others demand direct human oversight because context, intuition, or ethics matter more than speed.

Threat enrichment, log parsing, and alert deduplication are prime candidates for automation. These are data-heavy, pattern-driven processes where consistency outperforms creativity. By contrast, incident scoping, attribution, and response decisions rely on context that AI cannot fully grasp. Here, AI should assist by surfacing indicators, suggesting next steps, or summarizing findings while practitioners retain decision authority.
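Alert deduplication shows why such tasks suit automation: the rule is explicit and its results can be checked. The sketch below assumes alerts are duplicates when they share a rule, host, and indicator and arrive within a fixed window of the first alert in the group; the `Alert` fields and the 300-second default are illustrative choices to tune against your own data.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Alert:
    rule: str
    host: str
    indicator: str
    ts: float  # seconds since epoch


def dedupe(alerts: List[Alert], window_s: float = 300.0) -> List[Tuple[Alert, int]]:
    """Collapse alerts with the same (rule, host, indicator) fingerprint that arrive
    within window_s of the first alert in their group.
    Returns (representative alert, number of alerts collapsed into it)."""
    open_groups: Dict[Tuple[str, str, str], list] = {}
    groups: List[list] = []
    for alert in sorted(alerts, key=lambda a: a.ts):
        key = (alert.rule, alert.host, alert.indicator)
        group = open_groups.get(key)
        if group is not None and alert.ts - group[0].ts <= window_s:
            group[1] += 1  # duplicate: count it, keep the first alert as representative
        else:
            group = [alert, 1]  # new fingerprint or window expired: start a new group
            open_groups[key] = group
            groups.append(group)
    return [(rep, count) for rep, count in groups]
```

Because the output is deterministic, a team can measure how often merged alerts were genuinely redundant before letting this run unattended.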

Finding that balance requires maturity in process design. Security teams should categorize workflows by their tolerance for error and the cost of automation failure. Wherever the risk of false positives or missed nuance is high, keep humans in the loop. Wherever precision can be objectively measured, let AI accelerate the work.
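The categorization described above can be expressed as a small routing rule. This is a hedged sketch: the 1 to 5 failure-cost scale, the default thresholds, and the `Workflow` fields are hypothetical parameters a team would define for itself, and precision is assumed to be measured against analyst-labeled outcomes.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Mode(Enum):
    AUTOMATE = "automate"  # AI acts; humans review samples after the fact
    AUGMENT = "augment"    # AI suggests; a human makes the decision


@dataclass
class Workflow:
    name: str
    failure_cost: int                    # 1 (trivial) to 5 (severe) if an automated step is wrong
    measured_precision: Optional[float]  # precision vs. labeled outcomes, None if not measurable


def choose_mode(wf: Workflow, max_cost: int = 2, min_precision: float = 0.98) -> Mode:
    """Automate only low-cost workflows whose precision is measured and high enough."""
    if wf.measured_precision is None:
        return Mode.AUGMENT  # quality can't be measured objectively: keep a human in the loop
    if wf.failure_cost <= max_cost and wf.measured_precision >= min_precision:
        return Mode.AUTOMATE
    return Mode.AUGMENT
```

Note that high precision alone is not enough: a workflow like host isolation stays augment-only because the cost of a wrong automated action is high.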

Join us at SANS Surge 2026!

I'll dive deeper into this topic during my keynote at SANS Surge 2026 (Feb. 23-28, 2026), where we'll explore how security teams can ensure AI systems are safe to depend on. If your organization is moving fast on AI adoption, this event will help you move more securely. Join us to connect with peers, learn from experts, and see what secure AI in practice really looks like.

Register for SANS Surge 2026 here.

Note: This article was contributed by Frank Kim, SANS Institute Fellow.
