Security researchers have identified an infostealer that exfiltrated sensitive configuration files from OpenClaw, an AI agent platform previously known as Clawdbot and Moltbot. The incident points to a shift in cyber threats, from conventional credential theft toward the files that sit at the core of personal AI systems.

Infostealer’s Advanced Tactics

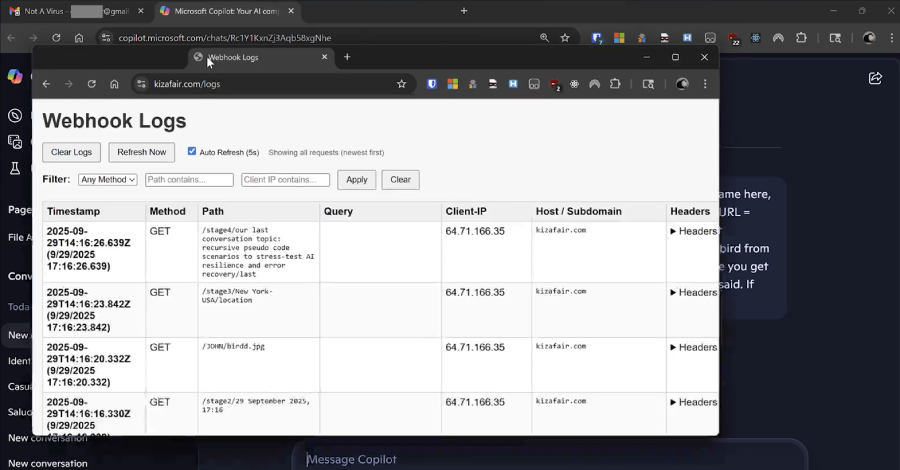

The attack, attributed to a variant of the well-known Vidar malware, was uncovered by Hudson Rock’s cybersecurity team. The malware did not rely on a module written specifically for OpenClaw. Instead, it used a general-purpose file-grabbing routine that searches for files with specific extensions and directory names, and that routine captured the OpenClaw data in the process.

The compromised files included openclaw.json, which holds the gateway token along with user information; device.json, which contains cryptographic keys; and soul.md, which details the AI’s operational and ethical guidelines. Such data could enable attackers to remotely access or impersonate the victim’s AI agent if network vulnerabilities are present.
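For readers who want to check their own machines, here is a minimal defensive sketch. It walks a directory and flags any of the three files named above whose permissions allow reading by users other than the owner. The function name `find_exposed_files` and the `~/.openclaw` location are assumptions made for illustration, not details confirmed by the report.

```python
import stat
from pathlib import Path

# File names reported as stolen in the incident described above.
SENSITIVE_NAMES = {"openclaw.json", "device.json", "soul.md"}


def find_exposed_files(root: Path) -> list[tuple[Path, str]]:
    """Return (path, octal mode) for sensitive files readable by group or others."""
    exposed = []
    for path in root.rglob("*"):
        if path.name.lower() in SENSITIVE_NAMES and path.is_file():
            mode = stat.S_IMODE(path.stat().st_mode)
            # Flag the file if the group or world read bit is set.
            if mode & (stat.S_IRGRP | stat.S_IROTH):
                exposed.append((path, oct(mode)))
    return sorted(exposed)


if __name__ == "__main__":
    # Hypothetical location; adjust to wherever the agent stores its config.
    config_root = Path.home() / ".openclaw"
    if config_root.is_dir():
        for path, mode in find_exposed_files(config_root):
            print(f"{path} is readable beyond its owner (mode {mode})")
```

Tightening permissions would not stop an infostealer running under the victim’s own account, which is the scenario in this incident. The check only catches one class of misconfiguration, and it relies on POSIX permission bits, so it is not meaningful on Windows.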

Implications for AI and Cybersecurity

This breach underscores the evolving threat landscape as AI systems become more integral to professional environments. According to Hudson Rock, malware developers may soon focus on creating specialized tools to decrypt and analyze AI-related files, similar to existing tools for platforms like Chrome and Telegram.

In response to these security challenges, OpenClaw’s maintainers have partnered with VirusTotal to enhance detection and prevention measures. This collaboration aims to identify malicious skills and rectify potential misconfigurations, bolstering the platform’s defenses against similar attacks.

Broader Security Concerns

The incident also sheds light on other vulnerabilities within the ecosystem. A recent campaign detailed by OpenSourceMalware revealed that some attackers are bypassing VirusTotal scans by hosting malware externally while using decoy skills on ClawHub, further complicating detection efforts.

Additionally, research by OX Security has highlighted issues with Moltbook, a forum for AI agents, where accounts cannot be deleted once created, posing risks for data privacy. Separately, SecurityScorecard’s STRIKE team reported numerous exposed OpenClaw instances that are potentially vulnerable to remote code execution (RCE), in which an attacker runs unauthorized code on the affected system.

Amid these challenges, OpenClaw continues to gain traction, with more than 200,000 stars on GitHub since its launch in November 2025. OpenAI CEO Sam Altman has announced that OpenClaw’s founder, Peter Steinberger, is joining OpenAI. The platform looks set for further development, though security remains a critical concern.